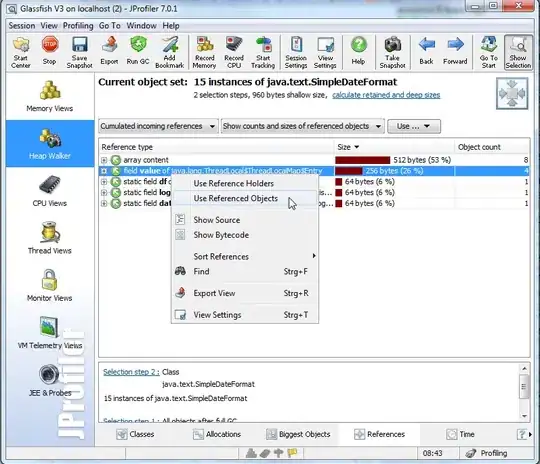

Somebody broke our robots.txt by accidentally adding a literal \n after our Allow: /products/ rules, which cover about 30,000 pages in total. The errors show up on multiple language sites. This is one of our Search Console properties.

I quickly noticed the error and removed it. I asked Google to validate the fix, but about 3 months later the errors are still increasing. See the images below:

Is there anything I can do to speed up the process? I have already started the validation.
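
For anyone who wants to reproduce the problem locally, here is a minimal sketch using Python's standard `urllib.robotparser`. The rules, the `example.com` URL, and the `is_allowed` helper are hypothetical placeholders, not our real file. Note that Python's parser applies the first matching rule, while Google uses the most specific match, so this only illustrates how a stray \n changes the rule's path. It does not show how Googlebot itself interprets the file.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt body lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules: the real file is not shown in this post.
FIXED = "User-agent: *\nAllow: /products/\nDisallow: /\n"
# Same rules, but with a literal backslash + "n" appended to the Allow path.
BROKEN = "User-agent: *\nAllow: /products/\\n\nDisallow: /\n"

url = "https://example.com/products/shoe"  # placeholder URL
print("fixed: ", is_allowed(FIXED, url))
print("broken:", is_allowed(BROKEN, url))
```

With these placeholder rules, the broken Allow line no longer matches the product URL, so the URL falls through to the Disallow rule.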