Tracking down a performance problem (a micro one, I know), I ended up with this test program. Compiled against .NET Framework 4.5 in Release mode, it takes around 10 ms on my machine.

What bothers me is that if I remove this line

    public int[] value1 = new int[80];

the time drops to around 2 ms. It looks like some kind of memory fragmentation problem, but I have failed to explain why. I tested the program on .NET Core 2.0 with the same results. Can anyone explain this behaviour?

    using System;
    using System.Collections.Generic;
    using System.Diagnostics;

    namespace ConsoleApp4
    {
        public class MyObject
        {
            public int value = 1;
            public int[] value1 = new int[80];
        }

        class Program
        {
            static void Main(string[] args)
            {
                var list = new List<MyObject>();
                for (int i = 0; i < 500000; i++)
                {
                    list.Add(new MyObject());
                }

                long total = 0;
                for (int i = 0; i < 200; i++)
                {
                    int counter = 0;
                    Stopwatch timer = Stopwatch.StartNew();
                    foreach (var obj in list)
                    {
                        if (obj.value == 1)
                            counter++;
                    }
                    timer.Stop();
                    total += timer.ElapsedMilliseconds;
                }

                Console.WriteLine(total / 200);
                Console.ReadKey();
            }
        }
    }

UPDATE:

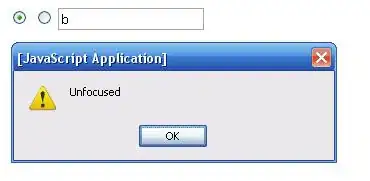

After some research I came to the conclusion that the difference is just processor cache access time. In the VS profiler, the cache misses seem to be a lot higher when the array is present:

- Without array

- With array
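To make the cache explanation easier to check, here is a minimal sketch that measures both layouts in a single run. The names `Item`, `ItemWithArray` and `CacheDemo` are hypothetical and not part of the original program; inheritance is used only so that one loop can read `value` from either kind of object. The assumption is that consecutive allocations land next to each other on the heap: without the array the `value` fields sit a few dozen bytes apart, so several of them share one cache line (typically 64 bytes), while each `int[80]` puts at least 320 bytes of array data between neighbouring objects, so nearly every read of `value` touches a different cache line.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

// Hypothetical type for this sketch: MyObject without the array.
public class Item
{
    public int value = 1;
}

// Same as Item plus the 80-element array from the question.
public class ItemWithArray : Item
{
    public int[] value1 = new int[80];
}

public static class CacheDemo
{
    // Allocates 'count' objects back to back, as the original loop does.
    public static List<Item> Build(int count, bool withArray)
    {
        var list = new List<Item>(count);
        for (int i = 0; i < count; i++)
        {
            list.Add(withArray ? new ItemWithArray() : new Item());
        }
        return list;
    }

    // One pass over the list that touches only the 'value' field.
    public static int CountOnes(List<Item> list)
    {
        int counter = 0;
        foreach (var obj in list)
        {
            if (obj.value == 1)
                counter++;
        }
        return counter;
    }

    // Average milliseconds per pass; ticks avoid truncation to whole milliseconds.
    public static double AverageMs(List<Item> list, int runs)
    {
        long ticks = 0;
        long checksum = 0;
        for (int i = 0; i < runs; i++)
        {
            Stopwatch timer = Stopwatch.StartNew();
            checksum += CountOnes(list);
            timer.Stop();
            ticks += timer.ElapsedTicks;
        }
        // Using the result keeps the loop from being optimized away.
        if (checksum != (long)runs * list.Count)
            throw new InvalidOperationException("unexpected count");
        return ticks * 1000.0 / Stopwatch.Frequency / runs;
    }

    public static void Main()
    {
        var compact = Build(500000, false);
        var spread = Build(500000, true);

        Console.WriteLine("Without array: {0:F2} ms", AverageMs(compact, 200));
        Console.WriteLine("With array:    {0:F2} ms", AverageMs(spread, 200));
    }
}
```

Both lists hold the same number of references and run the same loop, so any gap between the two averages should come from where the objects sit in memory rather than from the work done per element. The exact numbers will depend on the machine, the runtime and how the garbage collector has arranged the heap.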