I have 3 rather large files (67 GB, 36 GB, 30 GB) that I need to train models on. However, the features are rows and the samples are columns. Since Dask hasn't implemented transpose and stores DataFrames split by row, I need to write something to do this myself. Is there a way I can efficiently transpose the files without loading them into memory?

I have 16 GB of RAM at my disposal and am using a Jupyter notebook. I have written some rather slow code and would really appreciate a faster solution. At its current speed, the code below will take a month to finish all the files. The slowest step, by a few orders of magnitude, is awk.

    import os
    import subprocess

    import dask.dataframe as dd
    import pandas as pd
    from IPython.display import clear_output, display

    # the file is tab-separated, so tell Dask which delimiter to use
    df = dd.read_csv('~/VeryLarge.tsv', sep='\t')
    n_cols = len(df.columns)
    tmp = os.path.expanduser('~/file.temp')

    with open('output.csv', 'wb') as fout:
        for i in range(1, n_cols + 1):
            print('AWKing')
            # read a column from the original data and store it elsewhere
            x = "awk -F'\\t' '{print $" + str(i) + "}' ~/VeryLarge.tsv > ~/file.temp"
            subprocess.check_call(x, shell=True)
            print('Reading')
            # load and transpose the column
            col = pd.read_csv(tmp)
            row = col.T
            display(row)
            print('Deleting')
            # remove the temporary file created
            os.remove(tmp)
            print('Storing')
            # store the row in its own csv just to be safe. not entirely necessary
            row_path = os.path.expanduser('~/columns/col_{:09d}'.format(i))
            row.to_csv(row_path, header=False)
            print('Appending')
            # append the row (transposed column) to the new file
            with open(row_path, 'rb') as fin:
                for line in fin:
                    fout.write(line)
            clear_output()
            # just a measure of progress
            print(i / n_cols)
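For comparison, the loop above rescans the whole input once per column, so awk reads the full file as many times as there are samples. A possible alternative is a block-wise transpose that reads the input only once: take a block of rows, transpose it in memory, write it to a part file, and finally stitch the part files together line by line. The following is only a minimal sketch of that idea, not a tuned solution. The names `transpose_tsv`, `work_dir` and `chunk_rows` are made up for illustration, and it assumes every row has the same number of tab-separated fields and that no field contains a tab or newline.

```python
import os
from contextlib import ExitStack
from itertools import islice


def transpose_tsv(src, dst, work_dir, chunk_rows=20_000):
    """Transpose a tab-separated file without holding all of it in memory.

    Hypothetical helper: assumes rectangular data with plain (unquoted) fields.
    """
    os.makedirs(work_dir, exist_ok=True)
    parts = []

    # Pass 1: read a block of rows, transpose it, write it to its own part file.
    with open(src) as fin:
        while True:
            block = [line.rstrip("\n").split("\t")
                     for line in islice(fin, chunk_rows)]
            if not block:
                break
            part = os.path.join(work_dir, "part_{:06d}.tsv".format(len(parts)))
            with open(part, "w") as fpart:
                # each input column becomes one line of the part file
                for column in zip(*block):
                    fpart.write("\t".join(column) + "\n")
            parts.append(part)

    # Pass 2: output line k is line k of every part file, joined left to right.
    with ExitStack() as stack, open(dst, "w") as fout:
        handles = [stack.enter_context(open(p)) for p in parts]
        for lines in zip(*handles):
            fout.write("\t".join(line.rstrip("\n") for line in lines) + "\n")

    # Clean up the intermediate part files.
    for p in parts:
        os.remove(p)
```

A call might look like `transpose_tsv('VeryLarge.tsv', 'output.tsv', 'parts')`. The block size trades memory against the number of part files: with 10 million rows and `chunk_rows=20_000` the second pass opens 500 files at once, which has to stay under the operating system's open-file limit, so the value would need tuning for the actual machine.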

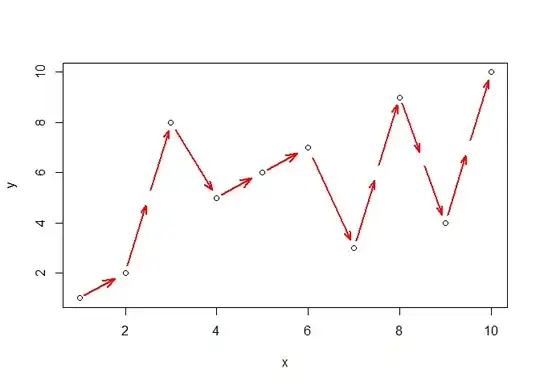

The data itself is 10 million rows (features) and 2,000 columns (samples). It just needs to be transposed. Currently, it looks like this: