Disclaimer: I did think about posting this on Math Stack Exchange or a similar site. But my friends who major in math tell me they rarely use Einstein summation, while machine learning uses it heavily, so I'm posting the problem here (framed as optimizing algorithm performance).

While researching matrix computation (e.g. the minimum number of element-wise multiplications needed), I tried to compute the following gradient:

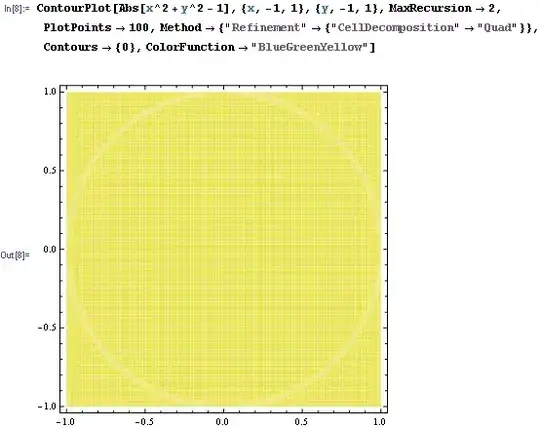

where ABC means contracting the three matrices along their first axis (e.g. matrices of shape 2x3, 2x4 and 2x5 become a 3x4x5 tensor, with the size-2 axis summed over). In words: take the gradient of the norm of the three-matrix contraction ABC with respect to A, then take the norm of that gradient and differentiate it with respect to A again.
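As a concrete sketch of the first step, here is the contraction and its gradient with respect to A written with `np.einsum`, checked by central finite differences. This assumes "norm" means half the squared Frobenius norm (so constant factors drop out), and uses the 2x3, 2x4, 2x5 shapes from the example with purely illustrative random data; the helper names `contract`, `loss` and `grad_A` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
# Illustrative shapes from the example: all three matrices share a first axis of size 2.
A = rng.standard_normal((2, 3))
B = rng.standard_normal((2, 4))
C = rng.standard_normal((2, 5))

def contract(A, B, C):
    # ABC[a, b, c] = sum_i A[i, a] * B[i, b] * C[i, c]
    return np.einsum('ia,ib,ic->abc', A, B, C)

def loss(A, B, C):
    # Assumed loss: half the squared Frobenius norm of ABC.
    T = contract(A, B, C)
    return 0.5 * np.sum(T ** 2)

def grad_A(A, B, C):
    # d loss / d A[k, a] = sum_{b,c} ABC[a, b, c] * B[k, b] * C[k, c]
    T = contract(A, B, C)
    return np.einsum('abc,kb,kc->ka', T, B, C)

# Central finite differences; exact up to rounding because loss is quadratic in A.
eps = 1e-6
num = np.zeros_like(A)
for idx in np.ndindex(*A.shape):
    E = np.zeros_like(A)
    E[idx] = eps
    num[idx] = (loss(A + E, B, C) - loss(A - E, B, C)) / (2 * eps)

print(contract(A, B, C).shape)                       # (3, 4, 5)
print(np.allclose(grad_A(A, B, C), num, atol=1e-6))  # True
```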

This is equivalent to the following, after a little simplification (verified with autograd):

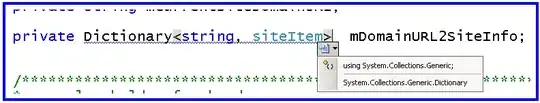

We can write this in Einstein summation form, as accepted by the `einsum` function in many packages (NumPy, TensorFlow, etc.):

```python
import numpy as np
np.einsum('ia,ib,ic,jb,jc,jd,je,kd,ke->ka', A, B, C, B, C, B, C, B, C)
```
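Without an autograd library, a finite-difference check can confirm that this nine-operand expression is the gradient of the second norm. The sketch below assumes both norms are half squared Frobenius norms and uses illustrative random inputs; `post_einsum`, `grad_A` and `outer_loss` are hypothetical helper names. It also compares against `optimize=True` and prints the pairwise contraction order that `np.einsum_path` picks, which is where most of the practical speed-up for a long operand list comes from.

```python
import numpy as np

rng = np.random.default_rng(1)
# Illustrative random inputs; shapes follow the 2x3, 2x4, 2x5 example.
A = rng.standard_normal((2, 3))
B = rng.standard_normal((2, 4))
C = rng.standard_normal((2, 5))

SUBSCRIPTS = 'ia,ib,ic,jb,jc,jd,je,kd,ke->ka'

def post_einsum(A, B, C, optimize=False):
    # The expression from the question, evaluated directly.
    return np.einsum(SUBSCRIPTS, A, B, C, B, C, B, C, B, C, optimize=optimize)

def grad_A(A, B, C):
    # Assumed first step: gradient of 0.5 * ||ABC||^2 with respect to A.
    T = np.einsum('ia,ib,ic->abc', A, B, C)
    return np.einsum('abc,kb,kc->ka', T, B, C)

def outer_loss(A):
    # Assumed second step: half the squared norm of that gradient.
    return 0.5 * np.sum(grad_A(A, B, C) ** 2)

# Central finite differences; exact up to rounding because outer_loss is quadratic in A.
eps = 1e-3
num = np.zeros_like(A)
for idx in np.ndindex(*A.shape):
    E = np.zeros_like(A)
    E[idx] = eps
    num[idx] = (outer_loss(A + E) - outer_loss(A - E)) / (2 * eps)

direct = post_einsum(A, B, C)
print(np.allclose(direct, num))                                  # True
print(np.allclose(direct, post_einsum(A, B, C, optimize=True)))  # True

# Inspect the pairwise contraction order NumPy's greedy optimizer would choose.
path, report = np.einsum_path(SUBSCRIPTS, A, B, C, B, C, B, C, B, C, optimize='greedy')
print(report)
```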

While writing this, I noticed that B and C appear again and again in the summation. Can those repeated B and C factors be simplified into a matrix power of some kind? That should make the computation logarithmically faster. I tried simplifying it by hand but couldn't figure it out.
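For context on the "logarithmically faster" part: the gain a matrix-power rewrite would buy comes from exponentiation by squaring, which computes M^n with O(log n) matrix multiplications instead of n - 1. The sketch below only illustrates that cost argument; `M` is a hypothetical square matrix (an orthogonal one, so its powers stay well scaled), not something derived from the einsum above, and `matpow_by_squaring` is a hypothetical helper checked against `np.linalg.matrix_power`.

```python
import numpy as np

def matpow_by_squaring(M, n):
    """Compute the matrix power M**n (n >= 0) and count the matrix products used."""
    result = np.eye(M.shape[0], dtype=M.dtype)
    base = M.copy()
    products = 0  # number of matrix multiplications performed
    while n > 0:
        if n & 1:              # current binary digit of n is 1: fold base into result
            result = result @ base
            products += 1
        n >>= 1
        if n:                  # square base only if more digits remain
            base = base @ base
            products += 1
    return result, products

rng = np.random.default_rng(2)
# Hypothetical orthogonal matrix, so M**64 neither blows up nor vanishes.
M, _ = np.linalg.qr(rng.standard_normal((4, 4)))
P, count = matpow_by_squaring(M, 64)
print(np.allclose(P, np.linalg.matrix_power(M, 64)))  # True
print(count)  # 7, versus 63 for naive repeated multiplication
```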

Thanks a lot! Please correct me if anything I've said is wrong.