I'm using Scrapy library to scrape a website where people selling cars.

I'm using Python and IBM Cloud functions and Scrapy to achieve that. The idea is to scrape the site every day with IBM Cloud actions and add every vehicle to the vehicles table in Postgres database. That part works fine.

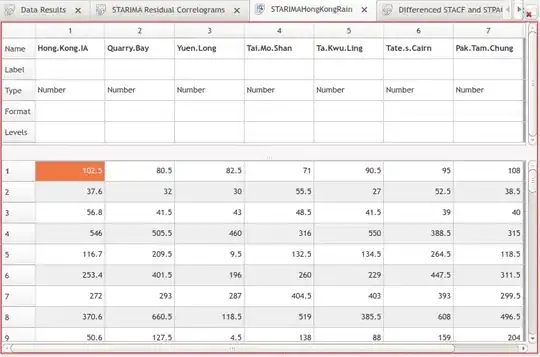

The structure of the vehicles table is as follows:

In the first step is everything except data column (that are the details of the car and those need to be added in the second step) added to vehicles table. That works fine.

The second step is to check every day for every added vehicle from vehicles table if it still exists on the site (it can be deleted or sold). In this step I add every looped vehicle to daily_run_vehicle table. The structure of daily_run_vehicle is as follows:

If the vehicle exists I scrape the details and update vehicles table data column and set handled column to TRUE in daily_run_vehicle table. If it's sold or deleted, then I increment retries column in daily_run_vehicle table.

The second step should run every day.

First I loop through every vehicle from vehicles for which the handled column in daily_run_vehicle table is not TRUE or if handled is False but the number of retries is 5 or more. And for every iteration I add a new record to the daily_run_vehicle table.

The action is prepare-get-vehicles and the code is as follows:

import json

import requests

from common.db import add_logs, get_vehicle_references

from common.db import capture_error

from common.common import APIHOST, NAMESPACE, USER_PASS

def execute_reference(reference, reference_url):

action = "prepare-get-vehicle"

url = APIHOST + "/api/v1/namespaces/" + NAMESPACE + "/actions/" + action

response = requests.post(url,

data=json.dumps({"reference": reference, 'reference_url': reference_url}),

params={"blocking": "false"},

auth=(USER_PASS[0], USER_PASS[1]),

headers={"Content-Type": "application/json"})

print(response.json())

def main(params):

try:

for reference in get_vehicle_references():

execute_reference(reference[0], reference[1])

return {"Success": "prepare-get-vehicles action executed successfully."}

except Exception as e:

capture_error(str(e))

return {"Failure": "prepare-get-vehicles action NOT executed successfully."}

The get_vehicle_references function is as follows:

def get_vehicle_references():

conn = db_connection()

cur = conn.cursor()

try:

s = "SELECT reference, reference_url FROM vehicles v WHERE (NOT EXISTS (select reference from daily_run_vehicle WHERE (handled = %s or (handled = %s and retries >= %s)) AND reference = v.reference))"

cur.execute(s, (True, False, 5))

return cur.fetchall()

except Exception as e:

capture_error(str(e))

conn.close()

prepare-get-vehicle action does nothing except adding new record to daily_run_vehicle table and it is as follows:

def main(params):

try:

insert_daily_run_vehicle(params.get("reference", None), params.get("reference_url", None))

return {"Success.": "The DB filler (daily_run_vehicle) is successfully executed."}

except Exception as e:

capture_error(str(e))

return {"Failure": "The DB filler (daily_run_vehicle) action NOT executed successfully."}

But the problem is that vehicles table has more than 300k records and it becomes bigger and bigger every day. Than the for loop in prepare-get-vehicles action takes to much time to execute on IBM Cloud. There is timeout 600s but the for loop takes much more time.

Any advice how I can solve my problem and how I can loop through a table which has more than 300k record and for every record add new row to daily_run_table?

Thanks in advance.