I am using Vulkan with GLSL compute shaders to do post-processing on a texture rendered in OpenGL. It works fine for the most part, but when the vertical resolution of the texture falls within certain ranges, I see very strange artifacts where gl_GlobalInvocationID appears to be wrong.

An example compute shader that demonstrates the problem looks like this:

    #version 450
    #extension GL_ARB_separate_shader_objects : enable

    #define WORKGROUP_SIZE 32

    layout(local_size_x=WORKGROUP_SIZE, local_size_y=WORKGROUP_SIZE, local_size_z=1) in;

    layout(binding=0, rgba8) uniform image2D input_tex;
    layout(binding=1, rgba8) uniform image2D output_tex;

    void main()
    {
        vec4 red = vec4(1.0, 0.0, 0.0, 1.0);
        vec4 black = vec4(0.0, 0.0, 0.0, 1.0);

        // Paint row 0 and column 0 red, everything else black
        if(gl_GlobalInvocationID.x == 0 || gl_GlobalInvocationID.y == 0)
        {
            imageStore(output_tex, ivec2(gl_GlobalInvocationID.xy), red);
        }
        else
        {
            imageStore(output_tex, ivec2(gl_GlobalInvocationID.xy), black);
        }
    }
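
To compare a read-back of output_tex against what this shader should write, the expected pattern can be generated on the CPU. This is a hypothetical debugging helper (expected_border_pattern and first_mismatch are illustrative names, not part of the original code), and it assumes the image has already been copied back into a tightly packed RGBA8 buffer with rows of width * 4 bytes; the read-back itself is not shown.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>
#include <utility>
#include <vector>

// Hypothetical helper: the image the first shader should produce, assuming a
// tightly packed RGBA8 read-back (4 bytes per pixel, no row padding).
std::vector<std::uint8_t> expected_border_pattern(std::uint32_t width, std::uint32_t height) {
    std::vector<std::uint8_t> pixels(static_cast<std::size_t>(width) * height * 4);
    for (std::uint32_t y = 0; y < height; ++y) {
        for (std::uint32_t x = 0; x < width; ++x) {
            const std::size_t i = (static_cast<std::size_t>(y) * width + x) * 4;
            const bool edge = (x == 0 || y == 0);  // same test as the shader
            pixels[i + 0] = edge ? 255 : 0;        // red only on row 0 / column 0
            pixels[i + 1] = 0;                     // green
            pixels[i + 2] = 0;                     // blue
            pixels[i + 3] = 255;                   // alpha is 1.0 for both colors
        }
    }
    return pixels;
}

// Returns the (x, y) of the first pixel whose bytes differ from `expected`
// (a too-short `actual` buffer counts as a mismatch), or std::nullopt.
std::optional<std::pair<std::uint32_t, std::uint32_t>> first_mismatch(
        const std::vector<std::uint8_t>& actual,
        const std::vector<std::uint8_t>& expected,
        std::uint32_t width) {
    for (std::size_t i = 0; i < expected.size(); ++i) {
        if (i >= actual.size() || actual[i] != expected[i]) {
            const std::size_t pixel = i / 4;
            return std::make_pair(static_cast<std::uint32_t>(pixel % width),
                                  static_cast<std::uint32_t>(pixel / width));
        }
    }
    return std::nullopt;
}
```

Calling first_mismatch(readback, expected_border_pattern(width, height), width) on a failing size such as 480 rows would report the first pixel where the output deviates from the intended pattern.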

Depending on the vertical resolution, I get odd results: heights of 482 and above, 241-256, 121-128 and 55-64 work fine, but 257-481, 129-240, 65-121 and below 55 are incorrect. See below.

[Screenshot: vertical resolution 482, working]

[Screenshot: vertical resolution 480, broken]

I create the textures via:

    vk::ImageCreateInfo image_create_info(
        vk::ImageCreateFlags(),
        vk::ImageType::e2D,
        vk::Format::eR8G8B8A8Unorm,
        {width, height, 1},
        1,  // mip levels
        1,  // array layers
        vk::SampleCountFlagBits::e1,
        vk::ImageTiling::eOptimal,
        vk::ImageUsageFlagBits::eColorAttachment |
        vk::ImageUsageFlagBits::eSampled |
        vk::ImageUsageFlagBits::eTransferSrc |
        vk::ImageUsageFlagBits::eStorage
    );
    image = device.createImage(image_create_info);

    vk::MemoryRequirements memory_requirements = device.getImageMemoryRequirements(image);
    memory_size = memory_requirements.size;

    vk::ExportMemoryAllocateInfo export_allocate_info(
        vk::ExternalMemoryHandleTypeFlagBits::eOpaqueFd);
    vk::MemoryAllocateInfo memory_allocate_info(
        memory_size,
        memory_type // Standard way of finding this using eDeviceLocal
    );
    memory_allocate_info.pNext = &export_allocate_info;
    device_memory = device.allocateMemory(memory_allocate_info);
    device.bindImageMemory(image, device_memory, 0);

    vk::MemoryGetFdInfoKHR memory_info(device_memory, vk::ExternalMemoryHandleTypeFlagBits::eOpaqueFd);
    opaque_handle = device.getMemoryFdKHR(memory_info, dynamic_loader);

    // Create OpenGL texture
    glCreateTextures(GL_TEXTURE_2D, 1, &opengl_tex);
    glCreateMemoryObjectsEXT(1, &opengl_memory);
    glImportMemoryFdEXT(opengl_memory, memory_size, GL_HANDLE_TYPE_OPAQUE_FD_EXT, opaque_handle);
    glTextureStorageMem2DEXT(opengl_tex, 1, GL_RGBA8, width, height, opengl_memory, 0);
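
For orientation when inspecting memory_size: a tightly packed RGBA8 image needs width * height * 4 bytes, but with eOptimal tiling the memory layout is implementation-defined, so getImageMemoryRequirements may report a different (typically larger) size. The helper below is a hypothetical sanity check (packed_rgba8_size is an illustrative name, not part of the original code).

```cpp
#include <cstdint>

// Hypothetical helper: bytes needed for a tightly packed RGBA8 image
// (4 bytes per texel, no row padding, one mip level, one array layer).
constexpr std::uint64_t packed_rgba8_size(std::uint32_t width, std::uint32_t height) {
    return static_cast<std::uint64_t>(width) * height * 4u;
}

// 480 x 480 is the size that needs to be supported.
static_assert(packed_rgba8_size(480, 480) == 921600, "480 * 480 * 4 bytes");
```

Logging packed_rgba8_size(width, height) next to memory_size for a working height (such as 482) and a broken one (such as 480) may help show whether the failing cases line up with a particular allocation size or padding pattern.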

I render to a texture in OpenGL, then use glBlitFramebuffer(0, 0, width, height, 0, 0, width, height, GL_COLOR_BUFFER_BIT, GL_NEAREST) to copy the rendered image into opengl_tex from above, and then execute the command buffer containing the shader above. After that, I blit the texture back and render it to the screen in OpenGL. Semaphores are used to synchronize the two APIs.

The command buffer is recorded like this:

    vk::CommandBufferBeginInfo begin_info(
        vk::CommandBufferUsageFlagBits());
    command_buffer.begin(begin_info);
    command_buffer.bindPipeline(vk::PipelineBindPoint::eCompute, pipeline);
    command_buffer.bindDescriptorSets(vk::PipelineBindPoint::eCompute, pipeline_layout, 0, 1, &descriptor_set, 0, nullptr);
    // One workgroup per WORKGROUP_SIZE x WORKGROUP_SIZE tile, rounded up to cover partial tiles
    command_buffer.dispatch((uint32_t)ceil(width/float(WORKGROUP_SIZE)), (uint32_t)ceil(height/float(WORKGROUP_SIZE)), 1);
    command_buffer.end();
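
The group counts in the dispatch call can also be computed with integer arithmetic, which avoids the float round-trip of ceil(width/float(WORKGROUP_SIZE)). A minimal sketch, assuming a C++-side WORKGROUP_SIZE constant that matches the shader's local_size_x/local_size_y of 32 (if the host code already defines WORKGROUP_SIZE as a macro, drop the constant below); group_count is a hypothetical name.

```cpp
#include <cstdint>

// Assumed to match local_size_x / local_size_y in the compute shader.
constexpr std::uint32_t WORKGROUP_SIZE = 32;

// Number of workgroups needed to cover `extent` invocations, i.e. ceil(extent / group).
constexpr std::uint32_t group_count(std::uint32_t extent, std::uint32_t group = WORKGROUP_SIZE) {
    return (extent + group - 1) / group;
}

// When the extent is not a multiple of the group size, the rounded-up count
// launches extra invocations past the image edge (482 rows -> 16 groups -> 512 rows).
static_assert(group_count(480) == 15, "480 is an exact multiple of 32");
static_assert(group_count(482) == 16, "482 needs one partial group");
```

With this helper the call would read command_buffer.dispatch(group_count(width), group_count(height), 1).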

I have checked several times that I am not hard-coding a size anywhere (other than the workgroup size).

I also wrote a utility that reads a PNG image, runs the same shader on it and writes out the result (bypassing the OpenGL interop entirely), and that works regardless of the image dimensions.

I need to be able to process a 480x480 image, so any help would be much appreciated. If you need any other information, I can provide more code.

(FYI: I am using a GTX 1080 with NVIDIA driver 410.78 on Linux, and Vulkan 1.1.85.0.)

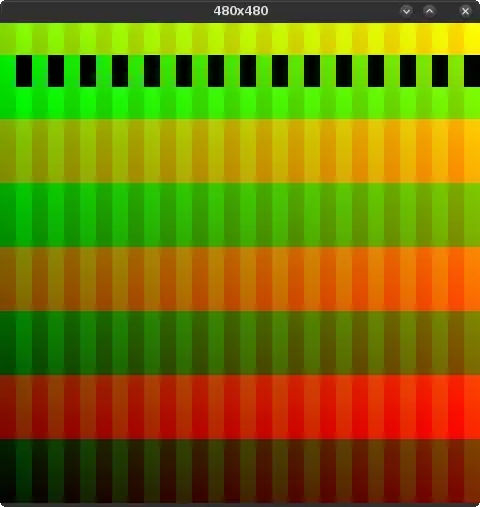

EDIT: Using the following shader snippet:

    vec4 color = vec4(gl_GlobalInvocationID.x/480.0, gl_GlobalInvocationID.y/480.0, 0.0, 1.0);
    imageStore(output_tex, ivec2(gl_GlobalInvocationID.xy), color);
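
With this gradient shader, each pixel's red and green channels encode the coordinates of the invocation that wrote it, so reading the broken image back and decoding those bytes may show which invocation ended up at which pixel. A hypothetical sketch (encode_coord and decode_coord are illustrative names), assuming the 480.0 divisor from the snippet and the usual round-to-nearest UNORM8 conversion; drivers may differ by one unit, so treat the results as estimates.

```cpp
#include <cmath>
#include <cstdint>

// Approximate UNORM8 encoding of the shader's value id / 480.0, clamped to [0, 1].
std::uint8_t encode_coord(std::uint32_t id, float divisor = 480.0f) {
    float v = static_cast<float>(id) / divisor;
    if (v > 1.0f) v = 1.0f;
    return static_cast<std::uint8_t>(std::lround(v * 255.0f));
}

// Inverse mapping: the invocation coordinate a stored byte most likely came from.
// 481 coordinates share 256 levels, so the result can be off by one pixel.
std::uint32_t decode_coord(std::uint8_t byte, float divisor = 480.0f) {
    return static_cast<std::uint32_t>(std::lround(byte / 255.0f * divisor));
}
```

Decoding the red and green bytes of a misplaced pixel would give an estimate of the gl_GlobalInvocationID.xy that stored it, which can then be compared with the pixel's actual position.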

I get the following results:

[Screenshot: 480x482 image, working]

[Screenshot: 480x480 image, broken]