I have a streaming job built on Apache Beam that reads messages from Apache Kafka, processes them, and writes them to Bigtable.

I would like to get ingress/egress throughput metrics for this job, i.e. how many messages per second it reads and how many messages per second it writes.

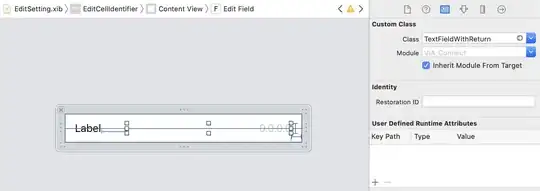

In the job's graph visualization I can see that a throughput metric exists, as the example screenshot shows.

However, according to the documentation, this metric is not available in Stackdriver.

Is there an existing way to get these metrics?