I am trying to use the cvxpy library to solve a very simple least-squares problem, but cvxpy gives me very different results depending on whether I use sum_squares or norm(x, 2) as the loss function. The same happens when I compare the l1 norm with the sum of absolute values.

Do these differences come from the mathematical definition of the optimization problem, or from the library's implementation?

Here is my code example:

s = cvx.Variable(n)

constraints = [cvx.sum(s) == 1, s >= 0, s <= 1]

prob = cvx.Problem(cvx.Minimize(cvx.sum_squares(A @ s - y)), constraints)

prob = cvx.Problem(cvx.Minimize(cvx.norm(A @ s - y, 2)), constraints)
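Written out, I believe the two objectives in the code above correspond to the following problems. This is my own transcription, with $\mathbf{1}$ denoting the all-ones vector:

$$
\min_{s}\ \|As - y\|_2^2 \quad \text{s.t.}\quad \mathbf{1}^\top s = 1,\ \ 0 \le s \le 1
$$

$$
\min_{s}\ \|As - y\|_2 \quad \text{s.t.}\quad \mathbf{1}^\top s = 1,\ \ 0 \le s \le 1
$$

The feasible set is identical in both; only the objective differs, by a square root.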

Both y and s are vectors representing histograms: y is the histogram of the randomized data, and s is the original histogram that I want to recover. A is an n*m "transition" matrix of probabilities describing how s is randomized into y.

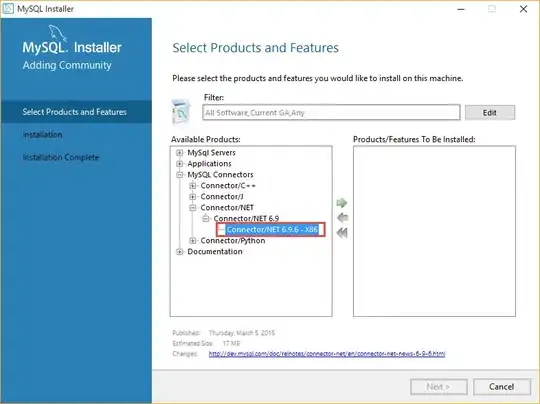

The following are the histograms of variable s: