I'm running a single (not scaled-out) Solr instance on an Azure App Service. The App Service runs Java 8 and a Jetty 9.3 container.

Everything works really well, but when Azure decides to swap to another VM, the JVM sometimes doesn't seem to shut down gracefully and we run into issues.

One of the reasons Azure swaps to another VM is infrastructure maintenance: for example, Windows updates are installed and the app is moved to another machine.

To prevent downtime, Azure spins up the new app and swaps over to it once it's ready. That seems fine, but it does not seem to work well with Solr's locking mechanism.

We are using the default `native` lockType, which should be fine since we're only running a single instance. Solr should remove the write.lock file during shutdown, but this does not always happen.

The Azure Diagnostics tools clearly show this event happening:

And the memory usage shows both apps:

While the second instance is starting, Solr tries to lock the index, but this is not possible because the first instance is still using it (it also still has the write.lock file). Sometimes the first instance doesn't remove the write.lock file, and this is where the problems start.

The second Solr instance will never work correctly without manual intervention (manually deleting the write.lock file).
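To illustrate the overlap, here is a minimal sketch using plain `java.nio` file locks. It is not Solr's or Lucene's actual code: the class name `NativeLockSketch`, its helper methods and the temp directory are made up for illustration. It assumes the `native` lockType comes down to an OS-level lock on `write.lock`. Both holders live in one JVM here, whereas in my case they are two separate processes, so this only approximates the situation.

```java
import java.io.IOException;
import java.nio.channels.FileChannel;
import java.nio.channels.FileLock;
import java.nio.channels.OverlappingFileLockException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

public class NativeLockSketch {

    /** Tries to take an exclusive OS-level lock on the file; returns null if it is already held. */
    static FileLock tryLock(Path lockFile) throws IOException {
        FileChannel channel = FileChannel.open(lockFile,
                StandardOpenOption.CREATE, StandardOpenOption.WRITE);
        try {
            FileLock lock = channel.tryLock();
            if (lock == null) {
                channel.close(); // lock is held by another process
            }
            return lock;
        } catch (OverlappingFileLockException e) {
            channel.close(); // lock is held elsewhere in this JVM
            return null;
        }
    }

    /** Returns {first acquired, second acquired while first is alive, third acquired after release}. */
    static boolean[] demo() throws IOException {
        Path dir = Files.createTempDirectory("index"); // hypothetical index directory
        Path lockFile = dir.resolve("write.lock");

        FileLock first = tryLock(lockFile);   // the "old" instance
        FileLock second = tryLock(lockFile);  // the "new" instance starting during the overlap

        first.channel().close();              // the old instance goes away and releases its lock
        FileLock third = tryLock(lockFile);   // a later attempt by the new instance

        boolean[] result = {first != null, second != null, third != null};
        if (third != null) {
            third.channel().close();
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        boolean[] r = demo();
        System.out.println("first acquired:  " + r[0]);
        System.out.println("second acquired: " + r[1]);
        System.out.println("third acquired:  " + r[2]);
    }
}
```

In this sketch the second attempt fails only while the first holder is alive, which matches the window in which Azure keeps both apps running.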

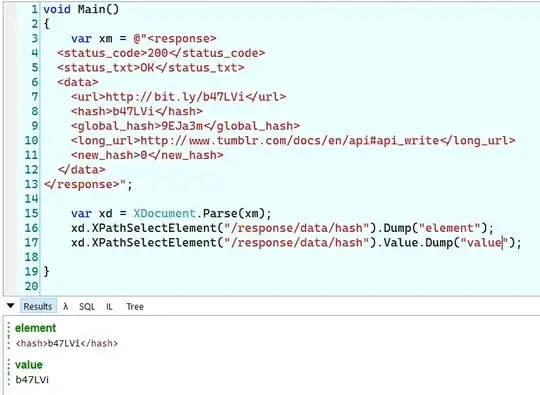

The Solr logs:

Caused by: org.apache.solr.common.SolrException: Index dir 'D:\home\site\wwwroot\server\solr\****\data\index/' of core '*****' is already locked. The most likely cause is another Solr server (or another solr core in this server) also configured to use this directory; other possible causes may be specific to lockType: native

and

org.apache.lucene.store.LockObtainFailedException

What can be done about this?

I was thinking of changing the lockType to a memory-based lock, but I'm not sure that would work, because both instances are alive at the same time for a short period.