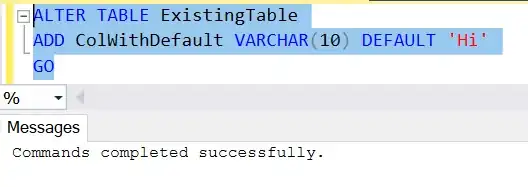

I have a Spark batch application running on a YARN cluster (in AWS EMR). When I read the input to the application from S3 and write the output also to S3, the application takes a lot of time (nearly 6 minutes). I am guessing that this happens because of latency issues when reading and writing to S3. To prove my guess right, I go to my spark event timeline to see what takes time. Here is the timeline:

There are large blank spaces after my `save()` job. The timeline shows that `save()` finishes in about 10-15 seconds. Has it really finished running, with the executors sitting idle during those blank spaces?
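
To put a number on the blank spaces instead of reading them off the UI, the job submission and completion times can be pulled from the Spark event log, which is what the event timeline is drawn from. The following is a minimal sketch, assuming event logging is enabled (`spark.eventLog.enabled`) and the log file is plain JSON lines. The function names `job_intervals` and `idle_gaps`, the 1-second threshold, and the sample lines are hypothetical; real `SparkListenerJobStart`/`SparkListenerJobEnd` events carry many more fields than shown here. The sketch only measures when no job is running; it does not say what the driver is doing during that time.

```python
import json
from typing import Iterable, List, Optional, Tuple


def job_intervals(lines: Iterable[str]) -> List[Tuple[int, int, int]]:
    """Return (job_id, submission_ms, completion_ms) for each finished job,
    parsed from Spark event-log JSON lines, sorted by submission time."""
    starts = {}
    intervals = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        kind = event.get("Event")
        if kind == "SparkListenerJobStart":
            starts[event["Job ID"]] = event["Submission Time"]
        elif kind == "SparkListenerJobEnd":
            job_id = event["Job ID"]
            if job_id in starts:  # ignore ends whose start we never saw
                intervals.append((job_id, starts[job_id], event["Completion Time"]))
    return sorted(intervals, key=lambda t: t[1])


def idle_gaps(intervals: List[Tuple[int, int, int]],
              min_gap_ms: int = 1000) -> List[Tuple[int, int]]:
    """Return (previous_job_id, gap_ms) for every stretch of at least
    min_gap_ms in which no job was running (overlapping jobs are merged)."""
    gaps = []
    latest_end: Optional[int] = None
    last_job: Optional[int] = None
    for job_id, start, end in intervals:
        if latest_end is not None and start - latest_end >= min_gap_ms:
            gaps.append((last_job, start - latest_end))
        if latest_end is None or end > latest_end:
            latest_end = end
            last_job = job_id
    return gaps


if __name__ == "__main__":
    # Made-up, trimmed events: job 0 ends at t=4s, job 1 starts at t=64s.
    sample = [
        '{"Event":"SparkListenerJobStart","Job ID":0,"Submission Time":1000}',
        '{"Event":"SparkListenerJobEnd","Job ID":0,"Completion Time":4000}',
        '{"Event":"SparkListenerJobStart","Job ID":1,"Submission Time":64000}',
        '{"Event":"SparkListenerJobEnd","Job ID":1,"Completion Time":70000}',
    ]
    print(idle_gaps(job_intervals(sample)))  # [(0, 60000)]
```

Running the same functions over the S3 run's event log and the HDFS run's event log would show whether the long gaps after `save()` appear only in the S3 case.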

To improve the runtime, I tried an experiment: I used HDFS instead of S3, reading the input from HDFS and writing the output to HDFS. The application took only 1.5 minutes. The Spark event timeline in this case looks like this:

The blank spaces have disappeared.

- What are the blank spaces in my former event timeline?

- Why are some of the jobs (i.e., the blue boxes in the event timeline) evenly scattered vertically?

- Why are the `foreach()` and `save()` jobs not scattered vertically?

- What does it mean for jobs to be scattered vertically?