We have been trying to set up a production-level Kafka cluster on AWS Linux machines, and so far we have been unsuccessful.

Kafka version: 2.1.0

Machines:

5 r5.xlarge machines for 5 Kafka brokers.

3 t2.medium ZooKeeper nodes.

1 t2.medium node for Schema Registry and related tools (a single instance of each).

1 m5.xlarge machine for Debezium.

Default broker configuration:

num.partitions=15

min.insync.replicas=1

group.max.session.timeout.ms=2000000

log.cleanup.policy=compact

default.replication.factor=3

zookeeper.session.timeout.ms=30000
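As a rough sanity check on these defaults, the sketch below works out how many partition replicas each broker ends up hosting. The 15 partitions, replication factor of 3, and 5 brokers come from the setup above; the topic count (`topic_count`) is a hypothetical placeholder, and an even spread of replicas across brokers is an assumption.

```python
import math

def replicas_per_broker(topic_count, partitions=15, replication_factor=3, brokers=5):
    """Approximate partition replicas hosted per broker, assuming an even spread."""
    total_replicas = topic_count * partitions * replication_factor
    return math.ceil(total_replicas / brokers)

# topic_count=100 is a made-up figure, not our real table count.
print(replicas_per_broker(topic_count=1))    # 9
print(replicas_per_broker(topic_count=100))  # 900
```

Since every Debezium-captured table becomes its own topic, the per-broker replica count grows linearly with the number of tables at these defaults.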

Our problem is mainly related to data volume. We are trying to transfer our existing tables into Kafka topics using Debezium. Many of these tables are huge, with over 50,000,000 rows.

So far we have tried many things, but the cluster fails every time with one or more of the following errors.

Error 1:

ERROR Uncaught exception in scheduled task 'isr-expiration' (kafka.utils.KafkaScheduler)

org.apache.zookeeper.KeeperException$SessionExpiredException: KeeperErrorCode = Session expired for /brokers/topics/__consumer_offsets/partitions/0/state

at org.apache.zookeeper.KeeperException.create(KeeperException.java:130)

at org.apache.zookeeper.KeeperException.create(KeeperException.java:54)..

Error 2:

] INFO [Partition xxx.public.driver_operation-14 broker=3] Cached zkVersion [21] not equal to that in zookeeper, skip updating ISR (kafka.cluster.Partition)

[2018-12-12 14:07:26,551] INFO [Partition xxx.public.hub-14 broker=3] Shrinking ISR from 1,3 to 3 (kafka.cluster.Partition)

[2018-12-12 14:07:26,556] INFO [Partition xxx.public.hub-14 broker=3] Cached zkVersion [3] not equal to that in zookeeper, skip updating ISR (kafka.cluster.Partition)

[2018-12-12 14:07:26,556] INFO [Partition xxx.public.field_data_12_2018-7 broker=3] Shrinking ISR from 1,3 to 3 (kafka.cluster.Partition)
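To quantify how often this happens, something like the following could count ISR shrink events per partition in a broker log. This is only a sketch: the regular expression is derived from the log entries shown above, and `count_isr_shrinks` is an illustrative helper, not part of any Kafka tooling.

```python
import re
from collections import Counter

# Matches entries such as:
# [Partition xxx.public.hub-14 broker=3] Shrinking ISR from 1,3 to 3 (kafka.cluster.Partition)
SHRINK_RE = re.compile(
    r"\[Partition (?P<partition>\S+) broker=(?P<broker>\d+)\] "
    r"Shrinking ISR from (?P<before>[\d,]+) to (?P<after>[\d,]+)"
)

def count_isr_shrinks(log_text):
    """Count 'Shrinking ISR' events per topic-partition in a chunk of broker log."""
    return Counter(m.group("partition") for m in SHRINK_RE.finditer(log_text))

# Sample text copied from the log excerpt above.
sample = (
    "[2018-12-12 14:07:26,551] INFO [Partition xxx.public.hub-14 broker=3] "
    "Shrinking ISR from 1,3 to 3 (kafka.cluster.Partition) "
    "[2018-12-12 14:07:26,556] INFO [Partition xxx.public.field_data_12_2018-7 broker=3] "
    "Shrinking ISR from 1,3 to 3 (kafka.cluster.Partition)"
)
print(count_isr_shrinks(sample))
```

Running this over a full `server.log` would show whether the shrinking is concentrated on a few partitions or spread across all of them.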

Error 3:

isolationLevel=READ_UNCOMMITTED, toForget=, metadata=(sessionId=888665879, epoch=INITIAL)) (kafka.server.ReplicaFetcherThread)

java.io.IOException: Connection to 3 was disconnected before the response was read

at org.apache.kafka.clients.NetworkClientUtils.sendAndReceive(NetworkClientUtils.java:97)

Some more problems:

- Frequent disconnections between brokers, which are probably the reason behind the nonstop shrinking and expanding of ISRs with no automatic recovery.

- Schema Registry requests time out. I don't understand how Schema Registry is even affected, since I don't see much load on that server. Am I missing something? Should I run multiple Schema Registry instances behind a load balancer for failover? The topic __schemas has just 28 messages in it. The exact error message is RestClientException: Register operation timed out. Error code: 50002

Sometimes the message transfer rate is over 100,000 messages per second, and sometimes it drops to 2,000 messages per second. Could message size cause this?
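To put the two rates in perspective, here is a back-of-the-envelope sketch of how long one 50,000,000-row table would take at each of them. It assumes one message per row and a steady rate, both of which are simplifications; `transfer_seconds` is just an illustrative helper.

```python
def transfer_seconds(rows, messages_per_second):
    """Seconds needed to publish `rows` messages at a steady rate."""
    return rows / messages_per_second

ROWS = 50_000_000  # size of one of our larger tables

for rate in (100_000, 2_000):
    seconds = transfer_seconds(ROWS, rate)
    print(f"{rate} msg/s -> {seconds:.0f} s ({seconds / 3600:.1f} h)")
# 100000 msg/s -> 500 s (0.1 h)
# 2000 msg/s -> 25000 s (6.9 h)
```

So the same table takes minutes at the high rate and most of a working day at the low one, which is why the drop matters so much to us.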

To address some of the above problems, we increased the number of brokers and raised zookeeper.session.timeout.ms to 30000, but I am not sure whether that actually solved our problem and, if it did, how.

I have a few questions:

- Is our cluster good enough to handle this much data?

- Is there anything obvious that we are missing?

- How can I load test my setup before moving to production?

- What could cause the session timeouts between the brokers and Schema Registry?

- What is the best way to handle the Schema Registry problem?

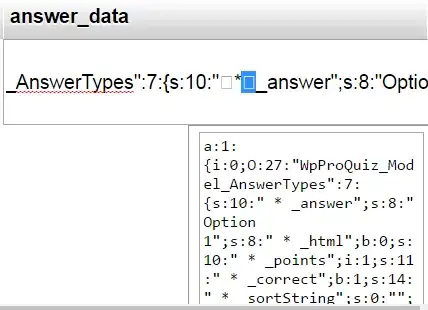

Network load on one of our brokers.

Feel free to ask for any more information.