In a self-educational project I measure memory bandwidth with the help of the following code (paraphrased here; the whole code follows at the end of the question):

    unsigned int doit(const std::vector<unsigned int> &mem){
        const size_t BLOCK_SIZE=16;
        size_t n = mem.size();
        unsigned int result=0;
        for(size_t i=0;i<n;i+=BLOCK_SIZE){
            result+=mem[i];
        }
        return result;
    }

    //... initialize mem, result and so on
    int NITER = 200;
    //... measure time of
    for(int i=0;i<NITER;i++)
        result+=doit(mem);

BLOCK_SIZE is chosen so that a whole 64-byte cache line is fetched per integer addition. My machine (an Intel Broadwell) needs about 0.35 nanoseconds per integer addition, so the code above could saturate a bandwidth as high as 182 GB/s (this value is just an upper bound and is probably quite off; what matters is the ratio of bandwidths for different sizes). The code is compiled with g++ and -O3.
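The 182 GB/s figure is simply the 64 bytes of one cache line divided by the 0.35 ns per addition. A minimal sketch of that arithmetic, where the function name `upper_bound_gb_per_s` is made up for illustration and is not part of the benchmark:

```cpp
#include <cassert>

// Hypothetical helper, not part of bandwidth.cpp.
// One byte per nanosecond is 1e9 bytes per second, i.e. 1 GB/s (decimal),
// so bytes-per-addition divided by ns-per-addition is already in GB/s.
double upper_bound_gb_per_s(double bytes_per_addition, double ns_per_addition) {
    assert(ns_per_addition > 0.0);
    return bytes_per_addition / ns_per_addition;
}
```

With the numbers above, `upper_bound_gb_per_s(64, 0.35)` comes out at roughly 182.9 GB/s.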

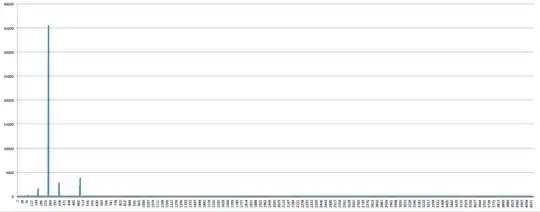

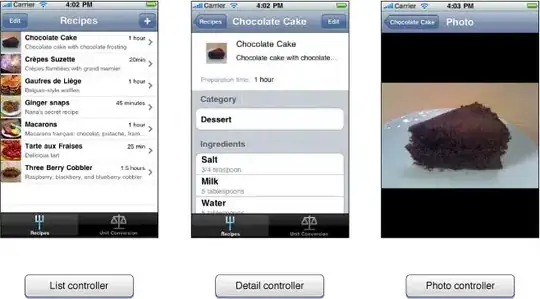

Varying the size of the vector, I can observe expected bandwidths for L1(*)-, L2-, L3-caches and the RAM-memory:

However, there is an effect I'm really struggling to explain: the collapse of the measured bandwidth of L1-cache for sizes around 2 kB, here in somewhat higher resolution:

I could reproduce the results on all machines I have access to (which have Intel-Broadwell and Intel-Haswell processors).

My question: What is the reason for the performance collapse for memory sizes around 2 kB?

(*) I hope I understand correctly that for the L1 cache not 64 bytes but only 4 bytes per addition are read/transferred (there is no faster cache whose cache line must be filled), so the plotted bandwidth for L1 is only an upper limit and not the bandwidth itself.
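If the assumption in this footnote holds, the plotted L1 value can be scaled down to the data actually read. A rough sketch, in which the helper name and its default arguments are illustrative assumptions (4-byte elements, 64-byte cache lines) rather than anything taken from the benchmark:

```cpp
#include <cassert>

// Hypothetical helper, not part of bandwidth.cpp.
// The plot credits every addition with a full cache line, but on an L1 hit
// only one element is loaded, so the data actually read is smaller by
// element_bytes / line_bytes (4 / 64 = 1/16 for the setup in the question).
double bytes_actually_read_gb_per_s(double plotted_gb_per_s,
                                    double element_bytes = 4.0,
                                    double line_bytes = 64.0) {
    assert(line_bytes > 0.0);
    return plotted_gb_per_s * element_bytes / line_bytes;
}
```

For example, a plotted value of 160 GB/s would correspond to 10 GB/s of data actually read under this assumption.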

Edit: When the step size in the inner for-loop is chosen to be

- 8 (instead of 16), the collapse happens at 1 kB

- 4 (instead of 16), the collapse happens at 0.5 kB

i.e. when the inner loop consists of about 31-35 steps/reads. That means the collapse is caused not by the memory size but by the number of steps in the inner loop.
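The step count behind this observation can be checked with a few lines. This is a sketch only: `inner_loop_steps` is a hypothetical helper, and it assumes 4-byte `unsigned int` elements, as on the x86-64 machines used here.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper, not part of bandwidth.cpp.
// Number of iterations of the inner loop
//   for(size_t i=0;i<n;i+=step)
// for a vector that occupies size_bytes bytes.
std::size_t inner_loop_steps(std::size_t size_bytes, std::size_t step) {
    assert(step > 0);
    const std::size_t kElemBytes = 4;              // assumed sizeof(unsigned int)
    const std::size_t n = size_bytes / kElemBytes; // elements in the vector
    return (n + step - 1) / step;                  // ceil(n / step)
}
```

All three observations land on 32 steps: 2 kB (2048 bytes) with step 16, 1 kB with step 8, and 0.5 kB with step 4.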

It can be explained with branch misses as shown in @user10605163's great answer.

Listing for reproducing the results

bandwidth.cpp:

    #include <vector>
    #include <chrono>
    #include <iostream>
    #include <algorithm>

    //returns minimal time needed for one execution in seconds:
    template<typename Fun>
    double timeit(Fun&& stmt, int repeat, int number)
    {
        std::vector<double> times;
        for(int i=0;i<repeat;i++){
            auto begin = std::chrono::high_resolution_clock::now();
            for(int i=0;i<number;i++){
                stmt();
            }
            auto end = std::chrono::high_resolution_clock::now();
            double time = std::chrono::duration_cast<std::chrono::nanoseconds>(end-begin).count()/1e9/number;
            times.push_back(time);
        }
        return *std::min_element(times.begin(), times.end());
    }

    const int NITER=200;
    const int NTRIES=5;
    const size_t BLOCK_SIZE=16;

    struct Worker{
        std::vector<unsigned int> &mem;
        size_t n;
        unsigned int result;
        void operator()(){
            for(size_t i=0;i<n;i+=BLOCK_SIZE){
                result+=mem[i];
            }
        }

        Worker(std::vector<unsigned int> &mem_):
            mem(mem_), n(mem.size()), result(1)
        {}
    };

    double PREVENT_OPTIMIZATION=0.0;

    double get_size_in_kB(int SIZE){
        return SIZE*sizeof(int)/(1024.0);
    }

    double get_speed_in_GB_per_sec(int SIZE){
        std::vector<unsigned int> vals(SIZE, 42);
        Worker worker(vals);
        double time=timeit(worker, NTRIES, NITER);
        PREVENT_OPTIMIZATION+=worker.result;
        return get_size_in_kB(SIZE)/(1024*1024)/time;
    }

    int main(){
        int size=BLOCK_SIZE*16;
        std::cout<<"size(kB),bandwidth(GB/s)\n";
        while(size<10e3){
            std::cout<<get_size_in_kB(size)<<","<<get_speed_in_GB_per_sec(size)<<"\n";
            size=(static_cast<int>(size+BLOCK_SIZE)/BLOCK_SIZE)*BLOCK_SIZE;
        }

        //ensure that nothing is optimized away:
        std::cerr<<"Sum: "<<PREVENT_OPTIMIZATION<<"\n";
    }

create_report.py:

    import sys
    import pandas as pd
    import matplotlib.pyplot as plt

    input_file=sys.argv[1]
    output_file=input_file[0:-3]+'png'
    data=pd.read_csv(input_file)

    labels=list(data)
    plt.plot(data[labels[0]], data[labels[1]], label="my laptop")
    plt.xlabel(labels[0])
    plt.ylabel(labels[1])
    plt.savefig(output_file)
    plt.close()

Building/running/creating report:

    >>> g++ -O3 -std=c++11 bandwidth.cpp -o bandwidth
    >>> ./bandwidth > report.txt
    >>> python create_report.py report.txt
    # image is in report.png