I'm rewriting a rendering pipeline in C++ and I'm having trouble getting it to work. It does work, but not in the way I expected. To keep it simple: I transform my 3D points into 2D points with a perspective projection matrix, built as shown in the code below.

My draw_edge function simply takes two points in world space and makes the calls that transform those points to screen coordinates:

    void Scene::draw_edge(const geometry::Point<float,4>& p1, const geometry::Point<float,4>& p2, gui::Color c) const
    {
        // Clip the segment against the camera's visible volume first.
        geometry::LineSegment ls(p1.ReduceToPoint3DAsPoint(), p2.ReduceToPoint3DAsPoint());
        std::optional<geometry::LineSegment> cls = camera.VisiblePart(ls);
        if (cls)
        {
            // Project both endpoints (matrix multiply, then divide by w).
            auto p1Persp = Perspective(cls->GetBegin().ExtendedPoint4DAsPoint());
            auto p2Persp = Perspective(cls->GetEnd().ExtendedPoint4DAsPoint());

            // Keep only x and y for the 2D line.
            om::Vector<2,float> p1Projection2D;
            om::Vector<2,float> p2Projection2D;
            p1Projection2D[0] = p1Persp.At(0);
            p1Projection2D[1] = p1Persp.At(1);
            p2Projection2D[0] = p2Persp.At(0);
            p2Projection2D[1] = p2Persp.At(1);

            gui->render_line(p1Projection2D, p2Projection2D, c);
        }
    }
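One thing worth double-checking here: draw_edge passes the projected x and y straight to render_line. If render_line expects pixel coordinates rather than values in [-1, 1], a viewport mapping is needed in between. I don't know which one it expects, so the following is only a minimal sketch under that assumption. `NdcToPixels` is a hypothetical helper, not part of the code above, and it assumes a top-left pixel origin with y growing downward.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper (not from the original code): maps normalized device
// coordinates in [-1, 1] to pixel coordinates of a width x height window.
// Assumption: pixel origin is the top-left corner and y grows downward.
std::array<float, 2> NdcToPixels(float ndcX, float ndcY, int width, int height)
{
    assert(width > 0 && height > 0);
    const float px = (ndcX + 1.0f) * 0.5f * static_cast<float>(width);
    const float py = (1.0f - ndcY) * 0.5f * static_cast<float>(height); // flip y
    return {px, py};
}
```

With an 800 x 600 window, the NDC origin (0, 0) lands at the window centre (400, 300) and (-1, 1) lands at the top-left pixel corner (0, 0).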

This function actually transforms my points: it applies the perspective matrix and then divides the coordinates by 'w'.

    geometry::Point<float,3> Scene::Perspective(const geometry::Point<float,4>& point) const
    {
        geometry::Transform perspectiveTransform = camera.GetPerspectiveProjection();
        geometry::Point<float,4> pointHomogenous = perspectiveTransform.TransformTo(point);

        // Perspective divide: x, y and z are each divided by w.
        om::Vector<3,float> normalized;
        for (int i = 0; i < 3; i++)
        {
            normalized[i] = pointHomogenous.At(i) / pointHomogenous.At(3);
        }
        return geometry::Point<float,3>(normalized);
    }
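The divide by 'w' above can be isolated into a small standalone function, which makes it easier to test on its own. This is a sketch using plain `std::array` instead of my `geometry` and `om` types. `PerspectiveDivide` and its `epsilon` parameter are hypothetical names, and the guard against w close to zero is an addition that the function above does not have.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <optional>

using Vec3 = std::array<float, 3>;
using Vec4 = std::array<float, 4>;

// Hypothetical standalone version of the divide step: returns x/w, y/w, z/w,
// or std::nullopt when |w| is too small to divide by safely.
std::optional<Vec3> PerspectiveDivide(const Vec4& clip, float epsilon = 1e-6f)
{
    assert(epsilon > 0.0f);
    const float w = clip[3];
    if (std::fabs(w) < epsilon)
    {
        return std::nullopt; // point lies (almost) in the plane w = 0
    }
    return Vec3{clip[0] / w, clip[1] / w, clip[2] / w};
}
```

Note that a negative w is not rejected here: the divide then flips the sign of x and y, which can be a useful thing to look for when the projected image appears mirrored or inverted.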

This function builds my perspective projection matrix:

    geometry::Transform Camera::GetPerspectiveProjection() const
    {
        float nearValue = position.Dist(frustum.nearPlane);
        float farValue = position.Dist(frustum.farPlane);
        float rightValue = nearValue / focaleDistance;
        float leftValue = -nearValue / focaleDistance;
        float topValue = (aspectRatio * nearValue) / focaleDistance;
        float bottomValue = -(aspectRatio * nearValue) / focaleDistance;

        auto perspective = geometry::Transform(nearValue, farValue, rightValue, leftValue, topValue, bottomValue);

        //auto perspectiveInverse = perspective.GetMatrix().Reverse();
        //perspective = geometry::Transform(perspectiveInverse);

        return perspective;
    }
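The frustum bounds above come from similar triangles, and they can be written as a small standalone helper for checking. This is only a sketch: `FrustumExtents` and `ExtentsFromFocal` are hypothetical names, and it assumes an image plane of half-width 1 at distance `focalDistance` from the camera, and that `aspectRatio` means height / width. If `aspectRatio` is actually width / height, the top and bottom values would need a division instead of a multiplication.

```cpp
#include <cassert>

// Hypothetical container for the four frustum bounds on the near plane.
struct FrustumExtents
{
    float left;
    float right;
    float bottom;
    float top;
};

// Mirrors the formulas in GetPerspectiveProjection.
// Assumptions: the image plane has half-width 1 at distance focalDistance,
// and aspectRatio = height / width.
FrustumExtents ExtentsFromFocal(float nearValue, float focalDistance, float aspectRatio)
{
    assert(nearValue > 0.0f && focalDistance > 0.0f && aspectRatio > 0.0f);
    const float halfWidth = nearValue / focalDistance;  // similar triangles
    const float halfHeight = aspectRatio * halfWidth;
    return {-halfWidth, halfWidth, -halfHeight, halfHeight};
}
```

For example, a near plane at distance 1, a focal distance of 2 and an aspect ratio of 0.5 give a near-plane rectangle spanning [-0.5, 0.5] horizontally and [-0.25, 0.25] vertically.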

As you can see, I have commented out the inversion. That is the point of this post, and I'll come back to it below.

Finally, the constructor that builds my perspective matrix:

    Transform(float near, float far, float right, float left, float top, float bottom)
    {
        transform[0][0] = (2*near)/(right - left);
        transform[0][1] = 0.0f;
        transform[0][2] = (right + left)/(right - left);
        transform[0][3] = 0.0f;

        transform[1][0] = 0.0f;
        transform[1][1] = (2*near)/(top - bottom);
        transform[1][2] = (top + bottom)/(top - bottom);
        transform[1][3] = 0.0f;

        transform[2][0] = 0.0f;
        transform[2][1] = 0.0f;
        transform[2][2] = -((far + near)/(far - near));
        transform[2][3] = -((2*near*far)/(far - near));

        transform[3][0] = 0.0f;
        transform[3][1] = 0.0f;
        transform[3][2] = -1.0f;
        transform[3][3] = 0.0f;
    }

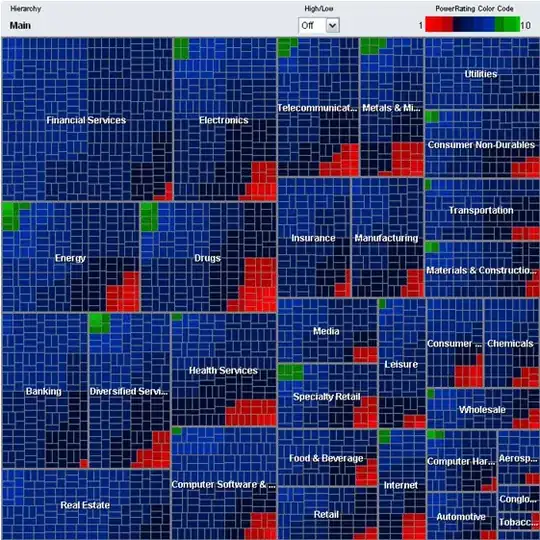
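The entries above have the same layout as the classic OpenGL `glFrustum` matrix. That matrix is meant to be multiplied with column vectors and assumes a camera looking down the negative z axis. Whether my `TransformTo` and my camera space follow those conventions is exactly the kind of thing a small standalone check can reveal. The sketch below uses plain `std::array` types, and `MakeFrustum`, `Mul` and `NdcDepth` are hypothetical names, not functions from my code.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Same entries as the Transform constructor, indexed as m[row][column].
Mat4 MakeFrustum(float n, float f, float r, float l, float t, float b)
{
    assert(n > 0.0f && f > n && r != l && t != b);
    Mat4 m{}; // all entries start at zero
    m[0][0] = (2 * n) / (r - l);
    m[0][2] = (r + l) / (r - l);
    m[1][1] = (2 * n) / (t - b);
    m[1][2] = (t + b) / (t - b);
    m[2][2] = -((f + n) / (f - n));
    m[2][3] = -((2 * n * f) / (f - n));
    m[3][2] = -1.0f;
    return m;
}

// Matrix times column vector (assumed convention).
Vec4 Mul(const Mat4& m, const Vec4& v)
{
    Vec4 out{};
    for (int row = 0; row < 4; ++row)
        for (int col = 0; col < 4; ++col)
            out[row] += m[row][col] * v[col];
    return out;
}

// Depth after the divide by w, for a point on the view axis at height z.
float NdcDepth(const Mat4& m, float z)
{
    const Vec4 clip = Mul(m, Vec4{0.0f, 0.0f, z, 1.0f});
    return clip[2] / clip[3];
}
```

With near = 1 and far = 3, a point at z = -1 maps to depth -1 and a point at z = -3 maps to depth +1, as expected for that convention. A point at z = +1 gets w = -1 and a depth of 5, so it falls outside the [-1, 1] range. Running the same check through the real `Transform` and `Perspective` functions would show which side of the camera they treat as visible.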

So, now that all the relevant code is shown, here is my problem. When I use the inverse of the perspective projection (by uncommenting the two lines), the result looks correct, as follows:

But if I leave the inversion commented out, the result is as follows:

So my problem is that I don't understand why I need to use the inverse matrix to get a result that looks correct. None of the documents I have read about perspective projection mention inverting the perspective projection matrix.

Thanks for reading, and don't hesitate to ask for more information.