I am having trouble connecting to an Azure MS SQL Server 2014 database from Apache Airflow 1.10.1 using pymssql. I want to use the MsSqlHook class provided by Airflow, so that I can conveniently create my connection in the Airflow UI, and then create a context manager for my connection using SQLAlchemy:

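    from contextlib import contextmanager

    from airflow.hooks.mssql_hook import MsSqlHook
    from sqlalchemy.orm import sessionmaker


    @contextmanager
    def mssql_session(dt_conn_id):
        # Build a SQLAlchemy engine from the Airflow connection `dt_conn_id`
        sqla_engine = MsSqlHook(mssql_conn_id=dt_conn_id).get_sqlalchemy_engine()
        session = sessionmaker(bind=sqla_engine)()
        try:
            yield session
        except Exception:
            # Undo the pending transaction, then re-raise the original error
            session.rollback()
            raise
        else:
            session.commit()
        finally:
            session.close()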

But when I do that, I get this error when I run a query:

    sqlalchemy.exc.InterfaceError: (pyodbc.InterfaceError) ('IM002', '[IM002] [unixODBC][Driver Manager]Data source name not found, and no default driver specified (0) (SQLDriverConnect)') (Background on this error at: http://sqlalche.me/e/rvf5)

It seems to come from pyodbc, whereas I want to use pymssql (and in MsSqlHook, the get_conn method does use pymssql!).

I searched the Airflow source code for the cause. I noticed that the get_uri method of the DbApiHook class (which MsSqlHook inherits from) builds the connection string passed to SQLAlchemy like this:

    '{conn.conn_type}://{login}{host}/{conn.schema}'

But conn.conn_type is simply 'mssql', whereas we need to specify the DBAPI as well, as described here: https://docs.sqlalchemy.org/en/latest/core/engines.html#microsoft-sql-server (for example: 'mssql+pymssql://scott:tiger@hostname:port/dbname').
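To make the difference concrete, here is a minimal sketch that mirrors the template above but inserts an explicit DBAPI driver after the dialect name. The function `build_sqlalchemy_uri` is hypothetical (it is not part of Airflow or SQLAlchemy), and, as an assumption beyond the quoted template, it percent-encodes the login and password so that characters such as `@` cannot break the URL.

```python
from typing import Optional
from urllib.parse import quote


def build_sqlalchemy_uri(dialect: str, driver: str, host: str, schema: str,
                         login: Optional[str] = None,
                         password: Optional[str] = None,
                         port: Optional[int] = None) -> str:
    """Hypothetical helper: build 'dialect+driver://login:password@host:port/schema'.

    Same shape as '{conn.conn_type}://{login}{host}/{conn.schema}', except that
    the driver (e.g. 'pymssql') is named explicitly after the dialect.
    """
    credentials = ""
    if login:
        # Percent-encode so characters like '@' or ':' cannot break the URL
        credentials = quote(login, safe="")
        if password:
            credentials += ":" + quote(password, safe="")
        credentials += "@"
    netloc = host if port is None else "{}:{}".format(host, port)
    return "{}+{}://{}{}/{}".format(dialect, driver, credentials, netloc, schema)


print(build_sqlalchemy_uri("mssql", "pymssql", "hostname", "dbname",
                           login="scott", password="tiger", port=1433))
# prints mssql+pymssql://scott:tiger@hostname:1433/dbname
```

With only `mssql://` as the scheme, SQLAlchemy picks its default DBAPI for that dialect, which is pyodbc; that matches the pyodbc error above.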

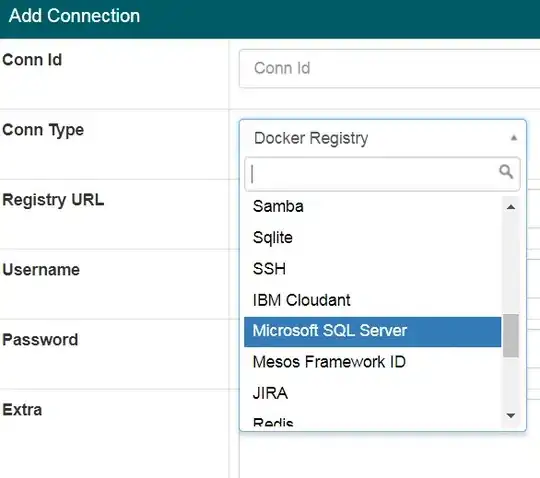

So, by default, I think it uses pyodbc. But how can I set properly the conn_type of the connection to 'mssql+pymssql' instead of 'mssql' ? In the Airflow IU, you can simply select SQL server in a dropdown list, but not set as you want :

To work around the issue, I override the get_uri method of DbApiHook in a new class that inherits from MsSqlHook and builds my own connection string, but it's not clean at all...
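A possibly less invasive variant of that workaround is to keep the string that `DbApiHook.get_uri` already builds and only rewrite its scheme. The sketch below assumes that the parent URI always has the form `dialect://...`; the helper `with_dbapi_driver` and the subclass name `PyMsSqlHook` in the comment are hypothetical, not part of Airflow.

```python
def with_dbapi_driver(uri: str, driver: str) -> str:
    """Hypothetical helper: turn 'mssql://...' into 'mssql+<driver>://...'.

    Assumes `uri` has the form 'dialect[+driver]://rest'; any driver that is
    already present is replaced, and everything after '://' is left untouched.
    """
    scheme, sep, rest = uri.partition("://")
    if not sep:
        raise ValueError("not a URL with a scheme: {!r}".format(uri))
    dialect = scheme.split("+", 1)[0]  # drop an existing '+driver', if any
    return "{}+{}://{}".format(dialect, driver, rest)


# Hypothetical use inside an MsSqlHook subclass (Airflow 1.10.x, Python 3;
# not executed here):
#
# class PyMsSqlHook(MsSqlHook):
#     def get_uri(self):
#         return with_dbapi_driver(super().get_uri(), "pymssql")

print(with_dbapi_driver("mssql://scott:tiger@hostname:1433/dbname", "pymssql"))
# prints mssql+pymssql://scott:tiger@hostname:1433/dbname
```

Because `DbApiHook.get_sqlalchemy_engine` builds its engine from `self.get_uri()`, overriding only `get_uri` in this way should be enough for the context manager to pick up the pymssql driver, while login, host, port and schema still come from the connection defined in the UI.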

Thanks for any help.