The Woodbury matrix identity states that the inverse of a rank-k correction of some matrix can be computed by doing a rank-k correction to the inverse of the original matrix.

If A is a p × p full rank matrix that is rank corrected by UCV where U is p × k, C is k × k, and V is k × p, then the Woodbury identity is:

(A + UCV)^{-1} = A^{-1} - A^{-1} U (C^{-1} + V A^{-1} U)^{-1} V A^{-1}

The key is that rather than inverting a p × p matrix, you invert a k × k matrix. In many applications, we can assume k < p. Inverting A can be fast in some cases, for example if A is a diagonal matrix.

I've implemented this here, assuming A is diagonal and that C is the identity:

def woodbury(A, U, V, k):

A_inv = np.diag(1./np.diag(A)) # Fast matrix inversion of a diagonal.

B_inv = np.linalg.inv(np.eye(k) + V @ A_inv @ U)

return A_inv - (A_inv @ U @ B_inv @ V @ A_inv)

and sanity checked my implementation by verifying that

n = 100000

p = 1000

k = 100

A = np.diag(np.random.randn(p))

U = np.random.randn(p, k)

V = U.T

M = U @ V + A

M_inv = woodbury(A, U, V, k)

assert np.allclose(M @ M_inv, np.eye(p))

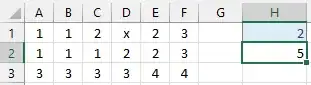

But when I actually compare this to numpy.linalg.inv, my Woodbury function is not nearly as fast as I would have expected. I would expect the time to invert to grow cubically with th dimensionality p. But my results are:

My question is: why is the Woodbury method so slow? Is it simply because I'm comparing Python code to LAPACK or is there something else going on?

EDIT: My experiments with einsum() vs. broadcasting

I implemented three versions: (1) using einsum and einsum_path, (2) using broadcasting per the accepted answer, and (3) using both. Here is my implementation using einsum, optimized using einsum_path:

def woodbury_einsum(A, U, V, k):

A_inv = np.diag(1./np.diag(A))

tmp = np.einsum('ab,bc,cd->ad',

V, A_inv, U,

optimize=['einsum_path', (1, 2), (0, 1)])

B_inv = np.linalg.inv(np.eye(k) + tmp)

tmp = np.einsum('ab,bc,cd,de,ef->af',

A_inv, U, B_inv, V, A_inv,

optimize=['einsum_path', (0, 1), (0, 1), (0, 2), (0, 1)])

return A_inv - tmp

And results are here:

So avoiding the computational cost of matrix multiplication with a diagonal matrix is faster than optimizing the ordering of matrix multiplication and memory footprint using einsum().