How do you calculate UV coordinates for points on a plane?

I have a polygon (3, 4, or more points) lying on a plane; that is to say, all of its points are on one plane. But that plane can be at any angle in space.
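Because every vertex lies on one plane, one common first step is to flatten the polygon into 2D coordinates measured within that plane. The question does not prescribe this; it is an assumption. Below is a minimal sketch using plain 3-tuples. The normal comes from Newell's method, the first in-plane axis runs along the first edge, and the second axis is the normal crossed with the first. All function names are hypothetical.

```python
import math

def sub(p, q):
    return (p[0] - q[0], p[1] - q[1], p[2] - q[2])

def dot(p, q):
    return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]

def cross(p, q):
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])

def normalize(p):
    length = math.sqrt(dot(p, p))
    return (p[0] / length, p[1] / length, p[2] / length)

def newell_normal(points):
    # Newell's method: a robust polygon normal, even if some vertices are collinear.
    # A counterclockwise polygon (seen from the tip of the normal) gives a positive normal.
    nx = ny = nz = 0.0
    for i, (x1, y1, z1) in enumerate(points):
        x2, y2, z2 = points[(i + 1) % len(points)]
        nx += (y1 - y2) * (z1 + z2)
        ny += (z1 - z2) * (x1 + x2)
        nz += (x1 - x2) * (y1 + y2)
    return normalize((nx, ny, nz))

def plane_coords(points):
    """Return 2D (s, t) coordinates of each vertex within the polygon's plane,
    with the origin at points[0] and the s axis along the first edge."""
    origin = points[0]
    n = newell_normal(points)
    s_axis = normalize(sub(points[1], origin))
    # Unit length already: n and s_axis are perpendicular unit vectors
    t_axis = cross(n, s_axis)
    return [(dot(sub(p, origin), s_axis), dot(sub(p, origin), t_axis))
            for p in points]
```

For a unit-by-√2 rectangle tilted 45° out of the xy plane, `plane_coords([(0, 0, 0), (1, 0, 0), (1, 1, 1), (0, 1, 1)])` gives approximately `[(0, 0), (1, 0), (1, 1.414), (0, 1.414)]`. The tilt disappears, but true lengths are preserved.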

One side of this polygon (two points) is to be mapped to two corresponding 2D points in a texture, and I know these two points in advance. I also know the x and y scale for the texture, and that no points fall outside the texture extent, so there are no other 'edge cases' to handle.

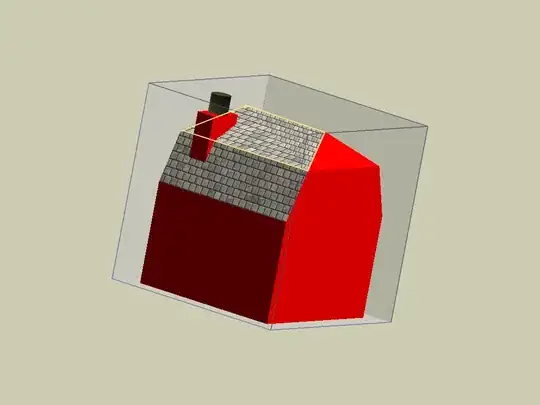

Here's an image where the up-most textured quad is distorted:

I outlined the bad quad in yellow. Imagine that I know the UV coordinates of the two bottommost corners of that quad, and want to calculate the correct UV coordinates of the other two corners...

How do you calculate the UV coordinates of all the other points in the plane relative to these two points?

Imagine my texture is a piece of paper in real life, and I want to texture your (flat) car door. I place two dots on my paper, which I line up with two dots on your car door. How do I calculate which point on the paper lies over each of the other locations on the car door?
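In the paper analogy, two pinned dots fix the whole sheet, as long as the paper is neither stretched nor flipped. That makes the map a similarity transform: rotation, uniform scale, and translation. Treating 2D points as complex numbers, one division gives the combined rotation and scale. The minimal sketch below rests on three assumptions: the vertices are already expressed as 2D coordinates within their plane, the texture's x and y scales are equal, and `map_two_points` is a hypothetical name.

```python
def map_two_points(pts2d, ia, ib, uv_a, uv_b):
    """Map in-plane 2D points to UV using the unique similarity transform
    (rotation + uniform scale + translation, no mirroring) that sends
    pts2d[ia] -> uv_a and pts2d[ib] -> uv_b."""
    za, zb = complex(*pts2d[ia]), complex(*pts2d[ib])
    wa, wb = complex(*uv_a), complex(*uv_b)
    # Multiplying by k rotates by arg(k) and scales by |k| in one step
    k = (wb - wa) / (zb - za)
    result = []
    for p in pts2d:
        w = wa + k * (complex(*p) - za)
        result.append((w.real, w.imag))
    return result
```

For example, `map_two_points([(0, 0), (2, 0), (2, 2), (0, 2)], 0, 1, (0.5, 0.5), (0.5, 1.0))` returns `[(0.5, 0.5), (0.5, 1.0), (0.0, 1.0), (0.0, 0.5)]`. The transform never mirrors, so points on either side of the pinned edge land consistently without any angle sign to resolve.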

Can you use trilateration? What would the pseudo-code look like for two known points in 2D space?
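Trilateration does work here, with one caveat. Given a vertex's 3D distances to A and B, rescale them into UV units using the known edge. The vertex then lies where the two circles around the known UV points intersect. Two circles generally meet in two points, mirror images across the line through the centers, so the caller must still choose one, for example by which side of AB the vertex is on. The sketch below is a hedged illustration; both function names are hypothetical.

```python
import math

def circle_intersections(c0, r0, c1, r1):
    """Return the (up to two) points at distance r0 from c0 and r1 from c1."""
    dx, dy = c1[0] - c0[0], c1[1] - c0[1]
    d = math.hypot(dx, dy)
    if d == 0 or d > r0 + r1 or d < abs(r0 - r1):
        return []  # concentric, too far apart, or one circle inside the other
    # Distance from c0, along the line c0 -> c1, to the chord through both solutions
    along = (r0 * r0 - r1 * r1 + d * d) / (2 * d)
    h = math.sqrt(max(0.0, r0 * r0 - along * along))
    mx, my = c0[0] + along * dx / d, c0[1] + along * dy / d
    # First solution is to the left of c0 -> c1, second is its mirror image
    return [(mx - h * dy / d, my + h * dx / d),
            (mx + h * dy / d, my - h * dx / d)]

def trilaterate_uv(dist_pa, dist_pb, dist_ab, uv_a, uv_b):
    """Candidate UVs for a vertex P, given its 3D distances to A and B."""
    # Convert 3D distances to UV units using the known edge A-B
    k = math.dist(uv_a, uv_b) / dist_ab
    return circle_intersections(uv_a, dist_pa * k, uv_b, dist_pb * k)
```

`math.dist` requires Python 3.8 or later.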

Success using brainjam's code:

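```python
import math

def set_texture(self, texture, a_ofs, a, b):
    """Assign UVs to every vertex in self.m, given that the vertices
    self.m[a_ofs] and self.m[a_ofs + 1] map to the UV points a and b."""
    self.texture = texture
    self.colour = (1, 1, 1)
    self.texture_coords = tx = []
    A, B = self.m[a_ofs:a_ofs+2]
    for P in self.m:
        if P == A:
            tx.append(a)
        elif P == B:
            tx.append(b)
        else:
            # The ratio |AP| / |AB| in 3D is the same ratio in UV space
            scale = P.distance(A) / B.distance(A)
            # Cosine of the angle between AP and AB, clamped because rounding
            # can push it slightly outside [-1, 1] and make math.acos raise
            cos_theta = (P - A).dot((B - A) / (P.distance(A) * B.distance(A)))
            # acos returns an unsigned angle in [0, pi], so this assumes every other
            # vertex lies on the same side of AB (e.g. a convex polygon)
            theta = math.acos(max(-1.0, min(1.0, cos_theta)))
            # Rotate the UV edge vector (b - a) by theta, scale it, offset from a
            x, y = b[0] - a[0], b[1] - a[1]
            x, y = (x * math.cos(theta) - y * math.sin(theta),
                    x * math.sin(theta) + y * math.cos(theta))
            tx.append((a[0] + x * scale, a[1] + y * scale))
```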
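`math.acos` only returns angles in [0, π], so the code above rotates every vertex the same way from the edge AB. A vertex on the other side of AB would get a mirrored UV. One way around this, sketched here under stated assumptions, is a signed angle from `math.atan2`, measured about the plane's normal `n`. The caller must supply `n`, for example as the cross product of two polygon edges; its direction decides which way counts as counterclockwise in UV space. This standalone version uses plain 3-tuples instead of the poster's vector class, and `signed_uv` and its helpers are hypothetical names.

```python
import math

def _sub(p, q):
    return tuple(pi - qi for pi, qi in zip(p, q))

def _dot(p, q):
    return sum(pi * qi for pi, qi in zip(p, q))

def _cross(p, q):
    return (p[1] * q[2] - p[2] * q[1],
            p[2] * q[0] - p[0] * q[2],
            p[0] * q[1] - p[1] * q[0])

def signed_uv(P, A, B, n, a, b):
    """UV of P: rotate (b - a) by the signed angle from AB to AP about n, then scale."""
    ab, ap = _sub(B, A), _sub(P, A)
    scale = math.sqrt(_dot(ap, ap)) / math.sqrt(_dot(ab, ab))
    # n . (AB x AP) is |AB||AP| sin(theta) once n is unit length, and AB . AP is
    # |AB||AP| cos(theta), so atan2 yields theta in (-pi, pi] with its sign intact
    n_len = math.sqrt(_dot(n, n))
    theta = math.atan2(_dot(n, _cross(ab, ap)) / n_len, _dot(ab, ap))
    x, y = b[0] - a[0], b[1] - a[1]
    c, s = math.cos(theta), math.sin(theta)
    return (a[0] + scale * (x * c - y * s),
            a[1] + scale * (x * s + y * c))
```

With A at the origin, B = (1, 0, 0), n = (0, 0, 1) and UVs a = (0, 0), b = (1, 0), the points (0, 1, 0) and (0, -1, 0) map to roughly (0, 1) and (0, -1). The acos version would send both to (0, 1). A and B themselves fall out as a and b, so the special cases are not needed.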