Consider the following two functions, ComputeA and ComputeB:

    using System;
    using System.Diagnostics;

    namespace BenchmarkLoop
    {
        class Program
        {
            private static double[] _dataRow;
            private static double[] _dataCol;

            public static double ComputeA(double[] col, double[] row)
            {
                var rIdx = 0;
                var value = 0.0;

                for (var i = 0; i < col.Length; ++i)
                {
                    for (var cIdx = 0; cIdx < col.Length; ++cIdx, ++rIdx)
                        value += col[cIdx] * row[rIdx];
                }

                return value;
            }

            public static double ComputeB(double[] col, double[] row)
            {
                var rIdx = 0;
                var value = 0.0;

                for (var i = 0; i < col.Length; ++i)
                {
                    value = 0.0;
                    for (var cIdx = 0; cIdx < col.Length; ++cIdx, ++rIdx)
                        value += col[cIdx] * row[rIdx];
                }

                return value;
            }

            public static double ComputeC(double[] col, double[] row)
            {
                var rIdx = 0;
                var value = 0.0;

                for (var i = 0; i < col.Length; ++i)
                {
                    var tmp = 0.0;
                    for (var cIdx = 0; cIdx < col.Length; ++cIdx, ++rIdx)
                        tmp += col[cIdx] * row[rIdx];
                    value += tmp;
                }

                return value;
            }

            static void Main(string[] args)
            {
                _dataRow = new double[2500];
                _dataCol = new double[50];

                var random = new Random();
                for (var i = 0; i < _dataRow.Length; i++)
                    _dataRow[i] = random.NextDouble();
                for (var i = 0; i < _dataCol.Length; i++)
                    _dataCol[i] = random.NextDouble();

                var nRuns = 1000000;

                var stopwatch = new Stopwatch();
                stopwatch.Start();
                for (var i = 0; i < nRuns; i++)
                    ComputeA(_dataCol, _dataRow);
                stopwatch.Stop();
                var t0 = stopwatch.ElapsedMilliseconds;

                stopwatch.Reset();
                stopwatch.Start();
                for (int i = 0; i < nRuns; i++)
                    ComputeC(_dataCol, _dataRow);
                stopwatch.Stop();
                var t1 = stopwatch.ElapsedMilliseconds;

                Console.WriteLine($"Time ComputeA: {t0} - Time ComputeC: {t1}");
                Console.ReadKey();
            }
        }
    }

They differ only in the "reset" of the variable value before each run of the inner loop. I've run several different kinds of benchmarks, all with "Optimized code" enabled, on 32-bit and 64-bit, and with different sizes of the data arrays. ComputeB is always around 25% faster. I can also reproduce these results with BenchmarkDotNet, but I cannot explain them. Any ideas?

I also checked the resulting assembly code with Intel VTune Amplifier 2019. For both functions the JIT result is exactly the same, plus the extra line to reset value:

So, at the assembly level, there is no magic going on that could make the code faster. Is there any other possible explanation for this effect? And how can I verify it?


And here are the results with BenchmarkDotNet (the parameter N is the size of _dataCol; _dataRow is always of size N^2):

And the results for comparing ComputeA and ComputeC:

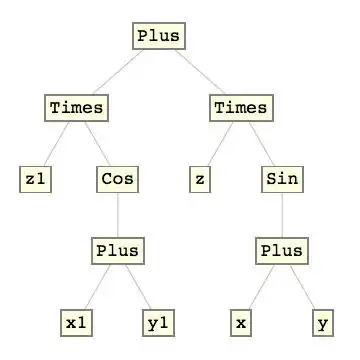

JIT-Assembly for ComputeA (left) and ComputeC (right):

The diff is quite small: in block 2, the variable tmp is set to 0 (stored in register xmm1), and in block 6, tmp is added to the result value that is returned. So, overall, no surprise. Just the runtime is magic ;)
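For anyone who wants to reproduce the BenchmarkDotNet comparison mentioned above, here is a minimal sketch of how it could be set up. It is not the exact benchmark behind the posted results. The class names `ComputeBenchmarks` and `BenchmarkEntry`, the concrete values of N, and the fixed random seed are assumptions chosen for illustration. The three methods are copied from the listing above so that the file stands on its own in a separate project that references the BenchmarkDotNet package.

```csharp
using System;
using BenchmarkDotNet.Attributes;
using BenchmarkDotNet.Running;

namespace BenchmarkLoop
{
    // Hypothetical benchmark class; name and parameter values are assumptions.
    public class ComputeBenchmarks
    {
        private double[] _dataRow;
        private double[] _dataCol;

        // N is the size of _dataCol; _dataRow has N * N elements.
        [Params(50, 100, 200)] // assumed sizes, not the ones from the original runs
        public int N;

        [GlobalSetup]
        public void Setup()
        {
            var random = new Random(42); // fixed seed (assumption) for repeatable input
            _dataCol = new double[N];
            _dataRow = new double[N * N];
            for (var i = 0; i < _dataCol.Length; i++)
                _dataCol[i] = random.NextDouble();
            for (var i = 0; i < _dataRow.Length; i++)
                _dataRow[i] = random.NextDouble();
        }

        // Returning the result lets BenchmarkDotNet consume it,
        // so the call cannot be discarded as dead code.
        [Benchmark(Baseline = true)]
        public double A() => ComputeA(_dataCol, _dataRow);

        [Benchmark]
        public double B() => ComputeB(_dataCol, _dataRow);

        [Benchmark]
        public double C() => ComputeC(_dataCol, _dataRow);

        private static double ComputeA(double[] col, double[] row)
        {
            var rIdx = 0;
            var value = 0.0;
            for (var i = 0; i < col.Length; ++i)
                for (var cIdx = 0; cIdx < col.Length; ++cIdx, ++rIdx)
                    value += col[cIdx] * row[rIdx];
            return value;
        }

        private static double ComputeB(double[] col, double[] row)
        {
            var rIdx = 0;
            var value = 0.0;
            for (var i = 0; i < col.Length; ++i)
            {
                value = 0.0; // reset before each run of the inner loop
                for (var cIdx = 0; cIdx < col.Length; ++cIdx, ++rIdx)
                    value += col[cIdx] * row[rIdx];
            }
            return value;
        }

        private static double ComputeC(double[] col, double[] row)
        {
            var rIdx = 0;
            var value = 0.0;
            for (var i = 0; i < col.Length; ++i)
            {
                var tmp = 0.0; // per-row accumulator
                for (var cIdx = 0; cIdx < col.Length; ++cIdx, ++rIdx)
                    tmp += col[cIdx] * row[rIdx];
                value += tmp;
            }
            return value;
        }
    }

    // Hypothetical entry point; build in Release configuration before running.
    public static class BenchmarkEntry
    {
        public static void Main(string[] args) =>
            BenchmarkRunner.Run<ComputeBenchmarks>();
    }
}
```

Marking `A` as the baseline makes the summary table report `B` and `C` as ratios relative to it, which is the comparison the question is about.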