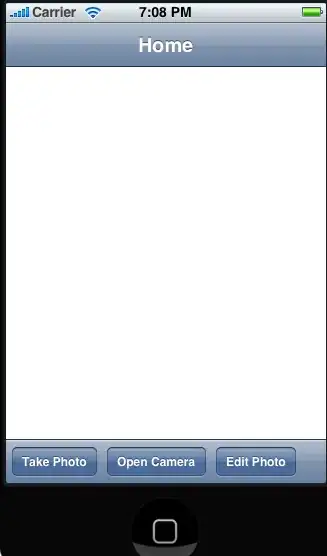

I want to use the JohnSnowLabs pretrained spell check module in my Zeppelin notebook. As mentioned here I have added com.johnsnowlabs.nlp:spark-nlp_2.11:1.7.3 to the Zeppelin dependency section as shown below:

However, when I try to run the following simple code:

    import com.johnsnowlabs.nlp.DocumentAssembler
    import com.johnsnowlabs.nlp.annotator.NorvigSweetingModel
    import com.johnsnowlabs.nlp.annotators.Tokenizer
    import org.apache.spark.ml.Pipeline
    import com.johnsnowlabs.nlp.Finisher

    val df = Seq("tiolt cde", "eefg efa efb").toDF("names")

    val nlpPipeline = new Pipeline().setStages(Array(
      new DocumentAssembler().setInputCol("names").setOutputCol("document"),
      new Tokenizer().setInputCols("document").setOutputCol("tokens"),
      NorvigSweetingModel.pretrained().setInputCols("tokens").setOutputCol("corrected"),
      new Finisher().setInputCols("corrected")
    ))

    df.transform(df => nlpPipeline.fit(df).transform(df)).show(false)

it fails with the following error:

    org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 4 times, most recent failure: Lost task 0.3 in stage 0.0 (TID 3, xxx.xxx.xxx.xxx, executor 0): java.io.FileNotFoundException: File file:/root/cache_pretrained/spell_fast_en_1.6.2_2_1534781328404/metadata/part-00000 does not exist
        at org.apache.hadoop.fs.RawLocalFileSystem.deprecatedGetFileStatus(RawLocalFileSystem.java:611)
        at org.apache.hadoop.fs.RawLocalFileSystem.getFileLinkStatusInternal(RawLocalFileSystem.java:824)
        at org.apache.hadoop.fs.RawLocalFileSystem.getFileStatus(RawLocalFileSystem.java:601)
        at org.apache.hadoop.fs.FilterFileSystem.getFileStatus(FilterFileSystem.java:421)
        at org.apache.hadoop.fs.ChecksumFileSystem$ChecksumFSInputChecker.<init>(ChecksumFileSystem.java:142)
        at org.apache.hadoop.fs.ChecksumFileSystem.open(ChecksumFileSystem.java:346)
        at org.apache.hadoop.fs.FileSystem.open(FileSystem.java:769)
        at org.apache.hadoop.mapred.LineRecordReader.<init>(LineRecordReader.java:109)
        at org.apache.hadoop.mapred.TextInputFormat.getRecordReader(TextInputFormat.java:67)
        at org.apache.spark.rdd.HadoopRDD$$anon$1.liftedTree1$1(HadoopRDD.scala:257)
        at org.apache.spark.rdd.HadoopRDD$$anon$1.<init>(HadoopRDD.scala:256)
        at org.apache.spark.rdd.HadoopRDD.compute(HadoopRDD.scala:214)
        at org.apache.spark.rdd.HadoopRDD.compute(HadoopRDD.scala:94)
        at org.apache.spark.rdd.RDD.computeOrReadCheckpoint(RDD.scala:324)
        ...

How can I use this JohnSnowLabs pretrained spell-check model in Zeppelin? The same code works when run directly in spark-shell.
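
In case it helps narrow things down: the exception reports a local `file:` path and was raised by a task on executor 0, so one thing worth checking is on which hosts that cache directory actually exists. The snippet below is only a diagnostic sketch, not a fix. It assumes it is run in the same Zeppelin Spark interpreter (or spark-shell) session, the `modelDir` value is copied from the error message, and the partition count of 8 is an arbitrary choice, so tasks are not guaranteed to land on every executor.

```scala
import java.net.InetAddress
import java.nio.file.{Files, Paths}
import org.apache.spark.sql.SparkSession

// Directory taken from the FileNotFoundException in the stack trace.
val modelDir = "/root/cache_pretrained/spell_fast_en_1.6.2_2_1534781328404"

// Reuse the Spark session that Zeppelin or spark-shell has already created.
val sparkContext = SparkSession.builder().getOrCreate().sparkContext

// Check the driver's local filesystem.
println(s"driver: ${Files.exists(Paths.get(modelDir))}")

// Check the local filesystem of the hosts that run the tasks.
// 8 partitions is an arbitrary number chosen to spread tasks around.
val perHost = sparkContext
  .parallelize(1 to 8, 8)
  .map(_ => (InetAddress.getLocalHost.getHostName, Files.exists(Paths.get(modelDir))))
  .distinct()
  .collect()

perHost.foreach { case (host, exists) => println(s"$host: $exists") }
```

If the directory turns out to exist on the driver but not on the worker hosts, that would at least be consistent with the error, although I have not confirmed that this is the cause.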