I have an SDL2 app with an OpenGL window, and it is well behaved: when it runs, the app is synchronized with my 60Hz display, and I see 12% CPU usage for the app.

So far so good. But when I add 3D picking by reading a single (!) depth value from the depth buffer (after drawing), the following happens:

- FPS still at 60

- CPU usage for the main thread goes to 100%

If I don't do the glReadPixels, the CPU use drops back to 12% again. Why does reading a single value from the depth buffer cause the CPU to burn all cycles?

My window is created with:

    SDL_GL_SetAttribute( SDL_GL_CONTEXT_MAJOR_VERSION, 3 );
    SDL_GL_SetAttribute( SDL_GL_CONTEXT_MINOR_VERSION, 2 );
    SDL_GL_SetAttribute( SDL_GL_CONTEXT_PROFILE_MASK, SDL_GL_CONTEXT_PROFILE_CORE );
    SDL_GL_SetAttribute( SDL_GL_DOUBLEBUFFER, 1 );
    SDL_GL_SetAttribute( SDL_GL_MULTISAMPLEBUFFERS, use_aa ? 1 : 0 );
    SDL_GL_SetAttribute( SDL_GL_MULTISAMPLESAMPLES, use_aa ? 4 : 0 );
    SDL_GL_SetAttribute( SDL_GL_FRAMEBUFFER_SRGB_CAPABLE, 1 );
    SDL_GL_SetAttribute( SDL_GL_DEPTH_SIZE, 24 );
    window = SDL_CreateWindow
    (
        "Fragger",
        SDL_WINDOWPOS_UNDEFINED,
        SDL_WINDOWPOS_UNDEFINED,
        fbw, fbh,
        SDL_WINDOW_OPENGL | SDL_WINDOW_RESIZABLE | SDL_WINDOW_ALLOW_HIGHDPI
    );

My drawing is concluded with:

    SDL_GL_SwapWindow( window );

My depth read is performed with:

    float depth;
    glReadPixels( scrx, scry, 1, 1, GL_DEPTH_COMPONENT, GL_FLOAT, &depth );
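
For context on how such a depth value feeds into picking: the value returned by glReadPixels is a non-linear window-space depth in [0, 1], not a distance. The sketch below shows one common way to convert it to an eye-space distance, assuming a standard perspective projection and the default glDepthRange(0, 1). The function name `depth_to_eye_distance` and the parameters `zn` and `zf` (near and far plane distances) are hypothetical and are not taken from the app described here.

```c
#include <assert.h>

/* Hypothetical helper, not part of the app described in this question.
 * Converts a window-space depth d in [0, 1] into a positive eye-space
 * distance, assuming a standard perspective projection with near plane zn,
 * far plane zf, and the default depth range of [0, 1]. */
double depth_to_eye_distance( float d, double zn, double zf )
{
    assert( zn > 0.0 && zf > zn );

    /* Map window depth [0, 1] to normalized device depth [-1, 1]. */
    const double z_ndc = 2.0 * (double)d - 1.0;

    /* Invert the depth mapping of the perspective projection. */
    return ( 2.0 * zn * zf ) / ( zf + zn - z_ndc * ( zf - zn ) );
}
```

With zn = 0.1 and zf = 100, a depth of 0 maps to the near plane and a depth of 1 to the far plane, while a depth of 0.5 lies only about 0.2 units from the eye, which shows how strongly the depth buffer is weighted toward the near plane.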

My display sync is configured using:

    int rv = SDL_GL_SetSwapInterval( -1 );
    if ( rv < 0 )
    {
        LOGI( "Late swap tearing not available. Using hard v-sync with display." );
        rv = SDL_GL_SetSwapInterval( 1 );
        if ( rv < 0 ) LOGE( "SDL_GL_SetSwapInterval() failed." );
    }
    else
    {
        LOGI( "Can use late vsync swap." );
    }

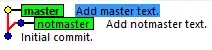

Investigations with 'perf' shows that the cycles are burnt up by nVidia's driver, doing relentless system calls, one of which is sys_clock_gettime() as can be seen below:

I've tried some variations by reading from GL_BACK or GL_FRONT, with the same result. I also tried reading just before and just after the window swap, but the CPU usage is always at 100%.

- Platform: Ubuntu 18.04.1

- SDL: version 2.0.8

- CPU: Intel Haswell

- GPU: nVidia GTX750Ti

- GL_VERSION: 3.2.0 NVIDIA 390.87

UPDATE

On Intel HD Graphics, the CPU does not spin. The glReadPixels call is still slow, but the CPU has a low duty cycle (1% or so), compared to a fully loaded CPU (100%) with the nVidia drivers.

I also tried asynchronous pixel reads via PBOs (Pixel Buffer Objects), but that works only for RGBA values, never for DEPTH values.