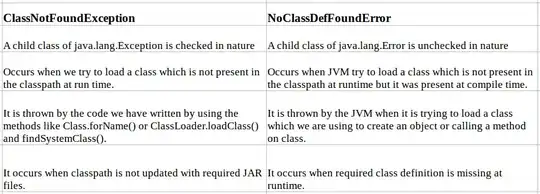

I have the following method for rotating images (Python):

```python
import cv2
import numpy as np

def rotateImage(image, angle):
    row, col = image.shape[0:2]
    center = tuple(np.array([row, col]) / 2)
    rot_mat = cv2.getRotationMatrix2D(center, angle, 1.0)
    new_image = cv2.warpAffine(image, rot_mat, (col, row))
    return new_image
```

This is the picture OpenCV returns for a 15-degree rotation:

This is the image if I rotate it around the center in Photoshop:

These are the two rotated images superimposed:

Obviously there is a difference. I'm pretty sure Photoshop did it correctly (or rather, I did it correctly in Photoshop). What am I missing?
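One thing worth checking: `cv2.getRotationMatrix2D` takes `center` as an `(x, y)` point, i.e. `(column, row)`, while the function above builds it as `(row, col)`. For a non-square image those are different points, so the rotation pivots somewhere other than the image center. The sketch below uses only NumPy. `rotation_matrix_2d` is a hypothetical helper that rebuilds the 2x3 matrix from the formula in the OpenCV documentation, and `maps_to_itself` checks whether a point is the fixed pivot of that matrix. The 400x600 image size is an arbitrary assumption chosen to make the image non-square.

```python
import numpy as np

def rotation_matrix_2d(center, angle_deg, scale=1.0):
    """Hypothetical helper: rebuild the 2x3 affine matrix documented for
    cv2.getRotationMatrix2D. `center` is interpreted as (x, y)."""
    cx, cy = center
    a = scale * np.cos(np.deg2rad(angle_deg))
    b = scale * np.sin(np.deg2rad(angle_deg))
    return np.array([
        [a, b, (1 - a) * cx - b * cy],
        [-b, a, b * cx + (1 - a) * cy],
    ])

def maps_to_itself(mat, point):
    """True if the affine matrix leaves `point` (x, y) where it is."""
    x, y = point
    mx, my = mat @ np.array([x, y, 1.0])
    return bool(np.allclose([mx, my], [x, y]))

rows, cols = 400, 600                  # assumed non-square image (height, width)
true_center = (cols / 2, rows / 2)     # (x, y) of the image center

good = rotation_matrix_2d((cols / 2, rows / 2), 15)  # (x, y) order
bad = rotation_matrix_2d((rows / 2, cols / 2), 15)   # (row, col) order, as in rotateImage

print(maps_to_itself(good, true_center))  # True: pivots on the image center
print(maps_to_itself(bad, true_center))   # False: pivots on a different point
```

If this is the cause, the corresponding change in `rotateImage` would be something like `center = (col / 2, row / 2)`. For a square image both orders give the same point, so the mismatch would only show up when width and height differ.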