Mean squared error is a popular cost function used in machine learning:

(1/n) * sum((y - pred)**2)

The order of the terms in the subtraction doesn't matter, because each difference is squared: (y - pred)**2 equals (pred - y)**2.
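As a quick sanity check of that claim, here is a minimal plain-Python sketch that computes the loss with both orderings. The function names and the sample data are made up for illustration.

```python
def mse(y, pred):
    """Mean squared error written with the (y - pred) ordering."""
    return sum((yi - pi) ** 2 for yi, pi in zip(y, pred)) / len(y)

def mse_reversed(y, pred):
    """The same loss with the subtraction reversed: (pred - y)."""
    return sum((pi - yi) ** 2 for yi, pi in zip(y, pred)) / len(y)

# Hypothetical targets and predictions
y = [3.0, -0.5, 2.0, 7.0]
pred = [2.5, 0.0, 2.0, 8.0]

print(mse(y, pred))           # 0.375
print(mse_reversed(y, pred))  # 0.375
```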

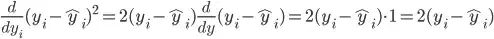

But once we differentiate, the result is no longer squared. For a single sample, the derivative of (y - pred)**2 with respect to the difference inside the square is:

2 * (y - pred)
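For reference, here is a small sketch that carries the chain rule one step further, to the derivative with respect to pred itself, which is the quantity the network's weights actually influence. The inner derivative is -1 for (y - pred) and +1 for (pred - y). The helper names and the single sample are hypothetical, and a central finite difference is used as an independent check.

```python
def grad_pred_y_minus_pred(y, pred):
    # L = (y - pred)**2 -> dL/dpred = 2 * (y - pred) * d(y - pred)/dpred = 2 * (y - pred) * (-1)
    return 2 * (y - pred) * -1

def grad_pred_pred_minus_y(y, pred):
    # L = (pred - y)**2 -> dL/dpred = 2 * (pred - y) * d(pred - y)/dpred = 2 * (pred - y) * 1
    return 2 * (pred - y) * 1

def numeric_grad(loss, y, pred, h=1e-6):
    # Central finite difference of the loss with respect to pred
    return (loss(y, pred + h) - loss(y, pred - h)) / (2 * h)

y, pred = 3.0, 2.5  # hypothetical single sample
print(grad_pred_y_minus_pred(y, pred))  # -1.0
print(grad_pred_pred_minus_y(y, pred))  # -1.0
print(numeric_grad(lambda t, p: (t - p) ** 2, y, pred))  # approximately -1.0
```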

Would the order make a difference for a neural network?

Reversing the order of y and pred flips the sign of this expression (it stays the same only when y == pred, where it is zero). Since this result is used to compute the gradient with respect to each weight, would the order influence how the neural network converges?
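One way to probe the question empirically is a toy experiment. The sketch below assumes a hypothetical one-weight linear model pred = w * x, made-up data generated by y = 2 * x, and plain gradient descent on MSE. It trains once with each ordering, applying the full chain rule down to w, and compares the learned weights.

```python
# Hypothetical toy data generated by y = 2 * x
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def train(reverse, lr=0.01, steps=200):
    """Fit pred = w * x by gradient descent on MSE.

    reverse=False differentiates (y - pred)**2, reverse=True differentiates (pred - y)**2.
    """
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = 0.0
        for x, y in zip(xs, ys):
            pred = w * x
            if reverse:
                # d/dw (pred - y)**2 = 2 * (pred - y) * (+1) * x
                grad_w += 2 * (pred - y) * x
            else:
                # d/dw (y - pred)**2 = 2 * (y - pred) * (-1) * x
                grad_w += 2 * (y - pred) * (-1) * x
        w -= lr * grad_w / n  # average over samples, then step downhill
    return w

w_a = train(reverse=False)
w_b = train(reverse=True)
print(w_a, w_b)  # identical values, both close to 2.0
```

In this sketch the two runs follow exactly the same trajectory, because the inner derivative contributes the sign that the reversed subtraction removes.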