Learning Rate Scaling for Dummies

I've always found the usual heuristics, which range from scaling the learning rate with the square root of the batch size to scaling it linearly with the batch size, to be a bit hand-wavy and fluffy, as is often the case in Deep Learning. Hence I devised my own theoretical framework to answer this question.

EDIT: Since I posted this answer, my paper on this topic has been published in the Journal of Machine Learning Research (https://www.jmlr.org/papers/volume23/20-1258/20-1258.pdf). I want to thank the Stack Overflow community for believing in my ideas, engaging with them and probing me, at a time when the research community dismissed me out of hand.

Learning Rate is a function of the Largest Eigenvalue

Let me start with two small sub-questions, which together answer the main question.

- Are there any cases where we can a priori know the optimal learning rate?

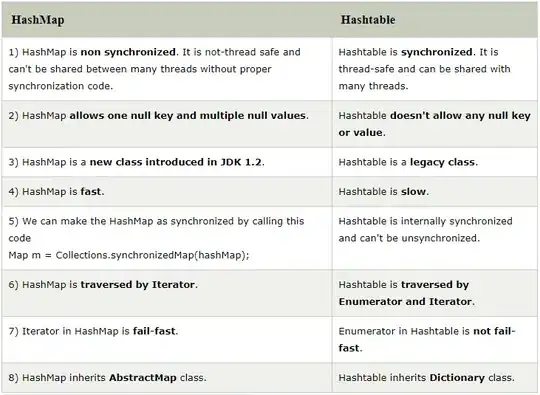

Yes. For a convex quadratic, the optimal learning rate is 2/(λ+μ), where λ and μ are the largest and smallest eigenvalues of the Hessian respectively (the Hessian is the second derivative of the loss, ∇∇L, which is a matrix).
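To make this concrete, here is a minimal sketch of plain gradient descent on a toy quadratic L(x) = 0.5 xᵀHx. The 2-D Hessian, its eigenvalues (1 and 10), the starting point and the step count are all made up for illustration. It compares the step size 2/(λ+μ) with a more conservative 1/λ and with a step just past 2/λ, the point where gradient descent on a quadratic stops converging.

```python
import numpy as np

def gradient_descent(H, x0, lr, steps):
    """Run plain gradient descent on L(x) = 0.5 * x^T H x and return the final iterate."""
    x = x0.copy()
    for _ in range(steps):
        x = x - lr * (H @ x)   # the gradient of this quadratic is H x
    return x

# Hypothetical 2-D Hessian with eigenvalues mu = 1 (smallest) and lam = 10 (largest).
mu, lam = 1.0, 10.0
H = np.diag([mu, lam])
x0 = np.array([1.0, 1.0])

lr_opt = 2.0 / (lam + mu)      # the optimal step size for the quadratic
lr_small = 1.0 / lam           # a conservative choice
lr_too_big = 2.1 / lam         # just past the stability limit 2 / lam

# Distance from the minimum (the origin) after 50 steps with each step size.
err_opt = np.linalg.norm(gradient_descent(H, x0, lr_opt, 50))
err_small = np.linalg.norm(gradient_descent(H, x0, lr_small, 50))
err_big = np.linalg.norm(gradient_descent(H, x0, lr_too_big, 50))

print(f"2/(lam+mu): {err_opt:.2e}   1/lam: {err_small:.2e}   2.1/lam: {err_big:.2e}")
```

The largest eigenvalue sets the ceiling on the usable step size, which is why the rest of the argument is about how that eigenvalue moves with batch size.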

- How do we expect these eigenvalues (which represent how much the loss changes along an infinitesimal move in the direction of the eigenvectors) to change as a function of batch size?

This is actually a little trickier to answer (it is what I developed the theory for in the first place), but it goes something like this.

Imagine that we have all the data, which would give us the full Hessian H. Instead, we only sub-sample the data, so we use a batch Hessian B. We can simply rewrite B = H + (B - H) = H + E, where E is some error or fluctuation matrix.

Under some technical assumptions on the nature of the elements of E, we can treat this fluctuation as a zero-mean random matrix, so the batch Hessian becomes a fixed matrix plus a random matrix.

For this model, the change in eigenvalues (which determines how large the learning rate can be) is known. In my paper there is another, fancier model, but the answer is more or less the same.
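The B = H + E picture can be checked numerically on a toy problem. The sketch below is not the setup from the paper: it assumes a linear least-squares loss, whose Hessian is XᵀX/n, on synthetic Gaussian data with one dominant direction. The sizes, the scale of that direction and the number of trials are all hypothetical choices. It averages the largest eigenvalue of the batch Hessian over random batches and prints it next to the full-data value.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: N samples in d dimensions with one dominant direction.
N, d = 4096, 20
scales = np.ones(d)
scales[0] = 3.0                        # one direction with much larger variance
X = rng.standard_normal((N, d)) * scales

def hessian(rows):
    """Hessian of the loss 0.5 * mean((rows @ w - y)**2) with respect to w."""
    return rows.T @ rows / len(rows)

def top_eig(M):
    """Largest eigenvalue of a symmetric matrix (eigvalsh sorts ascending)."""
    return np.linalg.eigvalsh(M)[-1]

full_top = top_eig(hessian(X))         # largest eigenvalue of the full Hessian H

def mean_batch_top(batch_size, trials=200):
    """Average largest eigenvalue of the batch Hessian B = H + E over random batches."""
    tops = []
    for _ in range(trials):
        idx = rng.choice(N, size=batch_size, replace=False)
        tops.append(top_eig(hessian(X[idx])))
    return float(np.mean(tops))

batch_sizes = [8, 32, 128, 512]
batch_tops = {b: mean_batch_top(b) for b in batch_sizes}
for b in batch_sizes:
    print(f"batch={b:4d}  top eigenvalue ~ {batch_tops[b]:.2f}  (full data: {full_top:.2f})")
```

On this toy problem the averaged largest eigenvalue should sit above the full-data value for small batches and shrink toward it as the batch grows, which is the behaviour the noise-matrix argument predicts.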

What actually happens? Experiments and Scaling Rules

I attach a plot of what happens when the largest eigenvalue of the full-data matrix lies far outside the spectrum of the noise matrix (usually the case). As we increase the mini-batch size, the size of the noise matrix decreases, so the largest eigenvalue of the batch Hessian also decreases, and hence larger learning rates can be used. This effect is initially proportional and remains approximately proportional up to a threshold, after which no appreciable decrease happens.

How well does this hold in practice? Very well, as shown in my plot below for VGG-16 without batch norm (see the paper for batch normalisation and ResNets).

I would hasten to add that for adaptive methods, if you use a small numerical stability constant (epsilon for Adam), the argument is a little different, because you have an interplay between the eigenvalues, the estimated eigenvalues and your stability constant. You actually end up with a square root rule up to a threshold. Quite why nobody is discussing this or has published this result is honestly a little beyond me.

But if you want my practical advice, stick with SGD: scale the learning rate in proportion to the batch size while the batch size is small, and stop increasing it beyond a certain point.
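As a rough sketch of that advice, the helper below scales a base learning rate linearly (for SGD) or with the square root (for the small-epsilon Adam case) and stops scaling once the batch size passes a threshold. The function name, its parameters and especially `threshold_batch` are hypothetical: the threshold depends on the problem and has to be found empirically.

```python
import math

def scaled_learning_rate(base_lr, base_batch, batch, threshold_batch, rule="linear"):
    """Scale base_lr from base_batch to batch, with no further gain past threshold_batch.

    rule="linear": proportional to batch size (the SGD advice above).
    rule="sqrt":   square-root scaling (the small-epsilon Adam case above).
    threshold_batch is a hypothetical, problem-dependent value found empirically.
    """
    if rule not in ("linear", "sqrt"):
        raise ValueError(f"unknown rule: {rule!r}")
    effective = min(batch, threshold_batch)   # stop scaling past the threshold
    ratio = effective / base_batch
    return base_lr * (ratio if rule == "linear" else math.sqrt(ratio))

# Example values, made up for illustration.
lr_sgd = scaled_learning_rate(0.1, base_batch=32, batch=128, threshold_batch=512)
lr_sgd_capped = scaled_learning_rate(0.1, base_batch=32, batch=2048, threshold_batch=512)
lr_adam = scaled_learning_rate(1e-3, base_batch=32, batch=128, threshold_batch=512, rule="sqrt")
print(lr_sgd, lr_sgd_capped, lr_adam)
```

Going from batch 32 to 128 multiplies the SGD rate by 4 and the Adam rate by 2, while a batch of 2048 gets the same rate as a batch of 512 because of the cap.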