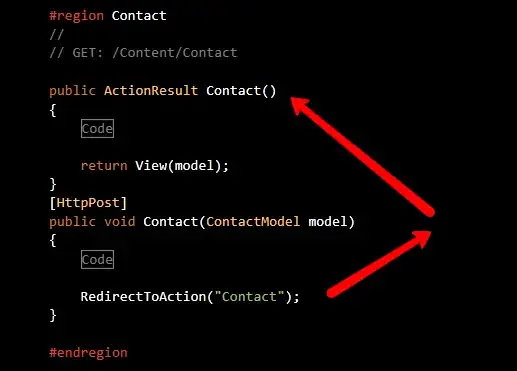

I am having a big problem when implementing a Variational Autoencoder, it being that all images end up looking like this:

The training set is CIFAR10 and the goal is to reconstruct similar images. While the feature map seems to be predicted correctly, I do not understand why the output still looks like this after 50 epochs.

I've tried both fewer and more filters; the model currently uses 128. Could this outcome be caused by the network architecture, or by the small number of epochs?

The loss function is MSE and the optimizer is RMSprop.
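For reference, a VAE objective is usually written as a reconstruction term plus a KL term, so here is a minimal NumPy sketch of that combination. It is not my actual training code: the function name `vae_loss`, the argument names `z_mean` and `z_log_var`, and the assumption of a diagonal Gaussian posterior with a standard normal prior are all illustrative.

```python
import numpy as np


def vae_loss(x, x_recon, z_mean, z_log_var):
    """Return (total, reconstruction, kl), each averaged over the batch.

    Assumption (hypothetical, not taken from my model): the encoder outputs
    z_mean and z_log_var of a diagonal Gaussian, and the prior is N(0, I).
    """
    batch = x.shape[0]
    # Reconstruction term: squared error summed over all pixels of each image.
    diff = x.reshape(batch, -1) - x_recon.reshape(batch, -1)
    recon = np.sum(diff ** 2, axis=1)
    # Closed-form KL(q(z|x) || N(0, I)), summed over the latent dimensions.
    kl = -0.5 * np.sum(1.0 + z_log_var - z_mean ** 2 - np.exp(z_log_var), axis=1)
    return float(np.mean(recon + kl)), float(np.mean(recon)), float(np.mean(kl))
```

Note that this sketch sums the squared error over pixels. If the MSE is averaged over pixels instead, the reconstruction term for a 32x32x3 CIFAR10 image becomes 3072 times smaller relative to the KL term, so the balance between the two terms may be worth checking.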

I've also tried implementing this architecture: https://github.com/chaitanya100100/VAE-for-Image-Generation/blob/master/src/cifar10_train.py, with similar results, if not worse.

I am very confused as to what the problem might be. The images are saved with matplotlib's pyplot, which plots the predictions and their real counterparts.
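To make the saving step concrete, this is roughly what it does, written as a minimal sketch. The helper name `save_comparison` and its parameters are hypothetical, and it assumes both arrays hold float RGB images of shape `(N, 32, 32, 3)` scaled to `[0, 1]`.

```python
import matplotlib
matplotlib.use("Agg")  # headless backend, so no display is required
import matplotlib.pyplot as plt
import numpy as np


def save_comparison(originals, reconstructions, path, n=8):
    """Save the first n originals (top row) above their reconstructions (bottom row)."""
    n = min(n, len(originals))
    fig, axes = plt.subplots(2, n, figsize=(1.5 * n, 3), squeeze=False)
    for i in range(n):
        for row, batch in enumerate((originals, reconstructions)):
            # imshow expects float RGB values in [0, 1]; clip anything outside that range.
            axes[row, i].imshow(np.clip(batch[i], 0.0, 1.0))
            axes[row, i].axis("off")
    fig.savefig(path, bbox_inches="tight")
    plt.close(fig)
```

A call would look like `save_comparison(x_test, decoded, "recon.png")`, where `x_test` and `decoded` are placeholder names for the real images and the model's predictions.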