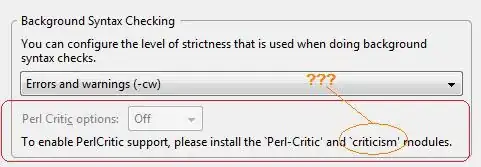

For two images, one taken in sunny weather and the other in rainy weather, with virtually no difference in content and objects except the weather, is there any metric that says they are highly similar?

-

I think it would be a bit better if you included example images... – Ash Oct 19 '18 at 19:56

-

Added few samples.. – code_Assasin Oct 20 '18 at 10:14

-

These appear to be computer-generated images, correct? Are all your images like that? – Mark Setchell Oct 20 '18 at 10:36

-

Not exactly.. I gave this as an example.. But the point is that we need a similarity metric that's content-based and robust to weather conditions.. – code_Assasin Oct 20 '18 at 10:59

3 Answers

NCC and SSIM are likely the best two to use when the lighting is different, which can cause brightness/contrast differences. The other metrics do not do any normalization of the brightness/contrast.

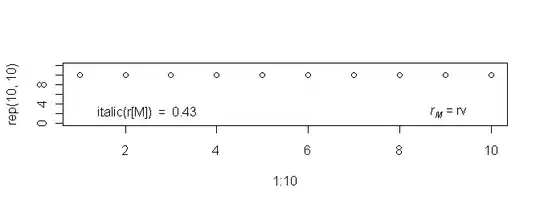

SSIM gives me 0.763003 for the sunny vs rainy and 0.236967 for the sunny vs other. This is a separation ratio of 3.22. NCC gives me 0.831495 and 0.220601, respectively. This is a separation ratio of 3.77. So slightly better. See Mark Setchell's answer for commands for those.
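The separation ratio quoted here is just the score of the matching pair divided by the score of the non-matching pair. A minimal Python sketch of that arithmetic, using the scores reported above (the function and variable names are illustrative, not part of ImageMagick):

```python
def separation_ratio(similar_score: float, dissimilar_score: float) -> float:
    """Score of the matching pair divided by the score of the non-matching pair."""
    return similar_score / dissimilar_score


# (sunny vs rainy, sunny vs other) scores as reported in this answer.
scores = {
    "SSIM": (0.763003, 0.236967),
    "NCC": (0.831495, 0.220601),
}

ratios = {name: separation_ratio(*pair) for name, pair in scores.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}")
```

A larger ratio means the metric separates "same scene, different weather" from "different scene" more clearly.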

One other way would be to do edge detection first on a grayscale equalized image. This mitigates against brightness/contrast changes and even color shifts.

Here is that approach using an 8-directional Sobel operator in Imagemagick.

convert bright.png -colorspace gray -equalize \
    -define convolve:scale='!' \
    -define morphology:compose=Lighten \
    -morphology Convolve 'Sobel:>' bright_sobel.png

convert dull.png -colorspace gray -equalize \
    -define convolve:scale='!' \
    -define morphology:compose=Lighten \
    -morphology Convolve 'Sobel:>' dull_sobel.png

convert other.png -colorspace gray -equalize \
    -define convolve:scale='!' \
    -define morphology:compose=Lighten \
    -morphology Convolve 'Sobel:>' other_sobel.png

compare -metric ncc bright_sobel.png dull_sobel.png null:

0.688626

compare -metric ncc bright_sobel.png other_sobel.png null:

0.0756445

This is a separation ratio of 9.1. So quite a bit better.

With edge detection, you can likely use other metrics as the normalization has been done already by the equalize and grayscale operations. But NCC may still be the best here.

See https://imagemagick.org/Usage/convolve/#sobel
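To illustrate why edge detection helps, here is a rough Python sketch showing that a Sobel gradient ignores a uniform brightness offset. Note that this is a simplification and not what the ImageMagick command above does exactly: it uses only the two axis-aligned Sobel kernels and the gradient magnitude, not the 8-directional variant, and the toy images are made up for the example.

```python
import math


def sobel_magnitude(img):
    """Gradient magnitude of a 2-D list of grey levels (interior pixels only)."""
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to horizontal change
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to vertical change
    height, width = len(img), len(img[0])
    out = []
    for y in range(1, height - 1):
        row = []
        for x in range(1, width - 1):
            gx = sum(kx[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(ky[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            row.append(math.sqrt(gx * gx + gy * gy))
        out.append(row)
    return out


# Toy 5x5 image with a vertical step edge, plus a uniformly brighter copy.
base = [[10, 10, 10, 50, 50] for _ in range(5)]
brighter = [[v + 40 for v in row] for row in base]

edges_base = sobel_magnitude(base)
edges_brighter = sobel_magnitude(brighter)
print(edges_base == edges_brighter)
```

Each kernel's weights sum to zero, so a constant offset cancels out. A contrast change (a multiplicative gain) only scales the edge image, which a normalized metric such as NCC then absorbs.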

ADDITION:

If one adds equalization to the original images, then the non-edge NCC results get better than at the top of this post:

convert bright.png -equalize bright_eq.png

convert dull.png -equalize dull_eq.png

convert other.png -equalize other_eq.png

compare -metric NCC bright_eq.png dull_eq.png null:

0.861087

compare -metric NCC bright_eq.png other_eq.png null:

0.204296

This gives a separation ratio of 4.21, which is slightly better than the 3.77 above without the equalization.
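For anyone wondering what equalization does to the pixel values, below is a small Python sketch of textbook histogram equalization on a flat list of grey levels. It is an illustration only; ImageMagick's -equalize may differ in details such as channel handling and quantum depth, and the sample pixel values are invented.

```python
def equalize(pixels, levels=256):
    """Histogram-equalize a flat list of integer grey levels in [0, levels)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative count of pixels at or below each grey level.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    n = len(pixels)
    if n == cdf_min:
        # Constant image: there is no contrast to stretch.
        return list(pixels)

    scale = (levels - 1) / (n - cdf_min)
    return [round((cdf[p] - cdf_min) * scale) for p in pixels]


dull = [100, 100, 110, 120, 120, 130]   # low contrast, darker
bright = [p + 80 for p in dull]          # same content, brighter

print(equalize(dull))
print(equalize(bright))
```

Both lists map to the same equalized values because equalization depends only on the rank order of the grey levels, which is why it helps before computing a similarity metric.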

ADDITION2:

Here is another way, which uses my script, redist, that attempts to change the image statistics to a specific mean and standard deviation. (see http://www.fmwconcepts.com/imagemagick/index.php)

I apply it to all images with the same arguments to normalize them to the same mean and standard deviation, and then do a Canny edge extraction before doing the compare. The redist script is similar to equalize, but uses a Gaussian distribution rather than a flat or constant one. An alternative to redist would be local area histogram equalization (lahe) or contrast limited adaptive histogram equalization (clahe). See https://en.wikipedia.org/wiki/Adaptive_histogram_equalization.

The numbers in the commands below are normalized (in range 0 to nominally 100 %) and represent the mean, the one-sigma offset on the left side of the peak, the one-sigma offset on the right side of the peak, where sigma is like the standard deviation.

redist 50,50,50 bright.png bright_rdist.png

redist 50,50,50 dull.png dull_rdist.png

redist 50,50,50 other.png other_rdist.png

convert bright_rdist.png -canny 0x1+10%+30% bright_rdist_canny.png

convert dull_rdist.png -canny 0x1+10%+30% dull_rdist_canny.png

convert other_rdist.png -canny 0x1+10%+30% other_rdist_canny.png

compare -metric ncc bright_rdist_canny.png dull_rdist_canny.png null:

0.345919

compare -metric ncc bright_rdist_canny.png other_rdist_canny.png null:

0.0323863

This gives a separation ratio of 10.68

-

Good insights! I was thinking of edge detection first too, but wondered about edges disappearing in the rain. – Mark Setchell Oct 20 '18 at 19:20

-

I tried canny edges first, but that was just what happened, even with equalization. – fmw42 Oct 20 '18 at 19:26

-

A Normalised Cross Correlation seems pretty effective at spotting the similarity. I just used ImageMagick from the command-line in Terminal, but all image processing packages should have something offering similar functionality.

Let's call your three images rainy.png, sunny.png and other.png. Then, the NCC is 1 when the images are identical and 0 when they have nothing in common.
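If you want to see roughly what such a metric computes, here is a minimal Python sketch of a zero-mean normalised cross correlation on flat pixel lists. It is a textbook formulation rather than ImageMagick's exact implementation, and the pixel values are toy data. Note that this zero-mean variant can also go negative for anti-correlated images.

```python
import math


def ncc(a, b):
    """Zero-mean normalised cross correlation of two equal-length pixel lists."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    numerator = sum(x * y for x, y in zip(da, db))
    denominator = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denominator == 0:
        # A completely flat image has no variation to correlate against.
        return 0.0
    return numerator / denominator


sunny = [10, 20, 30, 40]
rainy = [0.5 * p + 5 for p in sunny]   # same content, lower contrast and brightness
other = [30, 10, 40, 20]               # unrelated content

print(ncc(sunny, rainy))
print(ncc(sunny, other))
```

Because the mean is subtracted and the result is divided by both standard deviations, a linear change in brightness and contrast leaves the score unchanged.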

So, compare rainy.png with sunny.png and they are 83% similar:

convert -metric NCC sunny.png rainy.png -compare -format "%[distortion]" info:

0.831495

Now compare rainy.png with other.png and they are 21% similar:

convert -metric NCC rainy.png other.png -compare -format "%[distortion]" info:

0.214111

And finally, compare sunny.png with other.png and they are 22% similar:

convert -metric NCC sunny.png other.png -compare -format "%[distortion]" info:

0.22060

ImageMagick also offers other metrics, such as Mean Absolute Error, Structural Similarity and so on. To get a list of options, use:

identify -list metric

Sample Output

- AE

- DSSIM

- Fuzz

- MAE

- MEPP

- MSE

- NCC

- PAE

- PHASH

- PSNR

- RMSE

- SSIM

Choose the one you want; for example, use -metric SSIM instead of -metric NCC if you want Structural Similarity rather than Normalised Cross Correlation.

-

No mutual information in Image Magick? I think that would be the best choice. But all of these require otherwise identical images—a shift of the camera, as is likely when the image is taken on another day, even a small shift, would render these comparisons useless. – Cris Luengo Oct 20 '18 at 22:12

-

@CrisLuengo Sorry, what do you mean by *"mutual information"*? I am unfamiliar with the term. AFAIK, the images are computer-generated, so they likely have the same angle down to the last pixel. – Mark Setchell Oct 20 '18 at 22:18

-

It measures image similarity, using a joint histogram. The more compact the joint histogram, the more similar the two images. If they are unrelated, then there is no relationship between intensities in the two images, and you see this in the histogram. It is sort of a correlation, but not caring about the order of the intensities. It is used a lot in medical imaging, for example when registering an MRI and a CAT scan together. Different organs show up with different intensities in both modalities, which makes correlations and the like useless. – Cris Luengo Oct 20 '18 at 23:09

-

@CrisLuengo Ok, many thanks for the explanation. I shall do some Googling and learn something :-) – Mark Setchell Oct 21 '18 at 08:36

-

@CrisLuengo I had a similar query.. With small linear displacements also methods like SSIM etc will have lower scores and that's not desirable – code_Assasin Oct 23 '18 at 04:19

-

@code_Assasin: yes, if the actual application uses real photographs, you will need to include some form of registration to align the two images you want to compare. – Cris Luengo Oct 23 '18 at 04:37
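The mutual information measure described in the comments above can be sketched in a few lines of Python. This is a bare-bones illustration computed from a joint histogram of already-quantised grey levels; real images would normally be binned into a small number of intensity levels first, and the sample sequences are invented.

```python
import math
from collections import Counter


def mutual_information(a, b):
    """Mutual information in bits between two equal-length grey-level sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    n = len(a)
    joint = Counter(zip(a, b))   # joint histogram of intensity pairs
    hist_a = Counter(a)          # marginal histogram of the first image
    hist_b = Counter(b)          # marginal histogram of the second image
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # p_xy / (p_x * p_y), written with raw counts.
        mi += p_xy * math.log2(p_xy * n * n / (hist_a[x] * hist_b[y]))
    return mi


first = [0, 0, 1, 1, 2, 2, 3, 3]
remapped = [3, 3, 0, 0, 2, 2, 1, 1]    # same structure, intensities shuffled
unrelated = [0, 1, 0, 1, 0, 1, 0, 1]   # no relationship to `first`

print(mutual_information(first, remapped))
print(mutual_information(first, unrelated))
```

The remapped sequence scores as high as a perfect copy would, even though a plain correlation between the two would be poor. That is the property that makes the measure useful across modalities.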

I've used intermediate layers from CNNs for this sort of robust comparison in past projects. Basically, you take a CNN that has been trained for some task such as image segmentation, and then try to identify layers, or combinations of layers, that offer a good balance of geometric and photometric features for your matching. Then at test time, you pass the images through the CNN and compare those features using, for example, a Euclidean distance.

My images were similar to yours, and I needed something fast, so at that time Enet was a good choice for me (there are better choices now). I ended up using a combination of features from its 21st and 5th layers, which worked well in practice.

However, if your images are from a sequence where you can exploit temporal information, I strongly recommend that you take a look at SeqSLAM (sorry, couldn't find a non-paywall version). The interesting thing about it is that it doesn't require any CNNs, is real-time and, if memory serves, uses just very simple pyramidal intensity-based comparisons for the matching, similar to SPP. Also see this paper, which improves SeqSLAM with layers from CNNs.
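As a rough illustration of the comparison step only, the Python sketch below combines features from two layers into one descriptor and compares descriptors with a Euclidean distance. The feature values are made-up placeholders standing in for real CNN activations, the helper names are hypothetical, and normalising each layer before concatenation is an assumption of this sketch rather than something the approach above requires.

```python
import math


def l2_normalize(vec):
    """Scale a feature vector to unit length (left unchanged if all zeros)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else list(vec)


def descriptor(early_layer, late_layer):
    """Concatenate per-layer normalised features into a single descriptor."""
    return l2_normalize(early_layer) + l2_normalize(late_layer)


def feature_distance(desc_a, desc_b):
    """Euclidean distance between two descriptors of equal length."""
    return math.dist(desc_a, desc_b)


# Placeholder activations: (early-layer features, late-layer features).
sunny = descriptor([0.9, 0.1, 0.4], [0.2, 0.8])
rainy = descriptor([0.8, 0.2, 0.5], [0.3, 0.7])
other = descriptor([0.1, 0.9, 0.2], [0.9, 0.1])

print(feature_distance(sunny, rainy))
print(feature_distance(sunny, other))
```

In practice the two feature lists would come from flattened (and probably pooled) activations of the chosen layers, and a smaller distance means a closer match.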
