I am trying to add this dependency to the Spark 2 interpreter in Zeppelin:

https://mvnrepository.com/artifact/org.apache.spark/spark-sql_2.11/2.2.0

However, after adding the dependency, I get a NullPointerException when running any code.

You don't need to add spark-sql; it is already included in the Spark interpreter.

Just add %spark.sql at the top of a paragraph to get an SQL environment:

https://zeppelin.apache.org/docs/0.8.0/interpreter/spark.html#overview
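For example, something like the following should work with no extra dependency. This is only a sketch: it assumes the `spark` session that Zeppelin's Spark interpreter creates for you, and the sample rows and the view name `calls` are made up for illustration.

```scala
// Runs in a Spark (Scala) paragraph; `spark` is the SparkSession provided by Zeppelin.
import spark.implicits._

// Hypothetical sample data, only for illustration.
val df = Seq(("01/11/17", "Medical"), ("02/03/17", "Fire")).toDF("CallDate", "CallType")

// Register a temporary view so SQL can refer to the DataFrame by name.
df.createOrReplaceTempView("calls")

// Query it through the Spark SQL support that ships with the interpreter.
spark.sql("SELECT CallType, count(*) AS n FROM calls GROUP BY CallType").show()
```

Once the view is registered, the same SELECT statement can also go in its own paragraph that starts with %spark.sql.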

I solved the problem. I was defining a class in Scala, and the functions `to_date` and `date_format` were used inside the class while my import statements were outside it. Moving the import statements inside the class body fixed it.

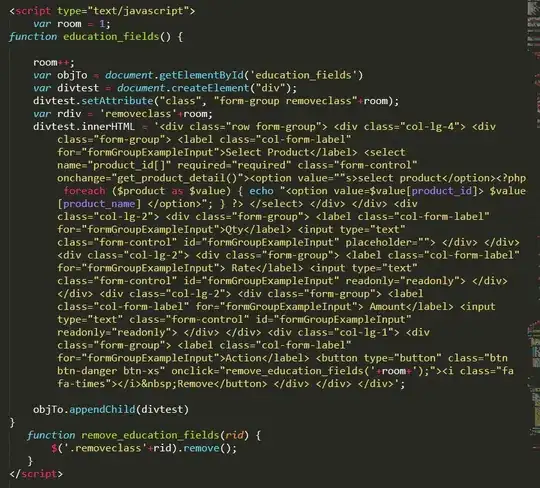

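    case class HelperClass() {
      // Imports placed inside the class body, as described above, so that
      // to_date, date_format and col are in scope for the methods below.
      import org.apache.spark.sql.functions._
      import org.apache.spark.sql.types._

      // fire_servicesDF is assumed to be defined earlier in the notebook.
      var fire_services_customDF = fire_servicesDF
      var data = fire_servicesDF

      // Parse CallDate (MM/dd/yy) and store it as a timestamp in CallDateTmp.
      def SetDatatypes(): Unit = {
        data = fire_services_customDF.withColumn(
          "CallDateTmp",
          date_format(to_date(col("CallDate"), "MM/dd/yy"), "yyyy-MM-dd").cast("timestamp"))
      }

      def PrintSchema(): Unit = {
        data.printSchema
      }
    }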
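
In case it helps anyone else, this is roughly how I call it afterwards. It is a minimal sketch that assumes `fire_servicesDF` was already loaded in an earlier paragraph and has a string column named `CallDate`; the name `helper` is just for illustration.

```scala
// Assumes HelperClass and fire_servicesDF are already defined in the notebook.
val helper = HelperClass()

// Adds the CallDateTmp column to helper.data.
helper.SetDatatypes()

// The printed schema should now include CallDateTmp alongside the original columns.
helper.PrintSchema()
```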