I have a flow: QueryDatabaseTable -> ConvertRecord -> PutElasticsearchHttpRecord. I am trying to fetch the full data from a MySQL database and feed it into Elasticsearch so that I can run analytics on it in Kibana. However, my data contains repeated values, as shown below (only the Machine Name, value1 and Value2 values repeat; ID and Date differ on every row):

| ID | Machine Name | value1 | Value2 | Date |
|----|--------------|--------|--------|------|
| 1 | abc | 10 | 34 | 2018-09-27 10:40:10 |
| 2 | abc | 10 | 34 | 2018-09-27 10:41:14 |
| 3 | abc | 10 | 34 | 2018-09-27 10:42:19 |
| 4 | xyz | 12 | 45 | 2018-09-27 10:45:19 |

In my table, ID is the primary key and the Date (timestamp) field keeps updating. What I want is to fetch only one record for each Machine Name. The output table I want looks like this:

| ID | Machine Name | value1 | Value2 | Date |
|----|--------------|--------|--------|------|
| 1 | abc | 10 | 34 | 2018-09-27 10:40:10 |
| 4 | xyz | 12 | 45 | 2018-09-27 10:45:19 |
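To make the expected result concrete, here is a minimal sketch of the deduplication rule, written in Python against an in-memory SQLite table rather than NiFi or MySQL. The table name `machine_data`, the snake_case column names, and the rule "keep the row with the smallest ID per Machine Name" are assumptions inferred from the sample output above.

```python
import sqlite3

# Sample rows copied from the question. The table name "machine_data" and the
# snake_case column names are assumptions made for this sketch only.
ROWS = [
    (1, "abc", 10, 34, "2018-09-27 10:40:10"),
    (2, "abc", 10, 34, "2018-09-27 10:41:14"),
    (3, "abc", 10, 34, "2018-09-27 10:42:19"),
    (4, "xyz", 12, 45, "2018-09-27 10:45:19"),
]

# Keep only the row with the smallest ID for each machine name
# (assumed rule, matching the desired output table).
DEDUP_SQL = """
    SELECT t.id, t.machine_name, t.value1, t.value2, t.date
    FROM machine_data AS t
    JOIN (
        SELECT machine_name, MIN(id) AS first_id
        FROM machine_data
        GROUP BY machine_name
    ) AS firsts ON t.id = firsts.first_id
    ORDER BY t.id
"""


def first_row_per_machine(rows):
    """Load rows into an in-memory SQLite table and return one row per machine."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE machine_data ("
            "id INTEGER PRIMARY KEY, machine_name TEXT, "
            "value1 INTEGER, value2 INTEGER, date TEXT)"
        )
        conn.executemany("INSERT INTO machine_data VALUES (?, ?, ?, ?, ?)", rows)
        return conn.execute(DEDUP_SQL).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for row in first_row_per_machine(ROWS):
        print(row)
```

This only illustrates the rows I expect to keep. Whether an equivalent query can be run inside QueryDatabaseTable or QueryRecord is not something this sketch verifies.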

How can I achieve this in NiFi? The objective is to drop the duplicate rows. If this is possible, which processor should I use and which properties should I set?

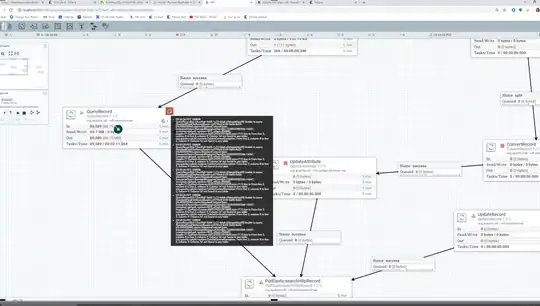

I am getting the following error in the QueryRecord processor:

Any suggestions are much appreciated. Thank you.