Intents represent a user's input (typically what they say), not what you are "doing".

Although you can redirect from a webhook (such as your I2 handler) to an event (which might be picked up by I1), you can't do it "after" the webhook replies: the redirect replaces the result from the webhook instead of following it. (And, while possible, it is usually not the best approach to a problem.)

You also cannot do this from the Dialogflow configuration. It must be done through a webhook.
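For reference, here is a rough sketch of what such a redirect looks like in a webhook response, assuming the Dialogflow ES (v2) webhook format and its `followupEventInput` field. The helper name `redirect_to_event` and the event name `I1_EVENT` are made up for illustration; use whatever event your I1 Intent actually listens for.

```python
import json

def redirect_to_event(event_name, parameters=None, language_code="en-US"):
    """Build a webhook response that asks Dialogflow to trigger an event.

    Assumes the Dialogflow ES (v2) webhook response format. No reply text
    is included: the followup event takes over, so whatever Intent picks
    up the event supplies the reply instead.
    """
    return {
        "followupEventInput": {
            "name": event_name,            # hypothetical event name
            "languageCode": language_code,
            "parameters": parameters or {},
        }
    }

response = redirect_to_event("I1_EVENT", {"source": "I2"})
print(json.dumps(response, indent=2))
```

Note that the response carries only the event, which is why this can't be used to say something first and redirect afterwards.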

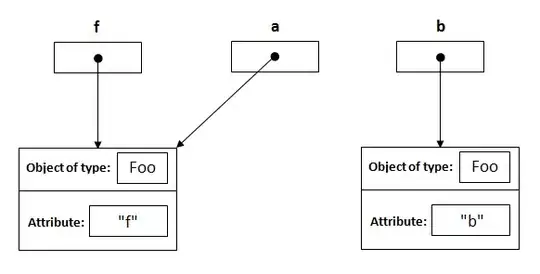

Update based on your diagram.

Your diagram illustrates why we encourage people to think about conversations and what the user says rather than "what do we do". Conversation diagrams aren't quite the same as flow charts. Let me restructure that as a few scripts (which we will annotate later).

Script 1:

User: Talk to number or letter

Agent: Welcome! Would you like to hear a number or letter?

User: How about a number

Agent: 1 is the loneliest number. Would you like another?

User: Yes

Agent: 2 is the only even prime. Care for another?

User: No

Agent: Ok, would you like to hear a number or letter?

Script 2:

User: Talk to number or letter

Agent: Welcome! Would you like to hear a number or a letter?

User: Number, please

Agent: 42 is the answer. Another one, so to speak?

User: sure

Agent: 21 is blackjack. Care for another?

User: nope

Agent: Ok, care for a letter or a number?

Script 3:

User: Talk to number or letter

Agent: Howdy! Would you like a letter or a number?

User: Let's go for a number

Agent: A googol is 10 to the 100th power. Ready for another?

User: How about a letter this time

Agent: The letter "A" is one of only two letters that are also words. Want another?

There are a few points to learn from this:

- People respond in many different ways when asked a question. Intents use many sample phrases, plus some ML algorithms, to match what the user says to one of the ways they might reply.

- In real conversations, users can take the conversation in any direction. So although we've asked a yes or no question, they may try to take the conversation in a whole different direction instead.

- How we reply depends on two things:

  - What state we were in

  - What the user says

- A consequence of the first of those (the state we were in) is that we should keep track of the user's state to determine what we say, so the new state becomes part of the reply, even if the user doesn't see that.
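As a minimal sketch of the last two points (in Python, though any webhook language works the same way), a handler can take the state we were in plus the matched Intent and return both the text and the new state. The names `Reply` and `respond`, the state names, and the placeholder reply text are all illustrative assumptions; the Intent names are the ones used in the annotated scripts further down.

```python
from dataclasses import dataclass

@dataclass
class Reply:
    text: str   # what the user hears
    state: str  # what we remember for the next turn (the user never sees it)

def respond(state: str, intent: str) -> Reply:
    """Pick a reply from the state we were in and what the user said."""
    # "yes" means "more of the same", so it only makes sense given the state.
    if intent == "intent.number" or (intent == "intent.yes" and state == "number"):
        return Reply("(a number fact) Would you like another?", "number")
    if intent == "intent.letter" or (intent == "intent.yes" and state == "letter"):
        return Reply("(a letter fact) Want another?", "letter")
    # Welcome, "no", or anything unexpected: go back to the prompt.
    return Reply("Would you like to hear a number or letter?", "prompt")

print(respond("number", "intent.yes"))
print(respond("number", "intent.no"))
```

The same "yes" produces a number fact or a letter fact depending only on the stored state, which is why the state has to travel with the reply.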

With that, let's add a little more information to see what Intent would get matched and then what our webhook would do - both in terms of state set and the reply sent.

Script 1:

User: Talk to number or letter

Match: intent.welcome

Logic: Set replyState to "prompt"

Pick a response for the current replyState ("prompt")

and the intent that was matched ("intent.welcome")

Agent: Welcome! Would you like to hear a number or letter?

User: How about a number

Match: intent.number

Logic: Set replyState to "number"

Pick a response for the current replyState ("number")

Agent: 1 is the loneliest number. Would you like another?

User: Yes

Match: intent.yes

Logic: Pick a response for the current replyState ("number")

Agent: 2 is the only even prime. Care for another?

User: No

Match: intent.no

Logic: Set replyState to "prompt"

Pick a response for the current replyState ("prompt")

and the intent that was matched (not "intent.welcome")

Agent: Ok, would you like to hear a number or letter?

With this, we can see that our replies are based on a combination of the current state and the user's intent. (Our state could be more complex, to keep track of what the user has heard, how many times they've visited, etc. This is very simplified.)

We also see that "yes" doesn't change the state. It doesn't need to.
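One way to picture this is a small transition table in which "intent.yes" simply has no entry. This is only a sketch: `TRANSITIONS`, `next_state`, `trace`, and the initial `"start"` state are names invented for the example, not anything Dialogflow provides.

```python
# Intents that move the conversation to a new replyState.
# "intent.yes" is deliberately absent: it keeps whatever state we are in.
TRANSITIONS = {
    "intent.welcome": "prompt",
    "intent.number": "number",
    "intent.letter": "letter",
    "intent.no": "prompt",
}

def next_state(state, intent):
    """Return the new replyState, or the old one if the intent doesn't change it."""
    return TRANSITIONS.get(intent, state)

def trace(intents, state="start"):
    """Replay a list of matched intents and record the replyState after each."""
    states = []
    for intent in intents:
        state = next_state(state, intent)
        states.append(state)
    return states

# The intents matched in Script 1, in order.
script_1 = ["intent.welcome", "intent.number", "intent.yes", "intent.no"]
print(trace(script_1))  # ['prompt', 'number', 'number', 'prompt']
```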

If we look at script 2, we'll see it plays out identically:

User: Talk to number or letter

Match: intent.welcome

Logic: Set replyState to "prompt"

Pick a response for the current replyState ("prompt")

and the intent that was matched ("intent.welcome")

Agent: Welcome! Would you like to hear a number or a letter?

User: Number, please

Match: intent.number

Logic: Set replyState to "number"

Pick a response for the current replyState ("number")

Agent: 42 is the answer. Another one, so to speak?

User: sure

Match: intent.yes

Logic: Pick a response for the current replyState ("number")

Agent: 21 is blackjack. Care for another?

User: nope

Match: intent.no

Logic: Set replyState to "prompt"

Pick a response for the current replyState ("prompt")

and the intent that was matched (not "intent.welcome")

Agent: Ok, care for a letter or a number?

In fact, if you look at the "Match" and "Logic" parts, they are identical conversations as far as the program is concerned. The only difference is the exact words the user used and the exact phrases we replied with. They are, fundamentally, the exact same conversation.
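To make that concrete, here is a toy comparison. The exact-phrase lookup below is only a stand-in for Dialogflow's matching (which uses sample phrases plus ML and is far more forgiving); `SAMPLE_PHRASES` and `match` are hypothetical names for this sketch.

```python
# A toy stand-in for Intent matching: exact phrases only.
SAMPLE_PHRASES = {
    "intent.welcome": ["talk to number or letter"],
    "intent.number": ["how about a number", "number, please"],
    "intent.yes": ["yes", "sure"],
    "intent.no": ["no", "nope"],
}
PHRASE_TO_INTENT = {
    phrase: intent
    for intent, phrases in SAMPLE_PHRASES.items()
    for phrase in phrases
}

def match(utterance):
    """Map what the user said to the name of the matched intent."""
    return PHRASE_TO_INTENT[utterance.strip().lower()]

script_1 = ["Talk to number or letter", "How about a number", "Yes", "No"]
script_2 = ["Talk to number or letter", "Number, please", "sure", "nope"]

matched_1 = [match(u) for u in script_1]
matched_2 = [match(u) for u in script_2]

print(matched_1)
print(matched_1 == matched_2)  # True
```

Once the words have been reduced to Intents, the webhook sees the same sequence for both scripts.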

How does this play out in script 3?

User: Talk to number or letter

Match: intent.welcome

Logic: Set replyState to "prompt"

Pick a response for the current replyState ("prompt")

and the intent that was matched ("intent.welcome")

Agent: Howdy! Would you like a letter or a number?

User: Let's go for a number

Match: intent.number

Logic: Set replyState to "number"

Pick a response for the current replyState ("number")

Agent: A googol is 10 to the 100th power. Ready for another?

User: How about a letter this time

Match: intent.letter

Logic: Set replyState to "letter"

Pick a response for the current replyState ("letter")

Agent: The letter "A" is one of only two letters that are also words. Want another?

Here, the user has suddenly requested we jump to an entirely different state. But that isn't a problem - Dialogflow treats this the same as if they had requested that state from the prompting question, and the handler for that Intent reacts the same way.

So instead of having to build in many followup intents, we aim to capture what the user is saying, and then use our webhook to change the state based on that.
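A webhook along these lines might look roughly like the following. This is a sketch under assumptions: it assumes the Dialogflow ES (v2) webhook request and response JSON, and it stores the state in a context, which is one option among several. The context name `reply-state`, the `replyState` parameter, the sample session path, and the placeholder reply text are all invented for the example.

```python
CONTEXT = "reply-state"  # hypothetical context used to carry our state

# Intents that change the state; "intent.yes" is absent, so it keeps the state.
TRANSITIONS = {
    "intent.welcome": "prompt",
    "intent.number": "number",
    "intent.letter": "letter",
    "intent.no": "prompt",
}

def read_state(request):
    """Find the replyState saved on an earlier turn (default: "prompt")."""
    suffix = "/contexts/" + CONTEXT
    for ctx in request["queryResult"].get("outputContexts", []):
        if ctx["name"].endswith(suffix):
            return ctx.get("parameters", {}).get("replyState", "prompt")
    return "prompt"

def handle(request):
    """Turn a webhook request into a response carrying the reply and new state."""
    intent = request["queryResult"]["intent"]["displayName"]
    state = TRANSITIONS.get(intent, read_state(request))
    return {
        "fulfillmentText": f"(a reply picked for state {state!r})",
        "outputContexts": [{
            "name": f"{request['session']}/contexts/{CONTEXT}",
            "lifespanCount": 5,
            "parameters": {"replyState": state},
        }],
    }

# The last turn of Script 3: we were in "number" and the user asks for a letter.
session = "projects/my-project/agent/sessions/abc123"  # made-up session path
request = {
    "session": session,
    "queryResult": {
        "intent": {"displayName": "intent.letter"},
        "outputContexts": [{
            "name": f"{session}/contexts/{CONTEXT}",
            "parameters": {"replyState": "number"},
        }],
    },
}
print(handle(request)["outputContexts"][0]["parameters"])
```

The handler never needs a separate followup Intent for "a letter while hearing numbers": the same "intent.letter" is matched, and the state changes accordingly.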