Consider the following loop:

    .loop:
        add rsi, STRIDE
        mov eax, dword [rsi]
        dec ebp
        jg .loop

where STRIDE is some non-negative integer and rsi contains a pointer to a buffer defined in the bss section. This loop is the only loop in the code, and the buffer is not initialized or touched before the loop. On Linux, all of the 4K virtual pages of the buffer will be mapped on demand to the same physical page (the shared zero page).
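To make "per page accessed" concrete, here is a small sketch that counts how many distinct 4K pages the loads of this loop touch for a given stride. It assumes the buffer starts page-aligned and that `iterations` is the initial value of ebp; the function name and its parameters are made up for this illustration and are not part of the measured code.

```python
def pages_touched(stride, iterations, page_size=4096, load_size=4, start=0):
    """Count the distinct pages covered by the loads of the loop above.

    The loop adds the stride *before* each load, so the k-th load
    (k = 1..iterations) reads the bytes
    [start + k*stride, start + k*stride + load_size).
    """
    pages = set()
    for k in range(1, iterations + 1):
        first = start + k * stride
        last = first + load_size - 1
        pages.add(first // page_size)
        # Differs from the first page only when the load straddles a boundary.
        pages.add(last // page_size)
    return len(pages)


print(pages_touched(0, 1000))    # stride 0 keeps hitting the same page
print(pages_touched(4096, 10))   # one new page per iteration
print(pages_touched(4094, 1))    # a 4-byte load at offset 4094 spans two pages
```

Note that strides that are not a multiple of 4 can make a single load straddle two pages, which is one reason the per-page numbers may not be perfectly uniform across strides.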

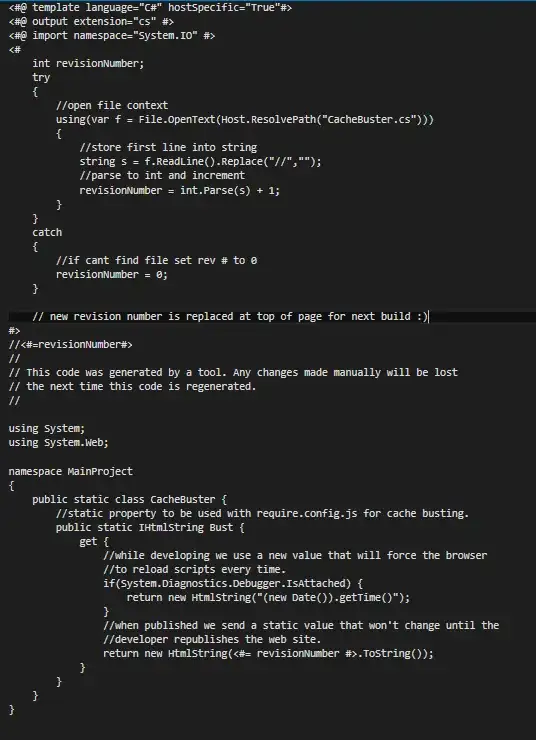

I've run this code for every stride in the range 0-8192. The measured number of minor and major page faults is exactly 1 and 0, respectively, per page accessed. I've also measured all of the following performance events on Haswell for all of the strides in that range.

- DTLB_LOAD_MISSES.MISS_CAUSES_A_WALK: Misses in all TLB levels that cause a page walk of any page size.
- DTLB_LOAD_MISSES.WALK_COMPLETED_4K: Completed page walks due to demand load misses that caused 4K page walks in any TLB levels.
- DTLB_LOAD_MISSES.WALK_COMPLETED_2M_4M: Completed page walks due to demand load misses that caused 2M/4M page walks in any TLB levels.
- DTLB_LOAD_MISSES.WALK_COMPLETED_1G: Load miss in all TLB levels causes a page walk that completes. (1G).
- DTLB_LOAD_MISSES.WALK_COMPLETED: Completed page walks in any TLB of any page size due to demand load misses.

The two hugepage counters (WALK_COMPLETED_2M_4M and WALK_COMPLETED_1G) are zero for all strides. The other three counters are interesting, as the following graph shows.

For most strides, the MISS_CAUSES_A_WALK event occurs 5 times per page accessed and the WALK_COMPLETED_4K and WALK_COMPLETED events each occur 4 times per page accessed. This means that all the completed page walks are for 4K pages. However, there is a fifth page walk that does not get completed. Why are there so many page walks per page? What is causing these page walks? Perhaps when a page walk triggers a page fault, there will be another page walk after the fault is handled, so this might be counted as two completed page walks. But how come there are 4 completed page walks and one apparently canceled walk? Note that there is a single page walker on Haswell (compared to two on Broadwell).
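The arithmetic behind these per-page numbers can be sketched as follows. The raw totals are hypothetical values chosen only to reproduce the 5/4/4 ratios described above, and the page count is taken to be the minor page-fault count; the helper names are mine.

```python
def per_page(counts, pages):
    """Normalize raw event counts by the number of pages accessed."""
    return {name: value / pages for name, value in counts.items()}


def uncompleted_walks(counts):
    """Walks that were started but not counted as completed."""
    return counts["MISS_CAUSES_A_WALK"] - counts["WALK_COMPLETED"]


# Hypothetical raw totals, NOT measured data.
raw = {
    "MISS_CAUSES_A_WALK": 5000,
    "WALK_COMPLETED_4K": 4000,
    "WALK_COMPLETED": 4000,
}
pages = 1000  # assumed equal to the number of minor page faults

normalized = per_page(raw, pages)
print(normalized)
print(uncompleted_walks(raw) / pages)
```

Since WALK_COMPLETED equals WALK_COMPLETED_4K, every completed walk is a 4K walk, and the difference between MISS_CAUSES_A_WALK and WALK_COMPLETED is the one walk per page that starts but never completes.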

I realize there is a TLB prefetcher that appears to only be capable of prefetching the next page as discussed in this thread. According to that thread, the prefetcher walks do not appear to be counted as MISS_CAUSES_A_WALK or WALK_COMPLETED_4K events, which I agree with.

There seem to be two reasons for these high event counts: (1) a page fault causes the instruction to be re-executed, which causes a second page walk for the same page, and (2) multiple concurrent accesses miss in the TLBs. When both are eliminated, by allocating memory with MAP_POPULATE and adding an LFENCE instruction after the load instruction, one MISS_CAUSES_A_WALK event and one WALK_COMPLETED_4K event occur per page. Without LFENCE, the counts are a little larger per page.
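As a rough illustration of what MAP_POPULATE changes at the OS level, the sketch below maps an anonymous buffer with and without the flag and counts the minor faults taken while reading one byte per page. This is only a stand-in for the real experiment: it does not reproduce the assembly loop or the LFENCE, the function name is made up, and the exact fault counts depend on the kernel and environment.

```python
import mmap
import resource

PAGE = mmap.PAGESIZE


def minor_faults_while_touching(populate, pages=256):
    """Map an anonymous private buffer, read one byte per page, and
    return the number of minor faults taken during the reads."""
    flags = mmap.MAP_PRIVATE
    if populate:
        # mmap.MAP_POPULATE is exposed on Linux in Python 3.10+. If this
        # interpreter lacks it, fall back to 0 (no pre-faulting at all).
        flags |= getattr(mmap, "MAP_POPULATE", 0)
    buf = mmap.mmap(-1, pages * PAGE, flags=flags)
    before = resource.getrusage(resource.RUSAGE_SELF).ru_minflt
    for off in range(0, pages * PAGE, PAGE):
        buf[off]  # one read per page
    after = resource.getrusage(resource.RUSAGE_SELF).ru_minflt
    buf.close()
    return after - before


lazy = minor_faults_while_touching(populate=False)
prefaulted = minor_faults_while_touching(populate=True)
print("minor faults without MAP_POPULATE:", lazy)
print("minor faults with MAP_POPULATE:   ", prefaulted)
```

On a typical Linux system I would expect the first number to be close to the number of pages and the second to be close to zero, which removes the fault-and-retry source of extra walks.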

I also tried having each load access an invalid memory location. In this case, the page fault handler raises a SIGSEGV signal, which I handle to allow the program to continue executing. With the LFENCE instruction, I get two MISS_CAUSES_A_WALK events and two WALK_COMPLETED_4K events per access. Without LFENCE, the counts are a little larger per access.

I've also tried using a prefetch instruction instead of a demand load in the loop. The results for the page fault case are the same as for the invalid memory location case (which makes sense because the prefetch fails in both cases): one MISS_CAUSES_A_WALK event and one WALK_COMPLETED_4K event per prefetch. Otherwise, if the prefetch is to a location with a valid in-memory translation, one MISS_CAUSES_A_WALK event and one WALK_COMPLETED_4K event occur per page. Without LFENCE, the counts are a little larger per page.

All experiments were run on the same core. The number of TLB shootdown interrupts that have occurred on that core is nearly zero, so they don't have an impact on the results. I could not find an easy way to measure the number of TLB evictions on the core by the OS, but I don't think this is a relevant factor.
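For reference, one way to check the shootdown count on a given core is to read the `TLB` row of `/proc/interrupts` before and after a run. The sketch below parses that layout and also shows pinning with `os.sched_setaffinity`; the helper names are made up and the sample text is invented for illustration, not taken from my machine.

```python
import os


def tlb_shootdowns(interrupts_text, cpu):
    """Return the TLB shootdown count for one CPU, given the contents
    of /proc/interrupts as a string."""
    lines = interrupts_text.splitlines()
    cpus = lines[0].split()  # header row: CPU0 CPU1 ...
    column = cpus.index(f"CPU{cpu}")
    for line in lines[1:]:
        fields = line.split()
        if fields and fields[0] == "TLB:":
            return int(fields[1 + column])
    raise ValueError("no TLB line found")


def pin_to_cpu(cpu):
    """Restrict the calling process to a single CPU (Linux only)."""
    os.sched_setaffinity(0, {cpu})


# Made-up sample in the /proc/interrupts layout, for illustration only.
sample = """\
           CPU0       CPU1
  0:         35          0   IO-APIC   2-edge      timer
TLB:         12        340   TLB shootdowns
"""
print(tlb_shootdowns(sample, 0))
print(tlb_shootdowns(sample, 1))
```

On a live system, the text would come from reading `/proc/interrupts` once before and once after the experiment and subtracting the two values for the pinned core.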

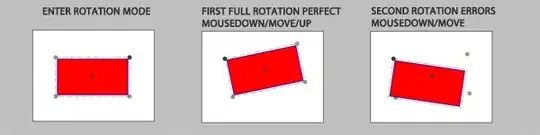

The Spike

Also as the graph above shows, there is a special pattern for small strides. In addition, there is a very weird pattern (spike) around stride 220. I was able to reproduce these patterns many times. The following graph zooms in on that weird pattern so you can see it clearly. I think the reason for this pattern is OS activity and not the way the performance events work or some microarchitectural effect, but I'm not sure.

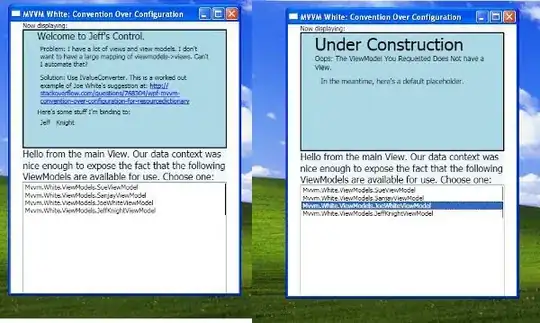

The Impact of Loop Unrolling

@BeeOnRope suggested placing LFENCE in the loop and unrolling it zero or more times to better understand the effect of speculative, out-of-order execution on the event counts. The following graphs show the results. Each line represents a specific load stride when the loop is unrolled 0-63 times (1-64 add/load instruction pairs in a single iteration). The y-axis is normalized per page. The number of pages accessed is the same as the number of minor page faults.
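To be clear about what "unrolling degree" means here, the following sketch generates the NASM text of the loop body for a given degree. It assumes one LFENCE is placed after every load when LFENCE is enabled, which is my reading of the setup rather than something spelled out above; the generator function itself is hypothetical and only for illustration.

```python
def unrolled_loop(unroll, lfence, stride_symbol="STRIDE"):
    """Return NASM source for the loop with `unroll` extra add/load pairs
    (so unroll=0 is the original loop with a single load)."""
    lines = [".loop:"]
    for _ in range(unroll + 1):
        lines.append(f"    add rsi, {stride_symbol}")
        lines.append("    mov eax, dword [rsi]")
        if lfence:
            # Assumption: one LFENCE directly after each load.
            lines.append("    lfence")
    lines.append("    dec ebp")
    lines.append("    jg .loop")
    return "\n".join(lines)


print(unrolled_loop(unroll=1, lfence=True))
```

With `unroll=0` and `lfence=False` this reproduces the original loop, and `unroll=63` gives the 64 add/load pairs per iteration mentioned above.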

I've also run the experiments without LFENCE but with different unrolling degrees. I've not made the graphs for these, but I'll discuss the major differences below.

We can conclude the following:

- When the load stride is less than about 128 bytes, MISS_CAUSES_A_WALK and WALK_COMPLETED_4K exhibit higher variation across different unrolling degrees. Larger strides have smooth curves where MISS_CAUSES_A_WALK converges to 3 or 5 and WALK_COMPLETED_4K converges to 3 or 4.
- LFENCE only seems to make a difference when the unrolling degree is exactly zero (i.e., there is one load per iteration). Without LFENCE, the results (as discussed above) are 5 MISS_CAUSES_A_WALK and 4 WALK_COMPLETED_4K events per page. With LFENCE, they both become 3 per page. For larger unrolling degrees, the event counts increase gradually on average. When the unrolling degree is at least 1 (i.e., there are at least two loads per iteration), LFENCE makes essentially no difference. This means that the two new graphs above are the same for the case without LFENCE except when there is one load per iteration. By the way, the weird spike only occurs when the unrolling degree is zero and there is no LFENCE.
- In general, unrolling the loop reduces the number of triggered and completed walks, especially when the unrolling degree is small, no matter what the load stride is. Without unrolling, LFENCE can be used to essentially get the same effect. With unrolling, there is no need to use LFENCE. In any case, execution time with LFENCE is much higher, so using it to reduce page walks will significantly reduce performance, not improve it.