I do not find the other answers satisfactory, mainly because you should account for both the time series structure of the data and the cross-sectional information. You can't simply treat the features at each instance as a single series. Doing so would inevitably lead to a loss of information and is, simply put, statistically wrong.

That said, if you really need to go for PCA, you should at least preserve the time series information:

PCA

Following silgon, we first stack the data into a single numpy array:

import numpy as np
import pandas as pd

# your 1000 pandas instances, each with 300 time steps and 20 features
instances = [pd.DataFrame(data=np.random.normal(0, 1, (300, 20))) for _ in range(1000)]

# stack the instances into one numpy array of shape (1000, 300, 20)
data = np.array([d.values for d in instances])

This makes applying PCA way easier:

from sklearn.decomposition import PCA

# create one big data panel with 20 series and 300,000 data points
reshaped_data = data.reshape((1000 * 300, 20))

n_comp = 10  # number of features to keep after dimensionality reduction

pca = PCA(n_components=n_comp)  # create the PCA object

pca.fit(reshaped_data)  # fit it to the reshaped data

transformed_data = np.empty([1000, 300, n_comp])

# apply the fitted transformation to each instance of the original dataset
for i in range(len(data)):
    transformed_data[i] = pca.transform(data[i])

Final output shape: transformed_data.shape is (1000, 300, n_comp).
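As a side note, the per-instance loop can be replaced by a single call, since PCA transforms each row independently. The sketch below is only an illustration under assumed, smaller stand-in sizes (50 instances instead of 1000) and checks that both routes give the same array; the variable names are mine, not from the question.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# Small stand-in sizes (assumption: the real data would be 1000 x 300 x 20)
n_instances, n_steps, n_features, n_comp = 50, 30, 20, 10
data = rng.normal(size=(n_instances, n_steps, n_features))

# Fit PCA on the stacked panel: one row per (instance, time step)
pca = PCA(n_components=n_comp)
pca.fit(data.reshape(-1, n_features))

# Route 1: transform instance by instance
looped = np.empty((n_instances, n_steps, n_comp))
for i in range(n_instances):
    looped[i] = pca.transform(data[i])

# Route 2: transform the stacked panel once, then restore the 3-D shape
vectorized = pca.transform(data.reshape(-1, n_features)).reshape(
    n_instances, n_steps, n_comp
)

same_result = np.allclose(looped, vectorized)
```

Either way, the time dimension (300 steps per instance) is left untouched; only the 20 features are compressed.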

PLS

However, you can (and should, in my opinion) construct the factors from your matrix of features using partial least squares (PLS). This also gives a further dimensionality reduction.

Let's say your data has the following shape: T=1000, N=300, P=20.

Then we have y=[T,1], X=[N,P,T].

For this to work, the matrices must be conformable for multiplication. In our case we will have y=[T,1]=[1000,1] and Xpls=[T,P*N]=[1000,20*300].

Intuitively, we are creating a new feature for each lag (299=N-1) of each of the P=20 basic features.

I.e. for a given instance i, we will have something like this:

Instance $i$:

$x_{1,i},\ x_{1,i-1},\ \dots,\ x_{1,i-j},\ x_{2,i},\ x_{2,i-1},\ \dots,\ x_{2,i-j},\ \dots,\ x_{P,i},\ x_{P,i-1},\ \dots,\ x_{P,i-j}$ with $j=1,\dots,N-1$.
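To make the column layout concrete, here is a tiny sketch with made-up sizes (4 instances, 3 time steps, 2 features). It shows that a plain `reshape` on an array of shape (instances, time steps, features) groups the flattened columns by time step, while swapping the last two axes first groups them by feature, as in the listing above. PLS does not care about the column order, so either layout yields the same factors; the variable names here are only illustrative.

```python
import numpy as np

# Tiny stand-ins for (1000, 300, 20); values are just a running counter
n_instances, n_steps, n_features = 4, 3, 2
data = np.arange(n_instances * n_steps * n_features).reshape(
    n_instances, n_steps, n_features
)

# Plain reshape: columns run over all features at step 0, then step 1, ...
time_major = data.reshape(n_instances, n_steps * n_features)

# Swap the time and feature axes first: columns run over all time steps
# of feature 0, then all time steps of feature 1, ...
feature_major = data.transpose(0, 2, 1).reshape(n_instances, n_features * n_steps)

first_time_major = time_major[0].tolist()        # [0, 1, 2, 3, 4, 5]
first_feature_major = feature_major[0].tolist()  # [0, 2, 4, 1, 3, 5]
```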

Implementing PLS in Python is pretty straightforward.

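import numpy as np
import pandas as pd
from sklearn.cross_decomposition import PLSRegression

# your 1000 pandas instances, each with 300 time steps and 20 features
instances = [pd.DataFrame(data=np.random.normal(0, 1, (300, 20))) for _ in range(1000)]

# stack the instances into one numpy array of shape (1000, 300, 20)
data = np.array([d.values for d in instances])

# flatten each instance into a single row of 300*20 features
reshaped_data = data.reshape((1000, 20 * 300))

# placeholder target with one value per instance; replace it with your own y
y = np.random.normal(0, 1, (1000, 1))

n_comp = 10

pls_obj = PLSRegression(n_components=n_comp)

# fit_transform returns (x_scores, y_scores) when y is given; keep the x scores
factorsPLS = pls_obj.fit_transform(reshaped_data, y)[0]

factorsPLS.shape

Out[]: (1000, n_comp)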

What is PLS doing?

To make things easier to grasp, we can look at the three-pass regression filter (3PRF) (working paper here). Kelly and Pruitt show that PLS is just a special case of their 3PRF:

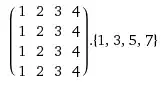

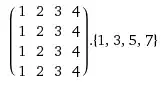


Here Z represents a matrix of proxies. We don't have those, but luckily Kelly and Pruitt have shown that we can live without them. All we need to do is make sure that the regressors (our features) are standardized and run the first two regressions without an intercept. Doing so, the proxies are selected automatically.

So, in short, PLS allows you to:

- achieve a greater dimensionality reduction than PCA: one row of n_comp factors per instance, (1000, n_comp), instead of (1000, 300, n_comp);

- account for both the cross-sectional variability among the features and the time series information of each series when creating the factors.