I am dealing with very large CSV files of 1-10 GB. I have figured out that I need to use the ff package to read in the data. However, this does not seem to work. I suspect the problem is that I have approximately 73 000 columns, and since ff reads row-wise, the size is too high for R's memory. My computer has 128 GB of memory, so the hardware should not be a limitation.

Is there any way of reading the data column-wise instead?

Note: In each file there are 10 rows of text which need to be removed before the file can be read as a matrix successfully. I have previously dealt with this by using `read.csv(file, skip=10, header=T, fill=T)` on smaller files of the same type.
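For reference, here is a minimal sketch of that approach on a tiny stand-in file. The file contents and the column names (`id`, `a`, `b`, `c`) are made up for illustration; the real files have the same layout (10 text lines, then a header and the data) but far more columns.

```r
# Build a small stand-in file: 10 lines of text, then a header row and data.
# The contents are invented for illustration only.
tmp <- tempfile(fileext = ".csv")
writeLines(c(
  paste("metadata line", 1:10),
  "id,a,b,c",
  "1,0.1,0.2,0.3",
  "2,0.4,0.5,0.6"
), tmp)

# Skip the 10 text lines, treat the next line as the header,
# and pad any short rows with NA (fill = TRUE).
dat <- read.csv(tmp, skip = 10, header = TRUE, fill = TRUE)

dim(dat)
```

This works fine at this scale; the problem only shows up with the full-size files.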

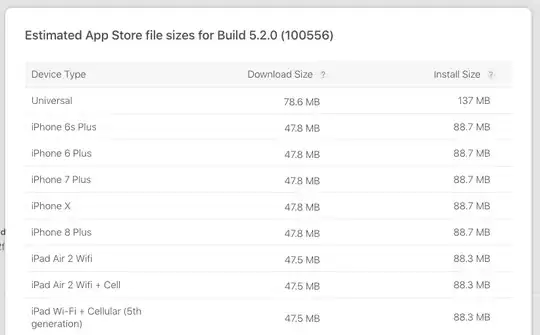

Here is a picture of how a smaller version of the data sets looks in excel: