For downsampling a signal, I use a FIR filter + decimation stage (that's practical a strided convolution). The big advantage of combining filtering and decimation is the reduced computational cost (by the decimation factor).

With a straight forward OpenCL implementation, I am not able to benefit from the decimation. Quite to the contrary: The convolution with a decimation factor of 4 is 25% slower than the full convolution.

Kernel Code:

__kernel void decimation(__constant float *input,

__global float *output,

__constant float *coefs,

const int taps,

const int decimationFactor) {

int posOutput = get_global_id(0);

float result = 0;

for (int tap=0; tap<taps; tap++) {

int posInput = (posOutput * decimationFactor) - tap;

result += input[posInput] * coefs[tap];

}

output[posOutput] = result;

}

I guess it is due to the uncoalesced memory access. Though I can not think of a solution to fix the problem. Any ideas?

Edit: I tried Dithermaster's solution to split the problem into coalesced reads to shared local memory and convolution from local memory:

__kernel void decimation(__constant float *input,

__global float *output,

__constant float *coefs,

const int taps,

const int decimationFactor,

const int bufferSize,

__local float *localInput) {

const int posOutput = get_global_id(0);

const int localSize = get_local_size(0);

const int localId = get_local_id(0);

const int groupId = get_group_id(0);

const int localInputOffset = taps-1;

const int localInputOverlap = taps-decimationFactor;

const int localInputSize = localInputOffset + localSize * decimationFactor;

// 1. transfer global input data to local memory

// read global input to local input (only overlap)

if (localId < localInputOverlap) {

int posInputStart = ((groupId*localSize) * decimationFactor) - (taps-1);

int posInput = posInputStart + localId;

int posLocalInput = localId;

localInput[posLocalInput] = 0.0f;

if (posInput >= 0)

localInput[posLocalInput] = input[posInput];

}

// read remaining global input to local input

// 1. alternative: strided read

// for (int i=0; i<decimationFactor; i++) {

// int posInputStart = (groupId*localSize) * decimationFactor;

// int posInput = posInputStart + localId * decimationFactor - i;

// int posLocalInput = localInputOffset + localId * decimationFactor - i;

// localInput[posLocalInput] = 0.0f;

// if ((posInput >= 0) && (posInput < bufferSize*decimationFactor))

// localInput[posLocalInput] = input[posInput];

// }

// 2. alternative: coalesced read (in blocks of localSize)

for (int i=0; i<decimationFactor; i++) {

int posInputStart = (groupId*localSize) * decimationFactor;

int posInput = posInputStart - (decimationFactor-1) + i*localSize + localId;

int posLocalInput = localInputOffset - (decimationFactor-1) + i*localSize + localId;

localInput[posLocalInput] = 0.0f;

if ((posInput >= 0) && (posInput < bufferSize*decimationFactor))

localInput[posLocalInput] = input[posInput];

}

// 2. wait until every thread completed

barrier(CLK_LOCAL_MEM_FENCE);

// 3. convolution

if (posOutput < bufferSize) {

float result = 0.0f;

for (int tap=0; tap<taps; tap++) {

int posLocalInput = localInputOffset + (localId * decimationFactor) - tap;

result += localInput[posLocalInput] * coefs[tap];

}

output[posOutput] = result;

}

}

Big improvement! But still, the performance does not correlate with the overall operations (not proportional to the decimation factor):

- speedup for full convolution compared to first approach: ~12 %

- computatoin time for decimation compared to full convolution:

- decimation factor 2: 61 %

- decimation factor 4: 46 %

- decimation factor 8: 53 %

- decimation factor 16: 68 %

The performance has a optimum for a decimation factor of 4. Why is that? Any ideas for further improvements?

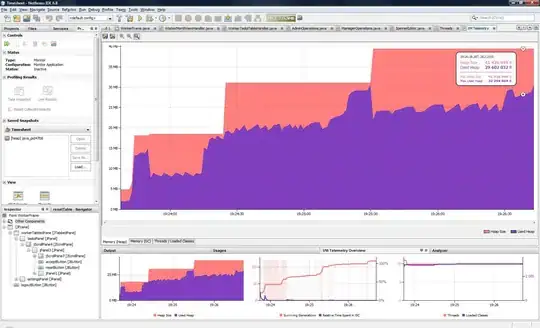

Edit 2: Diagram with shared local memory:

Edit 3: Comparison of the performance for the 3 different implementations