This network contains an input layer and an output layer, with no nonlinearities, so the output is just a linear combination of the input. I am training it with a regression loss. I generated some random 1D test data from a simple linear function, with Gaussian noise added. The problem is that the loss function doesn't converge to zero.
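Written out, and assuming a single scalar weight $w$ and scalar bias $b$ (an assumption for illustration; the code in this post stores them as arrays), the regression loss over $n$ samples $(x_i, y_i)$ and its gradients would be:

$$
L(w, b) = \frac{1}{2n} \sum_{i=1}^{n} \left( y_i - w x_i - b \right)^2
$$

$$
\frac{\partial L}{\partial w} = \frac{1}{n} \sum_{i=1}^{n} \left( w x_i + b - y_i \right) x_i,
\qquad
\frac{\partial L}{\partial b} = \frac{1}{n} \sum_{i=1}^{n} \left( w x_i + b - y_i \right)
$$

Note that the gradient with respect to $w$ multiplies the error by the input $x_i$, not by the prediction.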

import numpy as np

import matplotlib.pyplot as plt

n = 100

alp = 1e-4

a0 = np.random.randn(100,1) # Also x

y = 7*a0+3+np.random.normal(0,1,(100,1))

w = np.random.randn(100,100)*0.01

b = np.random.randn(100,1)

def compute_loss(a1,y,w,b):

return np.sum(np.power(y-w*a1-b,2))/2/n

def gradient_step(w,b,a1,y):

w -= (alp/n)*np.dot((a1-y),a1.transpose())

b -= (alp/n)*(a1-y)

return w,b

loss_vec = []

num_iterations = 10000

for i in range(num_iterations):

a1 = np.dot(w,a0)+b

loss_vec.append(compute_loss(a1,y,w,b))

w,b = gradient_step(w,b,a1,y)

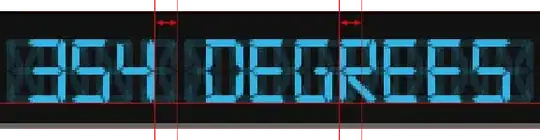

plt.plot(loss_vec)
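For comparison, here is a minimal sketch of the same experiment. It is not a definitive fix: it assumes one scalar weight and one scalar bias shared by all 100 samples. The fixed seed, the larger step size `alp = 0.1`, the 1000 iterations, and the name `x` in place of `a0` are also assumptions made for illustration. With noise of standard deviation 1 in `y`, the loss in this scaling should level off at roughly 0.5 rather than at zero.

```python
import numpy as np

rng = np.random.default_rng(0)   # fixed seed (assumption, for reproducibility)

n = 100
alp = 0.1                        # larger step than 1e-4 (assumption)
x = rng.standard_normal((n, 1))  # n samples of a 1D input
y = 7 * x + 3 + rng.normal(0, 1, (n, 1))

w, b = 0.0, 0.0                  # one scalar weight and one scalar bias

def compute_loss(x, y, w, b):
    # Mean squared error with the same 1/(2n) scaling as the code above
    return np.sum((y - w * x - b) ** 2) / (2 * n)

def gradient_step(w, b, x, y):
    err = w * x + b - y              # prediction minus target, shape (n, 1)
    w -= alp * np.sum(err * x) / n   # dL/dw multiplies the error by the input x
    b -= alp * np.sum(err) / n       # dL/db
    return w, b

loss_vec = []
for _ in range(1000):
    loss_vec.append(compute_loss(x, y, w, b))
    w, b = gradient_step(w, b, x, y)
```

Plotting `loss_vec` with `plt.plot(loss_vec)` should then show the loss dropping and flattening out, with `w` and `b` ending up near 7 and 3.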