I'm trying to consume records from a MySQL table with three columns: Axis (`int`), Price (`decimal(14,4)`) and lastname (`varchar(50)`).

I inserted one record with the values (1, 5.0000, John).

The Java consumer code further down reads the Avro records from the topic that a MySQL source connector on the Confluent Platform writes to. It receives the decimal column Price as a `java.nio.HeapByteBuffer`, so I can't get at the actual value.

Is there a way to convert the received value to a Java `BigDecimal` or `double`?
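The buffer is not opaque. Here is a minimal sketch of what it likely holds, assuming the connector serializes Price with Avro's `decimal` logical type. That is how Confluent's `AvroConverter` is generally understood to represent Kafka Connect `Decimal` fields. Under that assumption, the bytes are the *unscaled* value as a big-endian two's-complement integer, and the scale is stored in the schema rather than in the bytes. The class name `DecimalBytes` and the hard-coded scale of 4 (taken from `decimal(14,4)`) are illustrative assumptions.

```java
import java.math.BigDecimal;
import java.math.BigInteger;
import java.nio.ByteBuffer;

public class DecimalBytes {

    // Decodes an Avro "decimal" value: the bytes are the unscaled value as a
    // big-endian two's-complement integer; the scale comes from the schema.
    static BigDecimal decode(ByteBuffer buffer, int scale) {
        ByteBuffer copy = buffer.duplicate(); // read without moving the caller's position
        byte[] bytes = new byte[copy.remaining()];
        copy.get(bytes);
        return new BigDecimal(new BigInteger(bytes), scale);
    }

    public static void main(String[] args) {
        int scale = 4; // assumption: matches the column definition decimal(14,4)
        // Simulate what the producer writes for 5.0000: the unscaled value 50000 as bytes
        ByteBuffer price = ByteBuffer.wrap(new BigDecimal("5.0000").unscaledValue().toByteArray());
        BigDecimal value = decode(price, scale);
        System.out.println(value);               // 5.0000
        System.out.println(value.doubleValue()); // 5.0
    }
}
```

`BigDecimal` keeps the exact value. Only call `doubleValue()` if a lossy floating-point approximation is acceptable.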

Here is the MySQL source connector configuration:

```json
{
  "name": "mysql-source",
  "config": {
    "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
    "key.converter": "io.confluent.connect.avro.AvroConverter",
    "key.converter.schema.registry.url": "http://localhost:8081",
    "value.converter": "io.confluent.connect.avro.AvroConverter",
    "value.converter.schema.registry.url": "http://localhost:8081",
    "incrementing.column.name": "Axis",
    "tasks.max": "1",
    "table.whitelist": "ticket",
    "mode": "incrementing",
    "topic.prefix": "mysql-",
    "name": "mysql-source",
    "validate.non.null": "false",
    "connection.url": "jdbc:mysql://localhost:3306/ticket?user=user&password=password"
  }
}
```

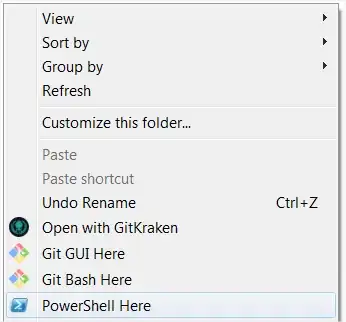

Here is the consumer code:

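```java
import java.io.IOException;
import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;

import org.apache.avro.generic.GenericRecord;
import org.apache.kafka.clients.consumer.Consumer;
import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

public class TicketConsumer {

    public static void main(String[] args) throws InterruptedException, IOException {
        Properties props = new Properties();
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
        props.put(ConsumerConfig.GROUP_ID_CONFIG, "group1");
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,
                "io.confluent.kafka.serializers.KafkaAvroDeserializer");
        props.put("schema.registry.url", "http://localhost:8081");
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");

        // The JDBC source connector names the topic topic.prefix + table name: "mysql-" + "ticket"
        String topic = "mysql-ticket";

        final Consumer<String, GenericRecord> consumer = new KafkaConsumer<>(props);
        consumer.subscribe(Arrays.asList(topic));
        try {
            while (true) {
                // poll(Duration) needs kafka-clients 2.0+; older clients only have poll(long)
                ConsumerRecords<String, GenericRecord> records = consumer.poll(Duration.ofMillis(100));
                for (ConsumerRecord<String, GenericRecord> record : records) {
                    // Price arrives here as a java.nio.HeapByteBuffer, not as a number
                    System.out.printf("value = %s%n", record.value().get("Price"));
                }
            }
        } finally {
            consumer.close();
        }
    }
}
```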
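Inside the consumer loop, the field value comes back as a plain `Object`. A defensive conversion might look like the sketch below. It assumes Price can be `null` (a nullable column), a `ByteBuffer` (as observed above), or already a `BigDecimal` (in case a logical-type conversion is applied somewhere upstream). The helper name `PriceField.toBigDecimal` and the scale of 4 are hypothetical choices for illustration.

```java
import java.math.BigDecimal;
import java.math.BigInteger;
import java.nio.ByteBuffer;

public class PriceField {

    // Hypothetical helper: turns the Object returned by GenericRecord.get("Price")
    // into a BigDecimal. The scale must match the Avro schema (assumed 4 here).
    static BigDecimal toBigDecimal(Object fieldValue, int scale) {
        if (fieldValue == null) {
            return null; // nullable column with no value
        }
        if (fieldValue instanceof BigDecimal) {
            return (BigDecimal) fieldValue; // already converted upstream
        }
        if (fieldValue instanceof ByteBuffer) {
            // duplicate() so the record's buffer position is left untouched
            ByteBuffer copy = ((ByteBuffer) fieldValue).duplicate();
            byte[] unscaled = new byte[copy.remaining()];
            copy.get(unscaled);
            return new BigDecimal(new BigInteger(unscaled), scale);
        }
        throw new IllegalArgumentException(
                "Unexpected type for decimal field: " + fieldValue.getClass().getName());
    }

    public static void main(String[] args) {
        Object raw = ByteBuffer.wrap(new byte[] {0x00, (byte) 0xC3, 0x50}); // unscaled 50000
        BigDecimal price = toBigDecimal(raw, 4);
        System.out.printf("value = %s (as double: %s)%n", price, price.doubleValue());
    }
}
```

In the loop this would be called as `PriceField.toBigDecimal(record.value().get("Price"), 4)`. Rather than hard-coding the scale, it can probably be read from the record's schema. With Avro on the classpath, `LogicalTypes.Decimal#getScale()` on the field schema's logical type should return it; for a nullable field, you would first pick the non-null branch of the union. Avro's `Conversions.DecimalConversion#fromBytes` is an alternative to the manual decoding. Verify both against the Avro version you actually use.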