I am having difficulty getting Pentaho PDI to access Hadoop. After some research, I found that Pentaho uses adapters called shims. I think of them as connectors to Hadoop, much like JDBC drivers provide database connectivity in the Java world.

It seems that the new version of PDI (v8.1) ships with four shims installed by default, and they all target specific distributions from big data vendors such as Hortonworks, MapR and Cloudera.

When I researched Pentaho PDI Big Data further, I found that earlier versions supported "vanilla" installations of Apache Hadoop.

I downloaded Apache Hadoop from the open source site and installed it on Windows, so my installation would be considered a "vanilla" Hadoop installation.

In PDI I used the Hortonworks shim. When I tested the connection, it reported that it successfully connected to Hadoop, BUT it could not find the user home directory or the root directory.

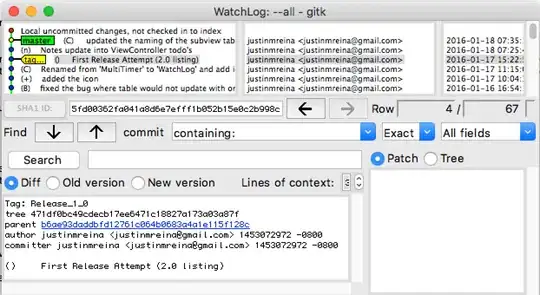

I have screen shots of the errors below:

So the errors seem to come from directory access:

1. User Home Directory Access
2. Root Directory Access
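One way to reproduce those two checks outside PDI is to ask the NameNode directly whether `/` and the user's home directory exist. This is a minimal sketch under several assumptions, not what the Hortonworks shim actually runs: it uses the WebHDFS REST API (`op=GETFILESTATUS`) instead of the HDFS RPC port that PDI talks to, assumes WebHDFS is reachable at `http://localhost:9870` (the Hadoop 3.x NameNode HTTP default; Hadoop 2.x used 50070), assumes simple (non-Kerberos) authentication via the `user.name` parameter, and relies on Hadoop's convention that a user's home directory is `/user/<name>`. The helper names are hypothetical.

```python
import json
import urllib.error
import urllib.parse
import urllib.request

# Assumption: WebHDFS on localhost:9870 (Hadoop 3.x default; Hadoop 2.x used 50070).
# Adjust to match dfs.namenode.http-address if your setup differs.
WEBHDFS_BASE = "http://localhost:9870/webhdfs/v1"


def home_directory(user: str) -> str:
    """Hadoop's convention for a user's home directory: /user/<name>."""
    return f"/user/{user}"


def status_url(path: str, user: str, base: str = WEBHDFS_BASE) -> str:
    """Build a WebHDFS GETFILESTATUS URL for an absolute HDFS path."""
    query = urllib.parse.urlencode({"op": "GETFILESTATUS", "user.name": user})
    return f"{base}{urllib.parse.quote(path)}?{query}"


def check_access(path: str, user: str) -> str:
    """Ask the NameNode (via WebHDFS) whether `path` exists and describe the result."""
    try:
        with urllib.request.urlopen(status_url(path, user), timeout=5) as resp:
            info = json.load(resp)["FileStatus"]
        return f"OK: {path} is a {info['type']} owned by {info['owner']}"
    except urllib.error.HTTPError as err:
        # A 404 here means the path does not exist, e.g. /user/<name> was never created
        return f"FAIL: {path} -> HTTP {err.code}"
    except OSError as err:
        # URLError (connection refused, DNS) and socket timeouts are OSError subclasses
        return f"FAIL: cannot reach WebHDFS ({err})"
```

For example, `print(check_access("/", "alice"))` followed by `print(check_access(home_directory("alice"), "alice"))`, with `alice` replaced by the Windows account PDI runs as. If the first call succeeds and the second returns HTTP 404, that would suggest the home directory simply has not been created yet.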

I am using the Hortonworks shim, and I know it expects some default directories (I have used the Hortonworks Hadoop virtual machine before).

My questions are:

1. If I use the Hortonworks shim to connect to my "vanilla" Hadoop installation, do I need to tweak some configuration file to set the default directories?
2. If I cannot use the Hortonworks shim, how do I install a "vanilla" Hadoop shim?

I also found this related post from 2013 here on Stack Overflow:

I'm not sure how relevant it is.

I hope someone with experience in this can help out.

Additional information I forgot to add: my Hadoop `core-site.xml` file contains the following:

    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
      </property>
    </configuration>
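The PDI Hadoop cluster dialog asks for the HDFS hostname and port as separate fields, and these should presumably match `fs.defaultFS` above. A minimal sketch (Python standard library only, so it runs without a Hadoop client; the function names are hypothetical) that reads the property from a `core-site.xml` string and splits it into host and port:

```python
import xml.etree.ElementTree as ET
from typing import Optional, Tuple
from urllib.parse import urlparse

# The core-site.xml contents from the question, inlined for the example
CORE_SITE = """<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>"""


def read_property(xml_text: str, name: str) -> Optional[str]:
    """Return the <value> of the Hadoop property called `name`, or None if absent."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None


def namenode_address(xml_text: str) -> Tuple[str, int]:
    """Split fs.defaultFS (hdfs://host:port) into the host and port to enter in PDI."""
    uri = read_property(xml_text, "fs.defaultFS")
    if uri is None:
        raise ValueError("fs.defaultFS is not set in core-site.xml")
    parsed = urlparse(uri)
    if parsed.scheme != "hdfs" or parsed.hostname is None:
        raise ValueError(f"unexpected fs.defaultFS value: {uri!r}")
    # Assumption: fall back to 8020, the usual NameNode RPC port, if none is given
    return parsed.hostname, parsed.port or 8020
```

With the file above, `namenode_address(CORE_SITE)` returns `("localhost", 9000)`, i.e. hostname `localhost` and port `9000` in the cluster dialog.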

That is the entire file.