I am running Elasticsearch 6.2.4. I have a program that will automatically create an index for me as well as the mappings necessary for my data. For this issue, I created an index called "landsat" but it needs to actually be named "landsat_8", so I chose to reindex. The original "landsat" index has 2 shards and 0 read replicas. The store size is ~13.4gb with ~6.6gb per shard and the index holds just over 515k documents.

I created a new index called "landsat_8" with 5 shards, 1 read replica, and started a reindex with no special options. On a very small Elastic Cloud cluster (4GB RAM), it finished in 8 minutes. It was interesting to see that the final store size was only 4.2gb, yet it still held all 515k documents.

After it was finished, I realized that I failed to create my mappings before reindexing, so I blew it away and started over. I was shocked to find that after an hour, the /cat/_indices endpoint showed that only 7.5gb of data and 154,800 documents had been reindexed. 4 hours later, the entire job seemed to have died at 13.1gb, but it only showed 254,000 documents had been reindexed.

On this small 4gb cluster, this reindex operation was maxing out CPU. I increased the cluster to the biggest one Elastic Cloud offered (64gb ram), 5 shards, 0 RR and started the job again. This time, I set the refresh_interval on the new index to -1 and changed the size for the reindex operation to 2000. Long story short, this job ended in somewhere between 1h10m and 1h19m. However, this time I ended up with a total store size of 25gb, where each shard held ~5gb.

I'm very confused as to why the reindex operation causes such wildly different results in store size and reindex performance. Why, when I don't explicitly define any mappings and let ES automatically create mappings, is the store size so much smaller? And why, when I use the exact same mappings as the original index, is the store so much bigger?

Any advice would be greatly appreciated. Thank you!

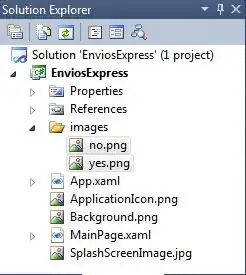

UPDATE 1: Here are the only differences in mappings:

The left image is "landsat" and the right image is "landsat_8". There is a root level "type" field and a nested "properties.type" field in the original "landsat" index. I forgot one of my goals was to remove the field "properties.type" from the data during the reindex. I seem to have been successful in doing so, but at the same time, accidentally renamed the root-level "type" field mapping to "provider", thus "landsat_8" has an unused "provider" mapping and an auto-created "type" mapping.

So there are some problems here, but I wouldn't think this would nearly double my store size...