I am performing a sentiment analysis using R, and I was wondering how to split the wordcloud into two parts, highlighting positive and negative words. I am quite new to R and the online solutions didn't help me. That is the code:

text <- readLines("product1.txt")

library("tm")

library("SnowballC")

library("wordcloud")

library("RColorBrewer")

docs <- Corpus(VectorSource(text))

toSpace <- content_transformer(function (x , pattern ) gsub(pattern, " ", x))

docs <- tm_map(docs, toSpace, "/")

docs <- tm_map(docs, toSpace, "@")

docs <- tm_map(docs, toSpace, "\\|")

docs <- tm_map(docs, content_transformer(tolower))

docs <- tm_map(docs, removeNumbers)

docs <- tm_map(docs, removeWords, stopwords("english"))

docs <- tm_map(docs, removeWords, c("don", "s", "t"))

docs <- tm_map(docs, removePunctuation)

docs <- tm_map(docs, stripWhitespace)

dtm <- TermDocumentMatrix(docs)

m <- as.matrix(dtm)

v <- sort(rowSums(m),decreasing=TRUE)

d <- data.frame(word = names(v),freq=v)

head(d, 10)

set.seed(1234)

wordcloud(words = d$word, freq = d$freq, min.freq = 1,

max.words=200, random.order=FALSE, rot.per=0.35,

colors=brewer.pal(8, "Dark2"))

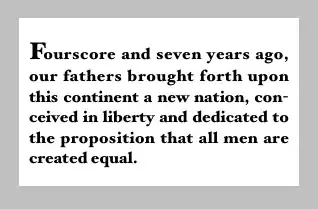

And this is the result I would like to achieve:

Thanks for everyone will help me.

EDIT:

docs <- structure(list(content = c("This product so far has not disappointed. My children love to use it and I like the ability to monitor control what content they see with ease.",

"Great for beginner or experienced person. Bought as a gift and she loves it.",

"Inexpensive tablet for him to use and learn on, step up from the NABI. He was thrilled with it, learn how to Skype on it already.",

"I have had my Fire HD 8 two weeks now and I love it. This tablet is a great value.We are Prime Members and that is where this tablet SHINES. I love being able to easily access all of the Prime content as well as movies you can download and watch laterThis has a 1280/800 screen which has some really nice look to it its nice and crisp and very bright infact it is brighter then the ipad pro costing $900 base model. The build on this fire is INSANELY AWESOME running at only 7.7mm thick and the smooth glossy feel on the back it is really amazing to hold its like the futuristic tab in ur hands."

), meta = structure(list(language = "en"), class = "CorpusMeta"),

dmeta = structure(list(), .Names = character(0), row.names = c(NA,

6L), class = "data.frame")), class = c("SimpleCorpus", "Corpus"

))