This seemed like an interesting question, so I thought I would have a go at answering it with a fully working example that you and others can use as a basis for future development.

It may not be the right approach, but hopefully it will be useful.

Having said this, I'm not entirely sure why you want to use a Document Browser to allow a user to choose ARWorldMaps. A simpler approach could be to store these in Core Data and allow selection in a UITableView, for example. Or you could incorporate the logic below into something similar, e.g. when a custom file is opened, save it to Core Data and present all received files that way.

Anyway, here is something to begin your exploration of this topic in more detail. Please note that it is in no way optimised, although it should be more than enough to point you in the right direction ^______^.

For your information:

> ARWorldMap conforms to the NSSecureCoding protocol, so you can convert a world map to or from a binary data representation using the NSKeyedArchiver and NSKeyedUnarchiver classes.
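As a minimal sketch of that round trip, the two generic helpers below wrap NSKeyedArchiver and NSKeyedUnarchiver with secure coding switched on. The names `encodeSecurely` and `decodeSecurely` are hypothetical, not part of ARKit or Foundation. They are exercised with NSString so they run without a device; in the app you would pass the ARWorldMap itself, e.g. `encodeSecurely(worldMap)` and `decodeSecurely(ARWorldMap.self, from: data)`.

```swift
import Foundation

/// Hypothetical helper: archives any NSSecureCoding object into Data with secure coding enabled.
func encodeSecurely<T: NSObject & NSSecureCoding>(_ object: T) throws -> Data {
    try NSKeyedArchiver.archivedData(withRootObject: object, requiringSecureCoding: true)
}

/// Hypothetical helper: unarchives Data, allowing only the expected class.
/// Throws if the archive's root object is of some other class.
func decodeSecurely<T: NSObject & NSSecureCoding>(_ type: T.Type, from data: Data) throws -> T? {
    try NSKeyedUnarchiver.unarchivedObject(ofClass: type, from: data)
}
```

Passing the concrete class to `unarchivedObject(ofClass:from:)` is what keeps the decode "secure": an archive whose root object is of a different class is rejected instead of being instantiated.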

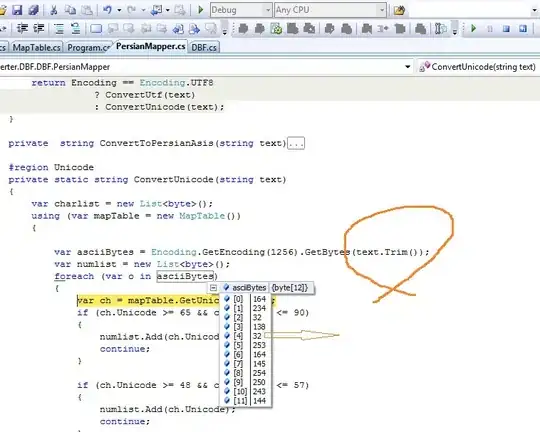

As we want to use a custom UTI to save our ARWorldMap, we first need to set that up in our Info.plist file, declaring our UTI as conforming to public.data.

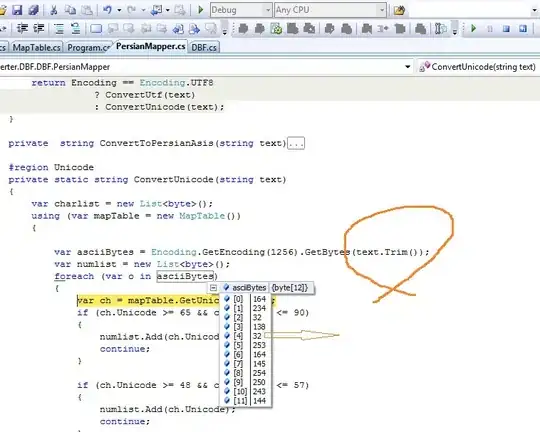

Which looks like this in the project editor:

For more information about doing that, there is a good tutorial from Ray Wenderlich.
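If an entry here is missing or mistyped, the system won't associate .bmarwp files with the app, so it can be worth checking the declarations in code. The sketch below inspects an Info.plist dictionary using the standard keys (`UTExportedTypeDeclarations`, `UTTypeIdentifier`, `UTTypeConformsTo`, `UTTypeTagSpecification`, `CFBundleDocumentTypes`, `LSItemContentTypes`). The identifier `com.example.bmarwp` and the function names are placeholders, since the real identifier only appears in the screenshot; in the app you would pass `Bundle.main.infoDictionary ?? [:]`.

```swift
import Foundation

/// Hypothetical check: does the plist export `identifier` as public.data with the given extension?
func declaresExportedType(in info: [String: Any], identifier: String, fileExtension: String) -> Bool {
    guard let exported = info["UTExportedTypeDeclarations"] as? [[String: Any]] else { return false }
    return exported.contains { declaration in
        guard declaration["UTTypeIdentifier"] as? String == identifier,
              let conformsTo = declaration["UTTypeConformsTo"] as? [String],
              conformsTo.contains("public.data"),
              let tags = declaration["UTTypeTagSpecification"] as? [String: Any],
              let extensions = tags["public.filename-extension"] as? [String]
        else { return false }
        return extensions.contains(fileExtension)
    }
}

/// Hypothetical check: does a document type claim the UTI, so the app is offered for such files?
func declaresDocumentType(in info: [String: Any], identifier: String) -> Bool {
    guard let documentTypes = info["CFBundleDocumentTypes"] as? [[String: Any]] else { return false }
    return documentTypes.contains { ($0["LSItemContentTypes"] as? [String])?.contains(identifier) == true }
}

// Placeholder plist contents mirroring the two entries (identifier is assumed).
let documentType: [String: Any] = [
    "CFBundleTypeName": "BMARWorldMap",
    "LSHandlerRank": "Owner",
    "LSItemContentTypes": ["com.example.bmarwp"]
]
let exportedType: [String: Any] = [
    "UTTypeIdentifier": "com.example.bmarwp",
    "UTTypeDescription": "BMARWorldMap",
    "UTTypeConformsTo": ["public.data"],
    "UTTypeTagSpecification": ["public.filename-extension": ["bmarwp"]]
]
let sampleInfo: [String: Any] = [
    "CFBundleDocumentTypes": [documentType],
    "UTExportedTypeDeclarations": [exportedType]
]
```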

Having done this, we of course need to save our ARWorldMap and allow it to be exported. I have created a typealias describing how we will save our data, i.e. a key of type String (the map's name) and a value of type Data (our archived ARWorldMap):

```swift
typealias BMWorlMapItem = [String: Data]

/// Saves An ARWorldMap To The Documents Directory And Allows It To Be Sent As A Custom FileType
@IBAction func saveWorldMap() {

    //1. Attempt To Get The World Map From Our ARSession
    augmentedRealitySession.getCurrentWorldMap { worldMap, error in

        guard let mapToShare = worldMap else {
            print("Error: \(error?.localizedDescription ?? "No World Map Available")")
            return
        }

        do {
            //2. We Have A Valid ARWorldMap So Archive It
            let data = try NSKeyedArchiver.archivedData(withRootObject: mapToShare, requiringSecureCoding: true)

            //a. Create An Identifier For Our Map
            let mapIdentifier = "BlackMirrorzMap"

            //b. Create An Object To Save The Name And WorldMap
            let contentsToSave: BMWorlMapItem = [mapIdentifier: data]

            //c. Get The Documents Directory
            let documentDirectory = try self.fileManager.url(for: .documentDirectory, in: .userDomainMask, appropriateFor: nil, create: false)

            //d. Create The File Name (No Leading Slash Needed)
            let savedFileURL = documentDirectory.appendingPathComponent("\(mapIdentifier).bmarwp")

            //e. Archive The Container & Save It To The Documents Directory
            let archive = try NSKeyedArchiver.archivedData(withRootObject: contentsToSave, requiringSecureCoding: true)
            try archive.write(to: savedFileURL, options: .atomic)
            print("Successfully Saved Custom ARWorldMap")

            //f. Show An Activity Controller To Share The Item (UI Work Belongs On The Main Queue)
            DispatchQueue.main.async {
                let activityController = UIActivityViewController(activityItems: ["Check Out My Custom ARWorldMap", savedFileURL], applicationActivities: [])
                // Required On iPad, Where The Share Sheet Is Presented As A Popover
                activityController.popoverPresentationController?.sourceView = self.view
                self.present(activityController, animated: true)
            }

        } catch {
            print("Error Saving Custom WorldMap Object == \(error)")
        }
    }
}
```
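The `[String: Data]` container can also be looked at in isolation. This sketch (the function name `packWorldMapItem` is hypothetical) takes map bytes that have already been archived, as `data` is in step 2, and wraps them under a name. Because the map is archived on its own first, the outer archive only contains Foundation types (NSDictionary, NSString, NSData), which keeps the secure unarchive on the receiving side free of ARKit classes.

```swift
import Foundation

typealias BMWorlMapItem = [String: Data]

/// Hypothetical helper: wraps already-archived map bytes in a single-entry
/// [String: Data] container and archives the container with secure coding.
func packWorldMapItem(named name: String, mapData: Data) throws -> Data {
    let item: BMWorlMapItem = [name: mapData]
    return try NSKeyedArchiver.archivedData(withRootObject: item, requiringSecureCoding: true)
}
```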

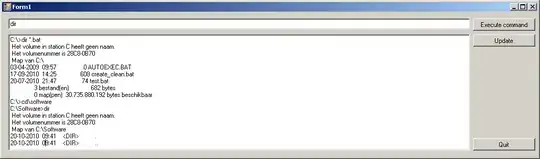

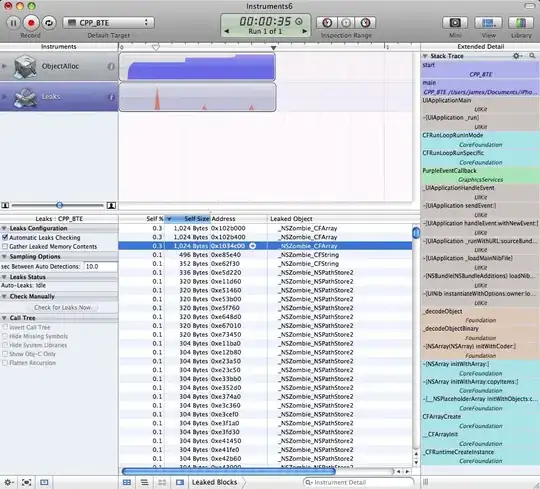

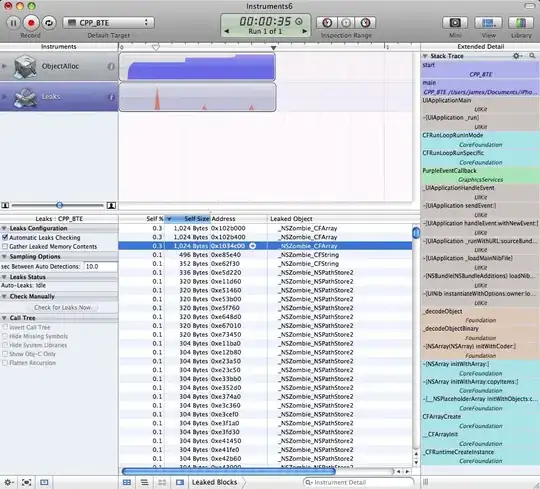

Besides sharing the file, saveWorldMap also writes it to the Documents directory on the user's device, so we can check that everything works as expected.

Once the data is saved, we present the user with a UIActivityViewController so they can send the file via email etc.
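Writing the file and producing the URL that is handed to the share sheet can be pulled out into a small function. This is a sketch that assumes you pass in the Documents directory URL obtained in step c; the name `writeMapFile` is hypothetical. Using `appendingPathExtension` avoids building the path by string interpolation, and the `.atomic` option writes to an auxiliary file first and then moves it into place, so a partially written map is not left behind.

```swift
import Foundation

/// Hypothetical helper: writes `data` to "<directory>/<name>.bmarwp" atomically
/// and returns the file URL, ready to be passed to a UIActivityViewController.
func writeMapFile(_ data: Data, named name: String, in directory: URL) throws -> URL {
    let fileURL = directory
        .appendingPathComponent(name)
        .appendingPathExtension("bmarwp")
    try data.write(to: fileURL, options: .atomic)
    return fileURL
}
```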

Since we can now export our data, we need to handle receiving it when the user chooses to open the file with our app:

This is handled in our AppDelegate like so:

```swift
//---------------------------
//MARK: - Custom File Sharing
//---------------------------

func application(_ app: UIApplication, open url: URL, options: [UIApplication.OpenURLOptionsKey : Any] = [:]) -> Bool {

    //1. Only Handle Our Custom File Type Which Holds Our ARWorldMap
    guard url.pathExtension == "bmarwp" else { return false }

    //2. Post The File URL So Our ViewController Can Import It
    NotificationCenter.default.post(name: NSNotification.Name(rawValue: "MapReceived"), object: nil, userInfo: ["MapData" : url])

    return true
}
```
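A slightly more forgiving version of the extension check is sketched below; the helper name `isWorldMapFile` is hypothetical. It compares case-insensitively and only accepts file URLs. Note also that in apps adopting the UIScene lifecycle (iOS 13+), incoming files arrive through the scene delegate's `scene(_:openURLContexts:)` rather than this AppDelegate method, so the same check would be applied to each `UIOpenURLContext.url` there.

```swift
import Foundation

/// Hypothetical helper: true when `url` is a local file with the expected
/// extension, ignoring case (so "Map.BMARWP" is accepted too).
func isWorldMapFile(_ url: URL, expectedExtension: String = "bmarwp") -> Bool {
    url.isFileURL && url.pathExtension.lowercased() == expectedExtension.lowercased()
}
```

The guard in the AppDelegate would then read `guard isWorldMapFile(url) else { return false }`.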

As you can see in the AppDelegate code, when our custom file is received a notification is posted, which we register for in our ViewController's viewDidLoad, e.g.:

```swift
NotificationCenter.default.addObserver(self, selector: #selector(importWorldMap(_:)), name: NSNotification.Name(rawValue: "MapReceived"), object: nil)
```
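Because the raw string "MapReceived" (and the "MapData" key) must match exactly in two places, one option is to define them once as constants. The sketch below uses the hypothetical names `mapReceived`, `mapDataKey` and `postMapReceived`; it posts the same userInfo as the AppDelegate code, and takes the NotificationCenter as a parameter so it can be exercised with a private center.

```swift
import Foundation

extension Notification.Name {
    /// Same raw string as in the answer, defined once to avoid typos.
    static let mapReceived = Notification.Name("MapReceived")
}

/// userInfo key for the received file URL (matches the answer's "MapData").
let mapDataKey = "MapData"

/// Hypothetical helper: posts the received file URL, mirroring the AppDelegate code.
func postMapReceived(_ url: URL, center: NotificationCenter = .default) {
    center.post(name: .mapReceived, object: nil, userInfo: [mapDataKey: url])
}
```

In viewDidLoad the registration would then become `NotificationCenter.default.addObserver(self, selector: #selector(importWorldMap(_:)), name: .mapReceived, object: nil)`.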

Now that we have all this set up, we of course need to extract the data so it can be used, which is achieved like this:

```swift
/// Imports A WorldMap From A Custom File Type
///
/// - Parameter notification: NSNotification
@objc public func importWorldMap(_ notification: NSNotification) {

    //1. Check That Our UserInfo Contains A Valid URL
    guard let url = notification.userInfo?["MapData"] as? URL else { return }

    //2. Remove All Our Content From The Hierarchy
    self.augmentedRealityView.scene.rootNode.enumerateChildNodes { (existingNode, _) in existingNode.removeFromParentNode() }

    do {
        //3. Convert Our URL To Data
        let data = try Data(contentsOf: url)

        //4. Unarchive Our Data Which Is Of Type [String: Data] A.K.A BMWorlMapItem (Only Foundation Classes Needed Here)
        guard let mapItem = try NSKeyedUnarchiver.unarchivedObject(ofClasses: [NSDictionary.self, NSString.self, NSData.self], from: data) as? BMWorlMapItem,
              let archiveName = mapItem.keys.first,
              let mapData = mapItem[archiveName] else { print("Invalid BMWorldMap File"); return }

        //5. Get The Map Data & Log The Anchors To See If It Includes Our BMAnchor Which We Saved Earlier
        guard let worldMap = try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self, from: mapData) else { return }
        print("Extracted BMWorldMap Item Named = \(archiveName)")
        worldMap.anchors.forEach { anchor in if let name = anchor.name { print("Anchor Name == \(name)") } }

        //6. Restart Our Session
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = .horizontal
        configuration.initialWorldMap = worldMap
        self.augmentedRealityView.session.run(configuration, options: [.resetTracking, .removeExistingAnchors])

    } catch {
        print("Error Extracting Data == \(error)")
    }
}
```

Once our data is extracted, all that remains is to reconfigure our session with the map as its initialWorldMap and run it, which is the final step of importWorldMap.
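Force-casting the unarchived container with `as!` would crash on an unexpected file, so it can be worth surfacing explicit errors instead. Below is a sketch of the container step on its own; `WorldMapImportError` and `unpackWorldMapItem` are hypothetical names. It allows only Foundation classes for the outer container and leaves the inner bytes for a second call such as `NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self, from: mapData)`.

```swift
import Foundation

typealias BMWorlMapItem = [String: Data]

/// Hypothetical error cases for a malformed .bmarwp file.
enum WorldMapImportError: Error {
    case notAContainer
    case emptyContainer
}

/// Hypothetical helper: returns the stored name and the still-archived map bytes.
func unpackWorldMapItem(from data: Data) throws -> (name: String, mapData: Data) {
    // Secure decode: only dictionary, string and data objects may appear in the container.
    let object = try NSKeyedUnarchiver.unarchivedObject(
        ofClasses: [NSDictionary.self, NSString.self, NSData.self], from: data)
    guard let item = object as? BMWorlMapItem else { throw WorldMapImportError.notAContainer }
    guard let entry = item.first else { throw WorldMapImportError.emptyContainer }
    return (entry.key, entry.value)
}
```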

You will note that I am logging the anchor names as a means of checking whether the process was successful, since I create a custom ARAnchor named BMAnchor using a UITapGestureRecognizer like so:

```swift
//------------------------
//MARK: - User Interaction
//------------------------

/// Allows The User To Create An ARAnchor
///
/// - Parameter gesture: UITapGestureRecognizer
@objc func placeAnchor(_ gesture: UITapGestureRecognizer) {

    //1. Get The Current Touch Location
    let currentTouchLocation = gesture.location(in: self.augmentedRealityView)

    //2. Perform An ARSCNView Hit Test For Any Feature Points
    //   (hitTest(_:types:) Is Deprecated From iOS 14 In Favour Of Raycasting, But Still Works)
    guard let hitTest = self.augmentedRealityView.hitTest(currentTouchLocation, types: .featurePoint).first else { return }

    //3. Create Our Anchor & Add It To The Session
    let validAnchor = ARAnchor(name: "BMAnchor", transform: hitTest.worldTransform)
    self.augmentedRealitySession.add(anchor: validAnchor)
}
```

When this anchor is restored, I then generate a model by means of the ARSCNViewDelegate, which again is useful for checking that our process was successful:

```swift
//-------------------------
//MARK: - ARSCNViewDelegate
//-------------------------

extension ViewController: ARSCNViewDelegate {

    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {

        //1. Check We Have Our BMAnchor
        guard anchor.name == "BMAnchor" else { return SCNNode() }

        //2. Create Our Model Node & Add It To The Hierarchy
        let modelNode = SCNNode()
        guard let modelScene = SCNScene(named: "art.scnassets/wavingState.dae") else { return nil }
        for childNode in modelScene.rootNode.childNodes { modelNode.addChildNode(childNode) }
        return modelNode
    }
}
```

Hopefully this will point you in the right direction...

And here is a full working example for you and everyone else to experiment with and adapt to your needs: Sharing ARWorldMaps

All you need to do is wait for the session to start running, place your model, then press Save. When presented with the share sheet, email the file to yourself, then check your email and tap the .bmarwp file, which will autoload in the app ^_________^.

In a document-based app, you can then use the custom file types quite easily.

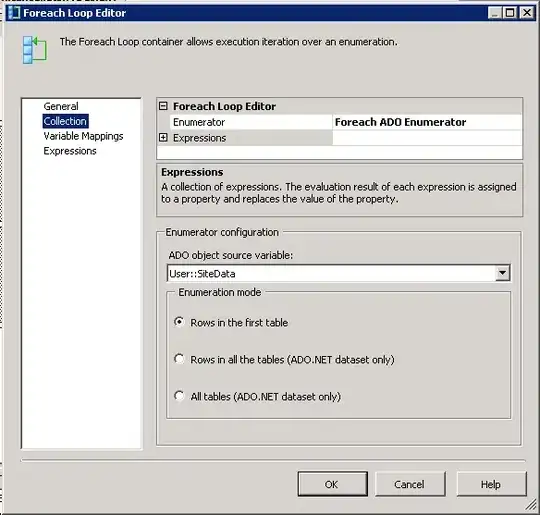

The following are the requirements for your Info.plist:

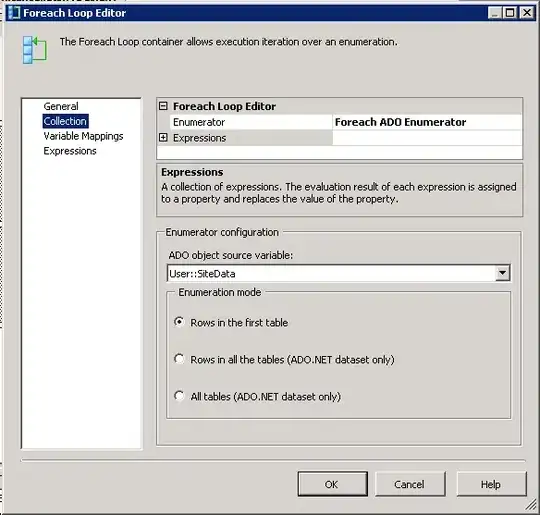

(a) Document Types:

(b) Exported Type UTIs:

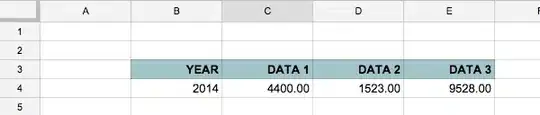

With the info section of your project looking like so:

As such you will eventually end up with different screens like so:

This works both in a basic document-based app I created and in the Apple Files app.

Hope it helps...