I am confused by what Airflow does when a dagrun fails. The behaviour I want to achieve is:

- Regular triggers of the DAG (hourly)

- Retries for the task

- If a task still fails after n retries, send an email about the failure

- When the next hourly trigger comes round, trigger a new dagrun as if nothing had failed.

These are my dag arguments and task arguments:

Task defaults:

    'depends_on_past': True,
    'start_date': airflow.utils.dates.days_ago(2),
    'email': ['email@address.co.uk'],
    'email_on_failure': True,
    'email_on_retry': False,
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
    'wait_for_downstream': False,

DAG arguments:

    schedule_interval=timedelta(minutes=60),
    catchup=False,
    max_active_runs=1
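Put together, these settings would sit in a DAG file roughly as follows. This is only a minimal sketch: the `dag_id` `hourly_example` and the helpers `days_ago_utc` and `build_dag` are hypothetical names introduced for illustration, and `days_ago_utc` is a plain-Python stand-in for `airflow.utils.dates.days_ago` so that the dictionaries can be inspected without Airflow installed.

```python
from datetime import datetime, timedelta, timezone


def days_ago_utc(n):
    """Hypothetical stand-in for airflow.utils.dates.days_ago(n):
    midnight UTC today, shifted n days into the past."""
    midnight = datetime.now(timezone.utc).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    return midnight - timedelta(days=n)


# Task-level defaults, exactly as listed in the question.
default_args = {
    "depends_on_past": True,
    "start_date": days_ago_utc(2),
    "email": ["email@address.co.uk"],
    "email_on_failure": True,
    "email_on_retry": False,
    "retries": 1,
    "retry_delay": timedelta(minutes=5),
    "wait_for_downstream": False,
}

# DAG-level arguments, exactly as listed in the question.
dag_kwargs = {
    "schedule_interval": timedelta(minutes=60),
    "catchup": False,
    "max_active_runs": 1,
}


def build_dag():
    """Build the DAG object; Airflow is imported lazily so the
    dictionaries above can be checked on a machine without it."""
    from airflow import DAG  # assumes an Airflow version that accepts schedule_interval

    # "hourly_example" is a placeholder dag_id, not the real one.
    return DAG("hourly_example", default_args=default_args, **dag_kwargs)
```

Laying it out this way makes it easier to see which settings apply to each task (`default_args`) and which apply to the DAG as a whole.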

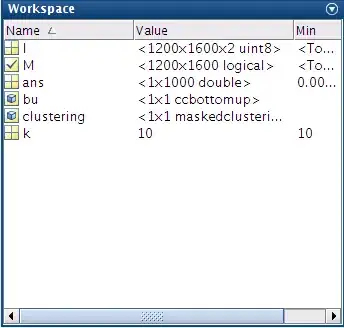

I think I am misunderstanding some of these arguments because it appears to me that if a task fails n times (i.e. the dagrun fails), then the next dagrun gets scheduled but just sits in the running state forever and no further dagruns ever succeed (or even get scheduled). for example, here are the dagruns (I didn't know where to find the text based scheduler logs like in this question) where the dags are scheduled to run every 5 minutes instead of every hour:

The execution runs every 5 minutes until the failure, after that the last execution is just in the running state and has been so for the past 30 minutes.

What have I done wrong?

I should add that restarting the scheduler doesn't help and neither does manually setting that running task to failed...