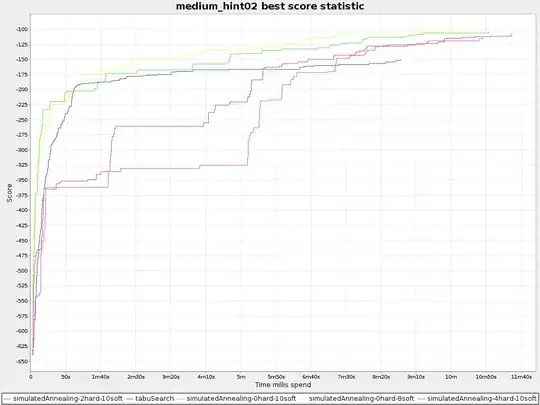

I'm writing a simulated annealing program, and having some trouble debugging it. Any advice would be welcome.

First of all, the output is not deterministic, so I've taken to running it a hundred times and looking at the mean and standard deviation.

But then it takes ages and ages (> 30 minutes) to finish a single test case.
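For concreteness, here is a minimal sketch of the kind of harness described above. It is not my actual code: `anneal` is a hypothetical stand-in that just returns a number, and the per-run seeds are an assumption added so that a single odd-looking run can be replayed on its own.

```python
import random
import statistics


def anneal(seed):
    """Hypothetical stand-in for the real annealer; returns a final cost."""
    rng = random.Random(seed)  # private RNG, so the same seed gives the same run
    # Placeholder result: the real program would run its annealing loop here.
    return min(rng.random() for _ in range(100))


def summarize(n_runs=100, base_seed=0):
    """Run the annealer n_runs times and return (mean, sample std dev)."""
    # Assumption: one fixed seed per run, so any single run is reproducible.
    costs = [anneal(base_seed + i) for i in range(n_runs)]
    return statistics.mean(costs), statistics.stdev(costs)


if __name__ == "__main__":
    mean, stdev = summarize()
    print(f"mean={mean:.4f} stdev={stdev:.4f}")
```

With the real annealer in place of the stand-in, each of the hundred calls is a full run, which is where the 30+ minutes go.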

Usually, I'd try to cut the input down, but reducing the number of iterations directly decreases the accuracy of the result in ways which are not entirely predictable. For example, the cooling schedule is an exponential decay scaled to the number of iterations, so a shorter run does not simply stop early: it cools faster at every step. Reducing the number of separate runs instead makes the output very unreliable (one of the bugs I'm trying to hunt down is an enormous variance between runs).
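To illustrate what "scaled to the number of iterations" means, here is a minimal sketch of such a schedule. The function name and the start and end temperatures (`t_start`, `t_end`) are assumptions chosen for illustration, not the values from my program.

```python
def temperature(step, n_steps, t_start=1.0, t_end=1e-3):
    """Exponential decay from t_start (step 0) to t_end (last step).

    The decay rate depends on n_steps, so the schedule always spans the
    same temperature range no matter how many iterations are allowed.
    """
    if n_steps < 2:
        return t_start
    fraction = step / (n_steps - 1)  # progress through the run, 0.0 to 1.0
    return t_start * (t_end / t_start) ** fraction


def per_step_ratio(n_steps, t_start=1.0, t_end=1e-3):
    """Factor by which the temperature shrinks on every single step."""
    return (t_end / t_start) ** (1.0 / (n_steps - 1))


if __name__ == "__main__":
    for n in (100, 10_000):
        print(n, temperature(n // 2, n), per_step_ratio(n))
```

Both runs start and end at the same temperatures, but the shorter one shrinks the temperature by a much larger factor per step, so it spends far fewer iterations near each temperature. That is why cutting iterations changes the behaviour of the search rather than just truncating it.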

I know premature optimization is the root of all evil, and surely it must be premature to optimize before the program is even correct, but I'm seriously thinking of rewriting this in a faster language (Cython or C), knowing I'd have to port it back to Python in the end for submission.

So, is there any way to test a simulated annealing algorithm better than what I have now? Or should I just work on something else between tests?

Disclosure: this is an assignment for coursework, but I'm not asking you to help me with the actual simulation.