I have been struggling with this for some time now. Apologies in advance: I renamed the query to getDevicesReadings for this question, but I have actually been using getAllUserDevices, which is why that name appears in the results (sorry for any confusion).

    type Device {
        id: String
        device: String!
    }

    type Reading {
        device: String
        time: Int
    }

    type PaginatedDevices {
        devices: [Device]
        readings: [Reading]
        nextToken: String
    }

    type Query {
        getDevicesReadings(nextToken: String, count: Int): PaginatedDevices
    }

I have a resolver on the getDevicesReadings query, which works fine and returns all the devices a user has. So far so good:

    {
        "version": "2017-02-28",
        "operation": "Query",
        "query" : {
            "expression": "id = :id",
            "expressionValues" : {
                ":id" : { "S" : "${context.identity.username}" }
            }
        }
        #if( ${context.arguments.count} )
            ,"limit": ${context.arguments.count}
        #end
        #if( ${context.arguments.nextToken} )
            ,"nextToken": "${context.arguments.nextToken}"
        #end
    }

Now I want to return all the readings those devices have, based on the source result, so I have a resolver on getDevicesReadings/readings:

    #set($ids = [])
    #foreach($id in ${ctx.source.devices})
        #set($map = {})
        $util.qr($map.put("device", $util.dynamodb.toString($id.device)))
        $util.qr($ids.add($map))
    #end
    {
        "version" : "2018-05-29",
        "operation" : "BatchGetItem",
        "tables" : {
            "readings": {
                "keys": $util.toJson($ids),
                "consistentRead": true
            }
        }
    }
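
For reference, this is roughly what I would expect that request template to render to. It is a hand-written sketch rather than actual log output, and it assumes ctx.source.devices holds the two devices shown in the results further down:

```json
{
  "version": "2018-05-29",
  "operation": "BatchGetItem",
  "tables": {
    "readings": {
      "keys": [
        { "device": { "S": "123" } },
        { "device": { "S": "a935eeb8-a0d0-11e8-a020-7c67a28eda41" } }
      ],
      "consistentRead": true
    }
  }
}
```

If that is what is actually sent, each key carries only the device attribute, so it would only match the table's schema if device is the complete primary key of the readings table.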

The response mapping template looks like this:

    $utils.toJson($context.result.data.readings)

I run this query:

    query getShit{
        getDevicesReadings{
            devices{
                device
            }
            readings{
                device
                time
            }
        }
    }

which returns the following results:

    {
        "data": {
            "getAllUserDevices": {
                "devices": [
                    {
                        "device": "123"
                    },
                    {
                        "device": "a935eeb8-a0d0-11e8-a020-7c67a28eda41"
                    }
                ],
                "readings": [
                    null,
                    null
                ]
            }
        }
    }

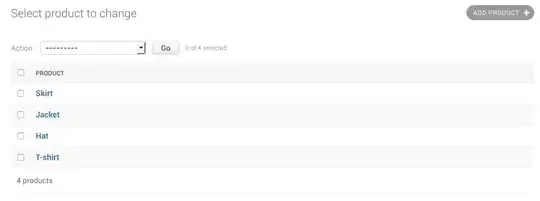

As you can see in the image, the primary partition key on the readings table is device. When I look at the logs, I see the following.

Sorry if you can't read the log; it basically says that there are unprocessedKeys,

and it gives the following error message:

"message": "The provided key element does not match the schema (Service: AmazonDynamoDBv2; Status Code: 400; Error Code: ValidationException; Request ID: 0H21LJE234CH1GO7A705VNQTJVVV4KQNSO5AEMVJF66Q9ASUAAJG)",

I'm guessing that somehow my mapping isn't quite correct and I'm passing in readings as my keys?

Any help is greatly appreciated.