I have a task to classify seeds depending on the defect. I have around 14k images in 7 classes (the classes are not equal in size: some have more photos, some have fewer). I trained Inception V3 from scratch and got around 90% accuracy. Then I tried transfer learning using a model pre-trained with ImageNet weights. I imported `inception_v3` from `applications` without the top FC layers, then added my own as in the documentation. I ended up with the following code:

    # Imports (assumed: standalone Keras 2.x, matching the API used below)
    from keras.applications.inception_v3 import InceptionV3, preprocess_input
    from keras.callbacks import EarlyStopping, ModelCheckpoint
    from keras.layers import Dense, GlobalAveragePooling2D
    from keras.models import Model
    from keras.preprocessing.image import ImageDataGenerator
    from keras.utils import plot_model

    # Setting dimensions
    img_width = 454
    img_height = 227

    ###########################
    # PART 1 - Creating Model #
    ###########################

    # Creating InceptionV3 model without Fully-Connected layers
    base_model = InceptionV3(weights='imagenet', include_top=False,
                             input_shape=(img_height, img_width, 3))

    # Adding layers which will be fine-tuned
    x = base_model.output
    x = GlobalAveragePooling2D()(x)
    x = Dense(1024, activation='relu')(x)
    predictions = Dense(7, activation='softmax')(x)

    # Creating final model
    model = Model(inputs=base_model.input, outputs=predictions)

    # Plotting model
    plot_model(model, to_file='inceptionV3.png')

    # Freezing Convolutional layers
    for layer in base_model.layers:
        layer.trainable = False

    # Summarizing layers (summary() prints by itself)
    model.summary()

    # Compiling the CNN
    model.compile(optimizer='adam', loss='categorical_crossentropy',
                  metrics=['accuracy'])

    #############################################
    # PART 2 - Images Preprocessing and Fitting #
    #############################################

    # Fitting the CNN to the images
    train_datagen = ImageDataGenerator(rescale=1./255,
                                       rotation_range=30,
                                       width_shift_range=0.2,
                                       height_shift_range=0.2,
                                       shear_range=0.2,
                                       zoom_range=0.2,
                                       horizontal_flip=True,
                                       preprocessing_function=preprocess_input)

    valid_datagen = ImageDataGenerator(rescale=1./255,
                                       preprocessing_function=preprocess_input)

    train_generator = train_datagen.flow_from_directory("dataset/training_set",
                                                        target_size=(img_height, img_width),
                                                        batch_size=4,
                                                        class_mode="categorical",
                                                        shuffle=True,
                                                        seed=42)

    valid_generator = valid_datagen.flow_from_directory("dataset/validation_set",
                                                        target_size=(img_height, img_width),
                                                        batch_size=4,
                                                        class_mode="categorical",
                                                        shuffle=True,
                                                        seed=42)

    STEP_SIZE_TRAIN = train_generator.n // train_generator.batch_size
    STEP_SIZE_VALID = valid_generator.n // valid_generator.batch_size

    # Save the model according to the conditions
    checkpoint = ModelCheckpoint("inception_v3_1.h5", monitor='val_acc', verbose=1,
                                 save_best_only=True, save_weights_only=False,
                                 mode='auto', period=1)
    early = EarlyStopping(monitor='val_acc', min_delta=0, patience=10,
                          verbose=1, mode='auto')

    # Training the model
    history = model.fit_generator(generator=train_generator,
                                  steps_per_epoch=STEP_SIZE_TRAIN,
                                  validation_data=valid_generator,
                                  validation_steps=STEP_SIZE_VALID,
                                  epochs=25,
                                  callbacks=[checkpoint, early])

But I got terrible results: 45% accuracy. I expected it to be better. I have some hypotheses about what could have gone wrong:

- I trained from scratch on scaled images (299x299) but used non-scaled images (227x454) for transfer learning, and that broke something (or maybe I got the dimension order wrong).

- For transfer learning I used `preprocessing_function=preprocess_input` (I found an article on the web saying it is extremely important, so I decided to add it).

- I added `rotation_range=30`, `width_shift_range=0.2`, `height_shift_range=0.2`, and `horizontal_flip=True` for transfer learning to augment the data even more.

- Maybe the Adam optimizer is a bad idea? Should I try RMSprop, for example?

- Should I also fine-tune some conv layers using SGD with a small learning rate?
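
The first hypothesis can be sanity-checked without training. The following is only a sketch, assuming the standard InceptionV3 layer sequence, in which seven unpadded 3x3 layers (five in the stem, one in each of the two reduction blocks) shrink the spatial size and every other layer preserves it. The names `valid_out`, `REDUCING_LAYERS`, and `final_map_size` are made up for this illustration and are not part of Keras:

```python
def valid_out(n, kernel, stride):
    """Output length of an unpadded ('valid') conv or pooling layer."""
    return (n - kernel) // stride + 1

# (kernel, stride) of each size-reducing layer, in order (assumed layout):
# conv s2, conv s1, max-pool s2, conv s1, max-pool s2, reduction s2, reduction s2
REDUCING_LAYERS = [(3, 2), (3, 1), (3, 2), (3, 1), (3, 2), (3, 2), (3, 2)]

def final_map_size(n):
    """Spatial size of the last feature map along one input dimension."""
    for kernel, stride in REDUCING_LAYERS:
        n = valid_out(n, kernel, stride)
    return n

img_height, img_width = 227, 454
feature_map = (final_map_size(img_height), final_map_size(img_width))
square_map = (final_map_size(299), final_map_size(299))
print("227x454 input ->", feature_map)
print("299x299 input ->", square_map)
```

Under these assumptions the non-square input still yields a valid feature map, which `GlobalAveragePooling2D` averages into a single vector in the same way as the square map from a 299x299 input. Both `input_shape` and `target_size` in the code above use the same (height, width) order.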

Or did I get something else wrong?
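
One more thing that can be checked without training is the range of the values the network actually receives, since the generators above use both `rescale=1./255` and `preprocess_input`. This is a minimal sketch, assuming that `preprocess_input` for InceptionV3 maps pixels from [0, 255] to [-1, 1] as `x / 127.5 - 1`, and that `ImageDataGenerator` applies `preprocessing_function` before `rescale`. The helper `inception_preprocess` is a stand-in written for illustration, not the Keras function, and both orders are shown in case the ordering assumption is wrong:

```python
import numpy as np

def inception_preprocess(x):
    """Stand-in for InceptionV3's preprocess_input: [0, 255] -> [-1, 1]."""
    return x / 127.5 - 1.0

RESCALE = 1.0 / 255
pixels = np.array([0.0, 127.5, 255.0])  # darkest, middle, brightest pixel

# What the ImageNet weights expect
only_preprocess = inception_preprocess(pixels)

# preprocessing_function first, then rescale (assumed generator order)
preprocess_then_rescale = inception_preprocess(pixels) * RESCALE

# The opposite order, for comparison
rescale_then_preprocess = inception_preprocess(pixels * RESCALE)

print("preprocess only:        ", only_preprocess)
print("preprocess then rescale:", preprocess_then_rescale)
print("rescale then preprocess:", rescale_then_preprocess)
```

In this sketch neither combined order reproduces the [-1, 1] range: one squeezes every pixel to within about 0.004 of zero and the other pushes every pixel close to -1. Dropping one of the two scaling steps might therefore be worth trying before changing the optimizer.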

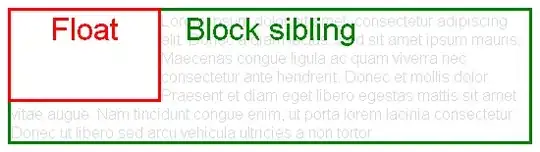

EDIT: Here is a plot of the training history. Maybe it contains valuable information:

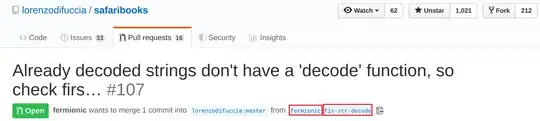

EDIT2: After changing the parameters of InceptionV3:

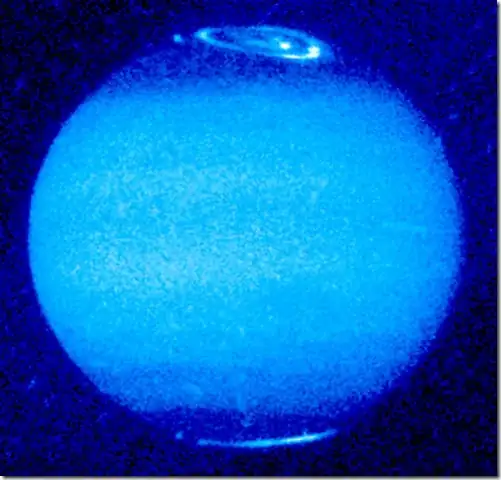

VGG16 for comparison: