This basically shows the underlying geometry for estimating depth.

As you said, we have camera pose Q (Q_L for the left view, Q_R for the right), and we pick a point X in the world; X_L is its projection onto the left image. With Q_L, Q_R and X_L we can build the green epipolar plane. The rest is easy: we search through points on the line (Q_L, X). Every point on this line is a possible depth for X_L, so different hypotheses X1, X2, ... give different projections onto the right image.
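
To make the search concrete, here is a minimal sketch with NumPy. The function name `project_depth_hypotheses`, the intrinsics `K`, and the convention that a left-camera point maps to the right camera as `R @ X + t` are my assumptions for illustration, not something fixed by the figure.

```python
import numpy as np

def project_depth_hypotheses(K, R, t, pixel, depths):
    """Back-project `pixel` (left image) at each depth, then project into the right image.

    Assumes both cameras share intrinsics K and that X_right = R @ X_left + t.
    """
    u, v = pixel
    ray = np.linalg.inv(K) @ np.array([u, v, 1.0])        # viewing ray with z = 1
    depths = np.asarray(depths, dtype=float)
    points_left = depths[:, None] * ray[None, :]          # hypotheses X1, X2, ... on the ray
    points_right = points_left @ R.T + t                  # same points in the right camera frame
    proj = points_right @ K.T                             # homogeneous pixel coordinates
    return proj[:, :2] / proj[:, 2:3]                     # divide by z to get (u, v)

# Hypothetical rectified stereo pair: focal length 500 px, baseline 0.1 along x.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([-0.1, 0.0, 0.0])
candidates = project_depth_hypotheses(K, R, t, (320, 240), [1.0, 2.0, 4.0])
print(candidates)
```

In this toy setup all candidates land on the row v = 240, which is the epipolar line in the right image, and they slide along it as the assumed depth changes.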

Now we compare the pixel intensity at X_L with the intensity at each reprojected point in the right image, and pick the hypothesis with the smallest difference; its depth is the one we want.
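
A rough sketch of that selection step, assuming grayscale images stored as NumPy arrays indexed `[row, col]` and nearest-pixel lookup (no interpolation). `best_depth_by_intensity` and the toy images are hypothetical names and data made up for the example.

```python
import numpy as np

def best_depth_by_intensity(left_img, right_img, pixel, candidates, depths):
    """Return the depth whose reprojection has the closest intensity to the left pixel."""
    u, v = pixel
    ref = float(left_img[v, u])
    errors = []
    for ur, vr in candidates:
        ui, vi = int(round(ur)), int(round(vr))
        inside = 0 <= vi < right_img.shape[0] and 0 <= ui < right_img.shape[1]
        # Candidates that fall outside the right image can never be a match.
        errors.append(abs(float(right_img[vi, ui]) - ref) if inside else np.inf)
    best = int(np.argmin(errors))
    return depths[best], errors

# Toy images: one bright pixel on a dark background.
left_img = np.zeros((5, 10))
left_img[2, 6] = 200.0
right_img = np.full((5, 10), 50.0)
right_img[2, 4] = 200.0

depth, errors = best_depth_by_intensity(
    left_img, right_img, (6, 2),
    candidates=[(3.0, 2.0), (4.0, 2.0), (5.0, 2.0)],
    depths=[1.0, 2.0, 4.0],
)
print(depth, errors)
```

Only the second candidate hits the bright pixel, so the second depth hypothesis wins.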

Pretty easy, hey? The truth is it's way harder, because the intensity profile of an image is never strictly convex:

This makes matching extremely hard: with a non-convex intensity profile, any distance function will have multiple local minima (candidate matches), so how do you decide which one is correct?

To handle this, people proposed patch-based matching with metrics such as SAD (sum of absolute differences), SSD (sum of squared differences) and NCC (normalized cross-correlation). They compare a small window around the pixel rather than a single intensity, which makes the distance function as close to convex as possible. Still, they cannot handle large-scale repeated texture or low texture.
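
For reference, a minimal sketch of the three patch metrics for two equally sized patches. The NCC here is the zero-mean variant (often called ZNCC), which is my choice for the example; the small `eps` is only there to avoid dividing by zero on flat patches.

```python
import numpy as np

def sad(a, b):
    """Sum of absolute differences: lower is better."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.abs(a - b).sum())

def ssd(a, b):
    """Sum of squared differences: lower is better."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(((a - b) ** 2).sum())

def ncc(a, b, eps=1e-12):
    """Zero-mean normalized cross-correlation in [-1, 1]: higher is better."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    a0, b0 = a - a.mean(), b - b.mean()
    return float((a0 * b0).sum() / (np.sqrt((a0 ** 2).sum() * (b0 ** 2).sum()) + eps))

patch = np.array([[1.0, 2.0], [3.0, 4.0]])
brighter = 2.0 * patch + 10.0   # same structure, different gain and offset
print(sad(patch, brighter), ssd(patch, brighter), ncc(patch, brighter))
```

SAD and SSD penalize the brightness change, while NCC stays close to 1 because it normalizes out gain and offset.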

To solve this, people started to search over a long range along the epipolar line, and found that the matching scores over that range can be described as a distribution along the depth.

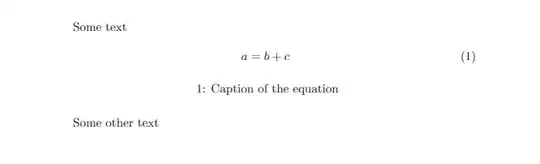

The horizontal axis is depth and the vertical axis is the matching score. This picture leads to the depth filter: we usually model the distribution as a Gaussian (hence the name Gaussian depth filter) and use it to describe the uncertainty of the depth. Combined with patch matching, this gives a rough depth proposal.
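
As a sketch of how such a filter merges information (the symbols are my own notation): if the current depth estimate is $\mathcal{N}(\mu, \sigma^2)$ and a new measurement is $\mathcal{N}(\mu_{obs}, \sigma_{obs}^2)$, multiplying the two Gaussians gives another Gaussian with

$$
\mu' = \frac{\sigma_{obs}^2\,\mu + \sigma^2\,\mu_{obs}}{\sigma^2 + \sigma_{obs}^2},
\qquad
\sigma'^2 = \frac{\sigma^2\,\sigma_{obs}^2}{\sigma^2 + \sigma_{obs}^2}
$$

For example, fusing $\mathcal{N}(2.0, 0.04)$ with $\mathcal{N}(2.2, 0.01)$ gives $\mu' = 2.16$ and $\sigma'^2 = 0.008$: the mean moves toward the more certain measurement, and the variance is smaller than either input.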

Now what? Let's use an optimization tool such as Gauss-Newton (GN) or gradient descent to refine the depth estimate.
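
What the residual looks like depends on the system, so the following is only a sketch under my own assumptions: a generic one-dimensional Gauss-Newton loop with a forward-difference Jacobian, applied to a toy residual where the predicted right-image column `320 - 50 / d` (focal length times baseline = 50, principal point 320) should match an observed column of 295. `gauss_newton_1d` and the numbers are hypothetical.

```python
import numpy as np

def gauss_newton_1d(residual_fn, d0, iters=10, h=1e-6, tol=1e-10):
    """Minimize sum(residual_fn(d) ** 2) over a scalar depth d with Gauss-Newton."""
    d = float(d0)
    for _ in range(iters):
        r = np.atleast_1d(residual_fn(d)).astype(float)
        # Forward-difference approximation of the Jacobian dr/dd.
        J = (np.atleast_1d(residual_fn(d + h)).astype(float) - r) / h
        denom = float(J @ J)
        if denom == 0.0:
            break                      # flat residual, no useful update direction
        step = -float(J @ r) / denom   # Gauss-Newton update for a single unknown
        d += step
        if abs(step) < tol:
            break
    return d

# Toy residual: predicted column in the right image minus the observed column.
residual = lambda d: (320.0 - 50.0 / d) - 295.0
refined = gauss_newton_1d(residual, d0=1.5)
print(refined)
```

Starting from the rough proposal 1.5, the loop converges to the depth 2.0 at which the residual vanishes. A real photometric residual would be built from patch intensities instead, and it needs a good initial proposal because of the local minima discussed above.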

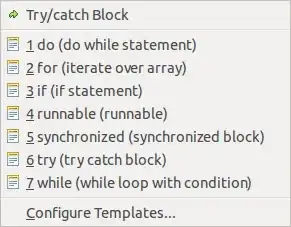

To sum up, the whole depth estimation process goes like this:

- assume the depth of every pixel follows an initial Gaussian distribution

- search along the epipolar line and reproject the candidate points into the target frame

- triangulate the depth and compute its uncertainty from the depth filter

- repeat the search and triangulation steps to get a new depth distribution and merge it with the previous one; if the estimate has converged then stop, otherwise go back to the search step.
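
The loop above can be sketched for a single pixel as follows. The epipolar search and triangulation are replaced here by a hard-coded list of (depth, variance) measurements, and convergence is taken to mean "variance below a threshold"; both are simplifying assumptions of mine, and `fuse` and `run_depth_filter` are hypothetical names.

```python
def fuse(mu_a, var_a, mu_b, var_b):
    """Merge two Gaussian depth estimates (product of Gaussians)."""
    var = var_a * var_b / (var_a + var_b)
    mu = (var_b * mu_a + var_a * mu_b) / (var_a + var_b)
    return mu, var

def run_depth_filter(mu, var, measurements, var_threshold):
    """Fuse measurements one by one until the depth variance drops below the threshold."""
    used = 0
    for z, z_var in measurements:
        # In a real system z and z_var come from the epipolar search and triangulation.
        mu, var = fuse(mu, var, z, z_var)
        used += 1
        if var < var_threshold:
            break                      # converged: the estimate is certain enough
    return mu, var, used

# Vague prior around depth 1.0, then noisy measurements around the true depth 2.0.
measurements = [(2.1, 0.01), (1.9, 0.01), (2.0, 0.01), (2.05, 0.01)]
mu, var, used = run_depth_filter(1.0, 1.0, measurements, var_threshold=0.004)
print(mu, var, used)
```

With these numbers the filter stops after three measurements, with the mean pulled from the prior toward 2.0 and the variance shrinking at every update.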