I'd be thankful for any thoughts, tips, or links on this:

Using TF 1.10 and the recent Object Detection API (GitHub, 2018-08-18), I can do box and mask prediction on the PETS dataset as well as on my own proof-of-concept dataset:

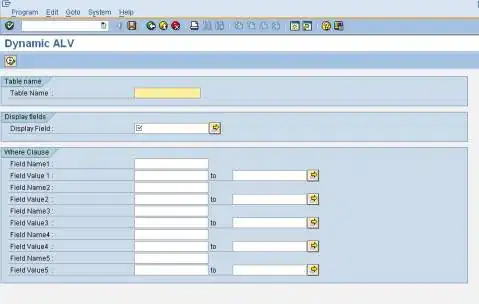

But when training on the Cityscapes traffic signs (single class), I am having trouble getting any results. I have adapted the anchors to account for the much smaller objects, and the RPN at least seems to be doing something useful.
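To sanity-check whether adapted anchors can actually match small signs, it may help to compute the anchor shapes and the best IoU a ground-truth box can reach. This is a minimal sketch, assuming the scale/aspect-ratio parameterization of a grid anchor generator (aspect ratio = width / height, with a base anchor size defaulting to 256x256 here); the function names and the example object sizes are hypothetical, not part of the API.

```python
import math

def grid_anchor_sizes(scales, aspect_ratios, base_anchor_size=(256, 256)):
    """Return (height, width) for every scale/aspect-ratio combination.

    Assumes aspect ratio = width / height and that sqrt(ratio) is split
    between height and width so each anchor's area is (scale * base)^2.
    """
    base_h, base_w = base_anchor_size
    sizes = []
    for scale in scales:
        for ratio in aspect_ratios:
            h = scale * base_h / math.sqrt(ratio)
            w = scale * base_w * math.sqrt(ratio)
            sizes.append((h, w))
    return sizes

def best_centered_iou(object_hw, anchor_sizes):
    """Best IoU between an object and any anchor, both centered at the same point.

    This is an upper bound on what a grid-aligned anchor can achieve.
    """
    oh, ow = object_hw
    best = 0.0
    for ah, aw in anchor_sizes:
        inter = min(oh, ah) * min(ow, aw)
        union = oh * ow + ah * aw - inter
        best = max(best, inter / union)
    return best

# Hypothetical 20x20 px traffic sign against two anchor setups.
default_anchors = grid_anchor_sizes((0.25, 0.5, 1.0, 2.0), (0.5, 1.0, 2.0))
small_anchors = grid_anchor_sizes((0.0625, 0.125), (1.0,))
print(best_centered_iou((20, 20), default_anchors))  # about 0.098
print(best_centered_iou((20, 20), small_anchors))    # 0.64
```

With the default-style scales, a 20x20 object never gets above roughly 0.1 IoU, far below the positive-match thresholds commonly used for an RPN, so checking this number for the real object size distribution is a cheap first test.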

However, the box predictor does not seem to kick in at all: I am not getting any boxes, let alone masks.

My pipeline configs are mostly, or even exactly, like the sample configs, so I'd suspect either a problem with this specific type of data or a bug.

Do you have any tips or links on how to do any of the following?

- visualize the RPN results when using 2 or 3 stages? (Setting only one stage, `number_of_stages: 1`, does that, but how would one force it otherwise?)

- train the RPN first and continue with the box classifier later?

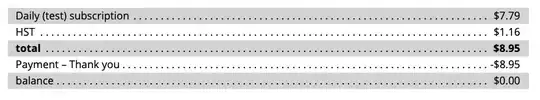

- investigate where and why the boxes get lost? (All predictions have zero scores, while evaluation reports zero classification error.)
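One way to narrow down where the boxes disappear is to inspect the raw second-stage class logits before post-processing. Zero detection scores together with a near-zero classification loss could be consistent with the classifier predicting background for every proposal, which is cheap for the loss when background dominates. This is a minimal sketch, assuming you have already fetched the logits as a NumPy array of shape `[num_proposals, num_classes + 1]` with background in column 0; that layout and the function name `background_report` are assumptions for illustration, not the API's own tooling.

```python
import numpy as np

def background_report(logits):
    """Summarize second-stage class logits of shape [num_proposals, num_classes + 1].

    Assumes column 0 is the background class (an assumption of this sketch).
    """
    logits = np.asarray(logits, dtype=np.float64)
    # Numerically stable softmax over the class axis.
    shifted = logits - logits.max(axis=1, keepdims=True)
    probs = np.exp(shifted)
    probs /= probs.sum(axis=1, keepdims=True)
    foreground = probs[:, 1:]
    return {
        "num_proposals": int(logits.shape[0]),
        # argmax == 0 means background beats every foreground class
        # (ties resolve to background because argmax picks the first index).
        "background_wins": int((probs.argmax(axis=1) == 0).sum()),
        "max_foreground_score": float(foreground.max()) if foreground.size else 0.0,
    }

# Toy logits for three proposals and a single foreground class.
print(background_report([[5.0, 0.0], [0.0, 5.0], [3.0, 3.0]]))
```

If `background_wins` equals `num_proposals` on real images, the boxes are being lost in the second-stage classifier rather than in the RPN or in the final score thresholding.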