I've been following this tutorial on the TensorFlow Object Detection API, and I've successfully trained my own object detection model using Google's Cloud TPUs.

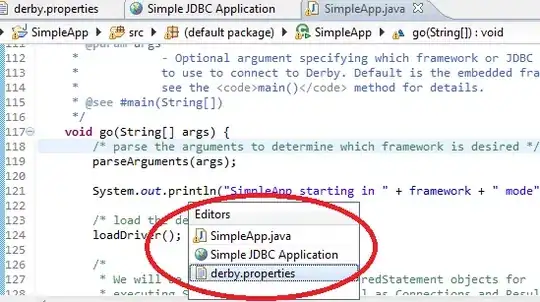

However, the plots I'm seeing on TensorBoard have only 2 data points each (so each one is just a straight line), like this:

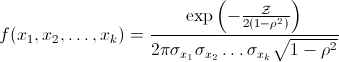

...whereas I want to see more "granular" plots like these below, which are much more detailed:

The tutorial I've been following acknowledges that this happens because training on TPUs takes very few steps:

> Note that these graphs only have 2 points plotted since the model trains quickly in very few steps (if you’ve used TensorBoard before you may be used to seeing more of a curve here)

I tried adding `save_checkpoints_steps=50` in the file `model_tpu_main.py` (see code fragment below), and when I re-ran training, I was able to get a more granular plot, with 1 data point every 300 steps or so.

    config = tf.contrib.tpu.RunConfig(
        # I added this line below:
        save_checkpoints_steps=50,
        master=tpu_grpc_url,
        evaluation_master=tpu_grpc_url,
        model_dir=FLAGS.model_dir,
        tpu_config=tf.contrib.tpu.TPUConfig(
            iterations_per_loop=FLAGS.iterations_per_loop,
            num_shards=FLAGS.num_shards))

However, my training job actually saves a checkpoint every 100 steps, while the logs show that my evaluation job runs only every 300 steps. Is there a way I can make my evaluation job run every 100 steps (whenever there's a new checkpoint) so that I can get more granular plots on TensorBoard?
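
One possible explanation for checkpoints landing every 100 steps despite `save_checkpoints_steps=50` is that, on a TPU, checkpoint hooks are only consulted between training loops, i.e. every `iterations_per_loop` steps. The following is a simplified pure-Python model of that behaviour, not TensorFlow code. The function `checkpoint_steps` is hypothetical, and `iterations_per_loop=100` is an assumed value, since the actual flag value is not shown above.

```python
def checkpoint_steps(total_steps, iterations_per_loop, save_checkpoints_steps):
    """Return the global steps at which a checkpoint would be written.

    Simplifying assumption: the saver is only consulted at loop boundaries
    (every `iterations_per_loop` steps) and saves whenever at least
    `save_checkpoints_steps` steps have passed since the last save.
    The initial checkpoint at step 0 is ignored.
    """
    saved = []
    last_saved = 0
    for step in range(iterations_per_loop, total_steps + 1, iterations_per_loop):
        if step - last_saved >= save_checkpoints_steps:
            saved.append(step)
            last_saved = step
    return saved


if __name__ == "__main__":
    # Assumed iterations_per_loop=100: checkpoints can only land on multiples of 100.
    print(checkpoint_steps(600, iterations_per_loop=100, save_checkpoints_steps=50))
    # With a smaller loop size, the requested 50-step interval becomes reachable.
    print(checkpoint_steps(600, iterations_per_loop=50, save_checkpoints_steps=50))
```

If this model is right, checkpoints cannot be more frequent than `iterations_per_loop`, whatever `save_checkpoints_steps` is set to. It does not explain why evaluation runs only on every third checkpoint.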