My team’s been thrown into the deep end and have been asked to build a federated search of customers over a variety of large datasets which hold varying degrees of differing data about each individuals (and no matching identifiers) and I was wondering how to go about implementing it.

I was thinking Apache Nifi would be a good fit to query our various databases, merge the result, deduplicate the entries via an external tool and then push this result into a database which is then queried for use in an Elasticsearch instance for the applications use.

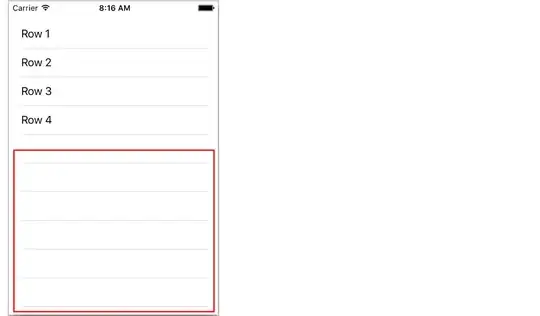

So roughly speaking something like this:-

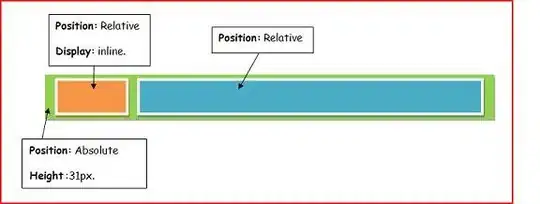

For examples sake the following data then exists in the result database from the first flow :-

Then running https://github.com/dedupeio/dedupe over this database table which will add cluster ids to aid the record linkage, e.g.:-

Second flow would then query the result database and feed this result into Elasticsearch instance for use by the applications API for querying which would use the cluster id to link the duplicates.

Couple questions:-

How would I trigger dedupe to run on the merged content was pushed to the database?

The corollary question - how would the second flow know when to fetch results for pushing into Elasticsearch? Periodic polling?

I also haven’t considered any CDC process here as the databases will be getting constantly updated which I'd need to handle, so really interested if anybody had solved a similar problem or used different approach (happy to consider other technologies too).

Thanks!