I'm trying to explain a 101.10 difference in value.

303'300'000/12 is about 25'275'000.

However, according to MS-SQL, it's 25'274'898.90.

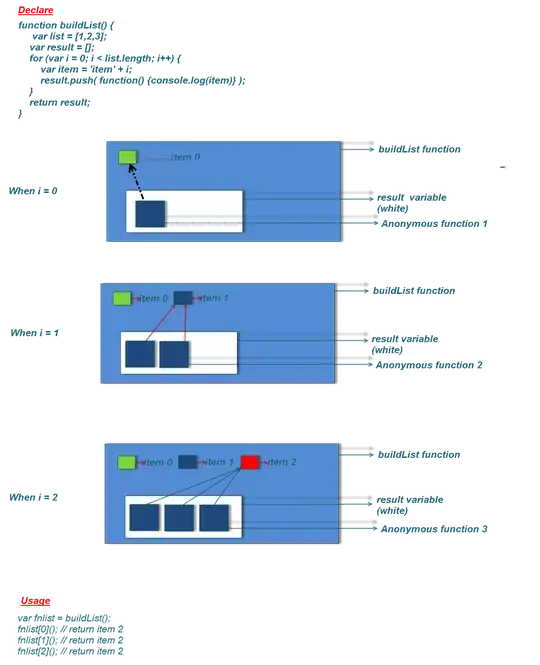

Consider this (dumbed down) SQL-statement:

SELECT

303300000.00/12.0 AS a

,1.0/12.0*303300000.00 AS b

,1.0/12.0 AS omg

,1.0/CAST(12.0 AS float) AS expected

,0.083333*303300000.0 AS r1

,0.083333333333333300 * 303300000.0 AS r2

I thought since I wrote 1.0/12.0 it would cast as float

(which is itselfs dumb, but that's another story),

but apparently, it's decimal (8,6)

CREATE VIEW dbo._Test

AS

SELECT 1.0/12.0 as xxx

SELECT

COLUMN_NAME

,DATA_TYPE

,NUMERIC_PRECISION

,NUMERIC_SCALE

FROM INFORMATION_SCHEMA.COLUMNS WHERE TABLE_NAME = '_Test'

DROP VIEW dbo._Test

Is there any reason to this madness ?

How does it determine the precision ?

Is there any notation to force float, without the need for a cast statement ?

If I run the same query on PostgreSQL, pg does it right...