I have a 900 MB zip file in my blob storage. How can I unzip it within the Azure platform itself?

I tried blob-to-blob unzipping with a Logic App, but the maximum file size there is only 50 MB.

Any input is appreciated.

You can use Azure Data Factory (ADF), which supports decompressing data during a copy. Specify the compression property on the input dataset, and the copy activity reads the compressed data from the source and decompresses it.

You can also specify the property on the output dataset, in which case the copy activity compresses the data before writing it to the sink.

For your use case, you need to read compressed data from an Azure blob, decompress it, and write the result to an Azure blob. So define the input Azure Blob dataset with the compression type set to match the file (GZIP in this example; for a .zip archive, the corresponding type is ZipDeflate).
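As a rough illustration of the dataset setup described above, here is a minimal Python sketch that builds the JSON definitions for a compressed source dataset and an uncompressed sink dataset. It assumes the Binary dataset format with an `AzureBlobStorageLocation` and the `ZipDeflate` compression type; the dataset names, linked service name, container names, and file name are all hypothetical placeholders, so check the layout against the ADF compression documentation before using it.

```python
import json


def make_binary_dataset(name, linked_service, container,
                        file_name=None, compression_type=None):
    """Build an ADF Binary dataset definition as a plain dict.

    All argument values are supplied by the caller; nothing here talks to Azure.
    """
    location = {"type": "AzureBlobStorageLocation", "container": container}
    if file_name:
        location["fileName"] = file_name

    type_properties = {"location": location}
    if compression_type:
        # Setting compression on the *input* dataset tells the copy
        # activity to decompress while reading.
        type_properties["compression"] = {"type": compression_type}

    return {
        "name": name,
        "properties": {
            "type": "Binary",
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            "typeProperties": type_properties,
        },
    }


# Hypothetical names: replace with your own linked service and containers.
source = make_binary_dataset(
    "ZippedInput", "MyBlobStorage", "input",
    file_name="archive.zip", compression_type="ZipDeflate",
)
# No compression on the sink, so the extracted files are written as-is.
sink = make_binary_dataset("UnzippedOutput", "MyBlobStorage", "output")

print(json.dumps(source, indent=2))
print(json.dumps(sink, indent=2))
```

The printed JSON can be pasted into the dataset code view in ADF, and a copy activity that reads from the first dataset and writes to the second should then extract the archive contents into the output container.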

Link - ADF - compression support

As Abhishek mentioned, you could use ADF, and the Copy Data tool can help you create the pipeline.

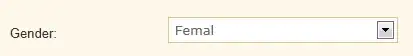

For example, if you just want to unzip a file, you could use the following settings.