When doing a TCP analysis of the traffic between my web servers and database servers, I see the network buffers (TCP window) filling up frequently. The web servers then send TCP messages (zero-window advertisements) to the database server, telling it that their buffers are full and not to send more data until given a window update.

For example, this is the size of the network buffer in bytes over time for one of the more long-lived connections to the database server:

The web servers run a .NET 4.0 application in IIS integrated mode on Windows Server 2008 R2. The database server runs SQL Server 2008 R2.

My interpretation of this is that the SQL server is returning data to the web servers faster than the application on the web server can collect it from the buffers. I have tried tuning just about everything I can in the network drivers to work around this issue, in particular increasing the number of RSS queues, disabling interrupt moderation, and setting Windows Server 2008 R2 to grow the buffer size more aggressively.

So if my interpretation is correct, that leaves me wondering about two possibilities:

- Is there any way in .NET to tell it to increase the size of the network buffers? The "enhanced 2008 R2 TCP stack" rarely decides to enable window scaling (making the buffer bigger than 64 KB, i.e. 65,535 bytes) for this connection, probably due to the low latency. It looks like the ability to set this manually system-wide is gone in Windows Server 2008 R2 (there used to be registry entries that are now ignored). So is there a way I can force this in the code?

- Is there anything that can be tuned to speed up the rate at which the application reads data from the network buffers, in particular for the SQL connections?
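A rough way to see why the auto-tuning stack may not bother enabling window scaling on a low-latency link is the bandwidth-delay product, which gives the window needed to keep the link full. The link speed and round-trip time below are hypothetical (a 1 Gbit/s link and a 0.2 ms RTT are assumptions, not measurements from these servers):

$$
W_{\text{needed}} = B \times \text{RTT} = \frac{10^{9}\ \text{bit/s}}{8\ \text{bit/B}} \times \left(0.2 \times 10^{-3}\ \text{s}\right) = 25{,}000\ \text{B} < 65{,}535\ \text{B}
$$

Under those assumed numbers an unscaled window is already large enough to keep the link busy, so a window that repeatedly fills up would be consistent with the interpretation that the receiving application is not draining the buffer fast enough, rather than the window itself being too small.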

Edit:

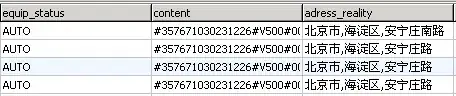

Requested DMV query (output cut off after ASYNC_NETWORK_IO):

```sql
SELECT * FROM sys.dm_os_wait_stats ORDER BY waiting_tasks_count DESC;
```

| wait_type | waiting_tasks_count | wait_time_ms | max_wait_time_ms | signal_wait_time_ms |
|---|---:|---:|---:|---:|
| CXPACKET | 1436226309 | 2772827343 | 39259 | 354295135 |
| SLEEP_TASK | 231661274 | 337253925 | 10808 | 71665032 |
| LATCH_EX | 214958564 | 894509148 | 11855 | 84816450 |
| SOS_SCHEDULER_YIELD | 176997645 | 227440530 | 2997 | 227332659 |
| ASYNC_NETWORK_IO | 112914243 | 84132232 | 16707 | 16250951 |
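ASYNC_NETWORK_IO is the wait SQL Server records while it waits for the client to consume result data it has sent. As a rough reading of that row, the average wait per task and the share of the wait spent as signal wait (waiting for a CPU after the resource became available) can be computed directly from the numbers above. This is only arithmetic on the pasted output, not an additional measurement:

$$
\bar{t}_{\text{ASYNC\_NETWORK\_IO}} = \frac{84{,}132{,}232\ \text{ms}}{112{,}914{,}243} \approx 0.745\ \text{ms},
\qquad
\frac{16{,}250{,}951\ \text{ms}}{84{,}132{,}232\ \text{ms}} \approx 0.193
$$

So, on these cumulative counters, each ASYNC_NETWORK_IO wait averaged under a millisecond, and roughly 19% of the total was signal wait, with the remaining 67,881,281 ms spent waiting on the client side of the connection.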