I second the comments -this would be much easier if you gave a slightly more verbose description of your problem and most importantly add a minimal working example.

As far as I understand though, you want to solve a linear system of equations with some additional assumptions about the fitted parameters. This can be done by expressing them as an optimisation problem.

Here for example I've fitted a quadratic where the coefficients of x^0 and x^1 are both dependant on some other arbitrary parameter a (for this example a = 6 - that's what we're trying to recover from the data).

There are 2 different approaches plotted here - unconstrained and constrained optimisation. You can see that all of them approximate our data well, but only the constrained optimisation recovers a value of a close to 6 (5.728). Anyway, have a look at the code and I hope this helps with your problem somewhat. If you can, try to use the reduced number of parameters approach. It is always better to reduce your fitting problems to lower dimensional spaces if possible - much less risk of local minima and much faster solutions.

Here is the code:

close all; clear; clc;

%% Generate test data

x = 1:100;

rng(0); % Seed rng

% Polynomial where we know something about the parameters - we know that if

% the coefficient of x^0 is 'a'm then the coefficient of x^1 is (1-a).

a = 6;

y = a + (1-a).*x + 0.1*x.^2;

y = y + 30*randn(size(x)); % Add some noise

%% Fit with mrdivide and Vandermonde matrix

A = vander(x); A = A(:,end-2:end)';

b = y;

k1 = b/A;

%% Fit with an unconstrained optimiser

f = @(k) optimfun1(x,y,k);

k0 = [1 1 1]; % Starting point

k2 = fminsearch(f,k0);

%% Fit with a constrained optimiser

f = @(k) optimfun1(x,y,k);

k0 = [1 1 1];

Aeq = [0 1 1]; beq = 1; % Constrain k2 = 1 - k3 which is equivalent to k2+k3 = 1

k3 = fmincon(f,k0,[],[],Aeq,beq);

%% Fit with a reduced number of parameters

f = @(k) optimfun2(x,y,k);

k0 = [1 1];

k4 = fminsearch(f,k0);

k4 = [k4 1-k4(2)]; % Infer the last coeff.

%% Plot

plot(x,y,'ko');

hold on

plot(x,polyval(k1,x));

plot(x,polyval(k2,x));

plot(x,polyval(k3,x));

plot(x,polyval(k4,x));

legend('k^{dat} = [6.000 -5.000 0.100];',...

sprintf('k^{unc}_1 = [%.3f %.3f %.3f]',flipud(k1(:))),...

sprintf('k^{unc}_2 = [%.3f %.3f %.3f]',flipud(k2(:))),...

sprintf('k^{cns}_1 = [%.3f %.3f %.3f]',flipud(k3(:))),...

sprintf('k^{cns}_2 = [%.3f %.3f %.3f]',flipud(k4(:))),...

'location','northwest');

grid on;

xlabel('x');

ylabel('f(x)');

title(sprintf('f(x) = a + (1-a)x + 0.1x^2; a = %d',a));

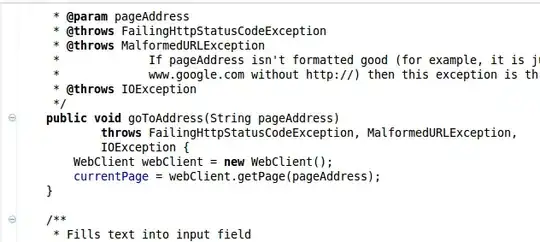

function diff = optimfun1(x,y,k)

yfit = polyval(k,x);

dy = yfit-y;

diff = sum(dy.^2); % Sum of squared residuals

end

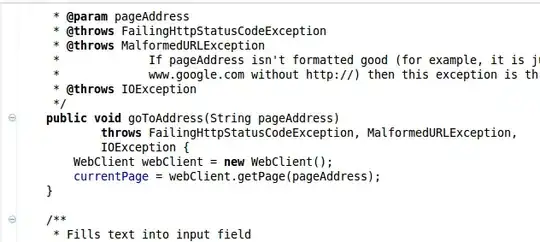

function diff = optimfun2(x,y,k)

k = [k 1-k(2)]; % Infer the last coeff.

yfit = polyval(k,x);

dy = yfit-y;

diff = sum(dy.^2);

end