TL;DR - This is my suggestion:

nI = numel(idx);

sz = size(m);

x = sparse( 1:nI, idx, m(sub2ind( size(m), 1:numel(idx), idx )), sz(1), sz(2), nI);

The rest of the post discusses why it works better.

Seeing how the desired output matrix consists mostly of zeros, this practically begs the use of sparse matrices! Not only should this increase performance (especially for larger matrices), it should be much more memory friendly.

I'm going to add two functions to Wolfie's benchmark:

function x = f_sp_loop( m, idx )

nI = numel(idx);

sz = size(m);

x = spalloc( sz(1), sz(2), nI ); % Initialize a sparse array.

for indI = 1:nI

x( indI, idx(indI) ) = m( indI, idx(indI) ); % This generates a warning (inefficient)

end

end

function x = f_sp_sub2ind( m, idx )

nI = numel(idx);

sz = size(m);

x = sparse( 1:nI, idx, m(sub2ind( size(m), 1:numel(idx), idx )), sz(1), sz(2), nI);

end

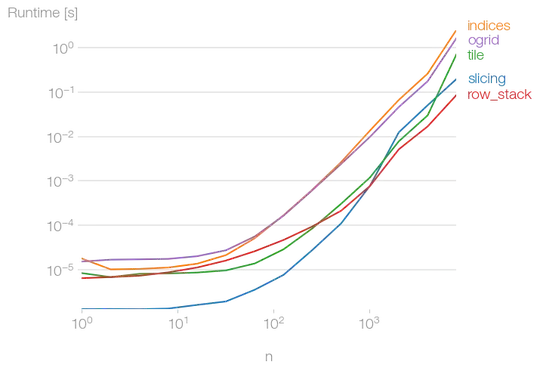

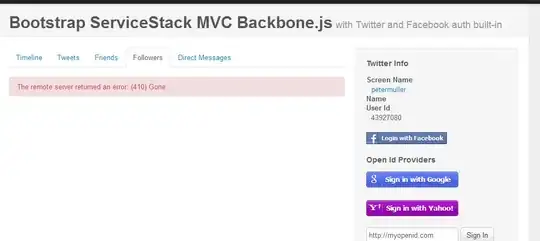

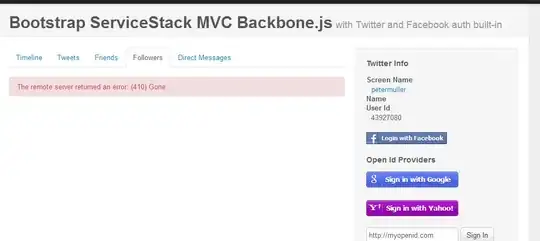

The difference, essentially, is that we're not preallocating the output as an array of zeros, but rather as a sparse array. The result of the benchmark1 is as follows:

... this raises a question - how come is the sparse approach about an order of magnitude faster?

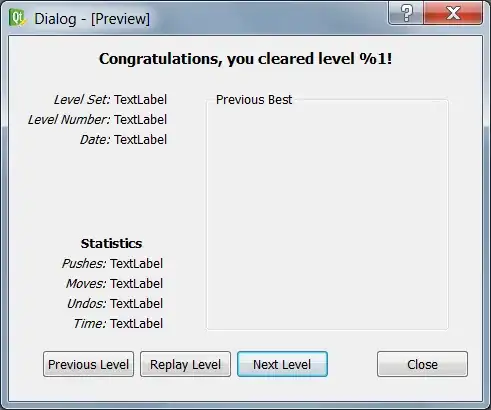

To answer this, we should look at the actual runtime distribution inside the benchmarking function, which we can get from the profiler. To get even more information, we can make the profiler output memory consumption info, using profile('-memory','on'). After running a somewhat shorter version2 of the benchmark, which only runs for the top value of k, we get:

So we can conclude several things:

- The vast majority of runtime is spent on allocating and freeing memory, which is why the algorithms appear to have almost identical performance. Therefore, if we cut down on memory allocations, we directly save time (big plus for

sparse!).

- Even though the

sub2ind and loop approaches appear the same, we can still establish a "winner" among the two (see purple box on the bottom image) - sub2ind! It's 32ms for sub2ind vs 41ms for the loop.

The sparse loop approach is unsurprisingly slow, as we were warned by mlint:

Explanation

Code Analyzer detects an indexing pattern for a sparse array that is likely to be slow. An assignment that changes the nonzero pattern of a sparse array can cause this error because such assignments result in considerable overhead.

Suggested Action

If possible, build sparse arrays using sparse as follows, and do not use indexed assignments (such as C(4) = B) to build them:

- Create separate index and value arrays.

- Call sparse to assemble the index and value arrays.

If you must use indexed assignments to build sparse arrays, you can optimize performance by first preallocating the sparse array with spalloc.

If the code changes only array elements that are already nonzero, then the overhead is reasonable. Suppress this message as described in Adjust Code Analyzer Message Indicators and Messages.

For more information, see “Constructing Sparse Matrices”.

- The approach which combines the best of both worlds, meaning the memory savings of

sparse and the vectorization of sub2ind, appears to be the best one with a mere 3ms runtime!

1 Code for making the chart:

function q51605093()

K = 15; % Max loop variable

T = zeros( K, 4 ); % Timing results

for k = 1:K

M = 2^(k-1); N = 2^k; % size of matrix

m = rand( M, N ); % random matrix

idx = randperm( N, M ); % column indices

% Define anonymous functions with no inputs, for timeit, and run

f = cell(4,1);

f{1} = @() f_sub2ind( m, idx );

f{2} = @() f_loop( m, idx );

f{3} = @() f_sp_loop( m, idx );

f{4} = @() f_sp_sub2ind( m, idx );

T(k,:) = cellfun(@timeit, f);

if k == 5 % test equality during one of the runs

R = cellfun(@feval, f, 'UniformOutput', false);

assert(isequal(R{:}));

end

end

% Plot results

figure();

semilogy( (1:K).', T, 'linewidth', 2 ); grid on; xticks(0:K);

legend( {'sub2ind', 'loop', 'sp\_loop', 'sp\_sub2ind'}, 'Location', 'NorthWest' );

xlabel( 'k, where matrix had 2^{(k-1)} rows and 2^k columns' );

ylabel( 'function time (s)' )

end

function x = f_sub2ind( m, idx )

% Using the in-built sub2ind to generate linear indices, then indexing

lin_idx = sub2ind( size(m), 1:numel(idx), idx );

x = zeros( size(m) );

x( lin_idx ) = m( lin_idx );

end

function x = f_loop( m, idx )

% Directly indexing in a simple loop

x = zeros( size(m) );

for ii = 1:numel(idx)

x( ii, idx(ii) ) = m( ii, idx(ii) );

end

end

function x = f_sp_loop( m, idx )

nI = numel(idx);

sz = size(m);

x = spalloc( sz(1), sz(2), nI ); % Initialize a sparse array.

for indI = 1:nI

x( indI, idx(indI) ) = m( indI, idx(indI) ); % This generates a warning (inefficient)

end

end

function x = f_sp_sub2ind( m, idx )

nI = numel(idx);

sz = size(m);

x = sparse( 1:nI, idx, m(sub2ind( size(m), 1:numel(idx), idx )), sz(1), sz(2), nI);

end

2 Code for profiling:

function q51605093_MB()

K = 15; % Max loop variable

M = 2^(K-1); N = 2^K; % size of matrix

m = rand( M, N ); % random matrix

idx = randperm( N, M ); % column indices

% Define anonymous functions with no inputs, for timeit, and run

f = cell(4,1);

f{1} = f_sub2ind( m, idx );

f{2} = f_loop( m, idx );

f{3} = f_sp_loop( m, idx );

f{4} = f_sp_sub2ind( m, idx );

% assert(isequal(f{:}));

end

... the rest is the same as above